
Documentation

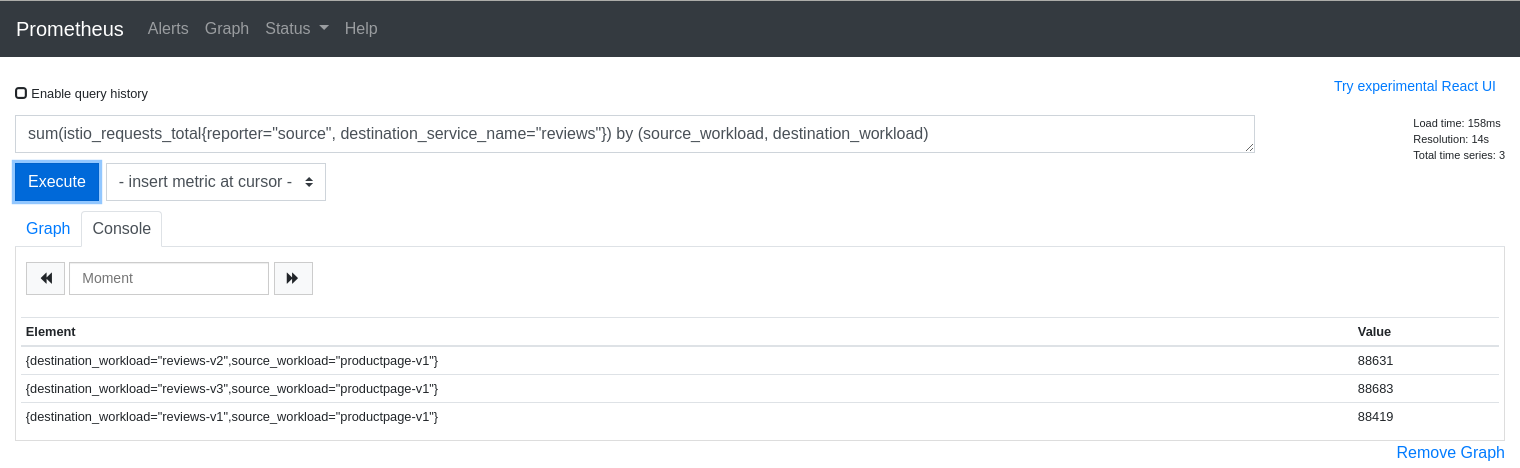

- 1: Installation

- 1.1: Quick Start

- 1.2: Installation Guide

- 1.2.1: Prerequisites

- 1.2.2: Install via Helm

- 1.2.3: Install via OperatorHub

- 1.2.4: The Kiali CR

- 1.2.5: The OSSMConsole CR

- 1.2.6: Accessing Kiali

- 1.2.7: Advanced Install

- 1.2.8: Example Install

- 1.3: Deployment Options

- 2: Configuration

- 2.1: Authentication Strategies

- 2.1.1: Anonymous strategy

- 2.1.2: Header strategy

- 2.1.3: OpenID Connect strategy

- 2.1.4: OpenShift strategy

- 2.1.5: Token strategy

- 2.1.6: Session options

- 2.2: Console Customization

- 2.3: Custom Dashboards

- 2.4: Debugging Kiali

- 2.5: Istio Environment

- 2.6: Kiali CR Reference

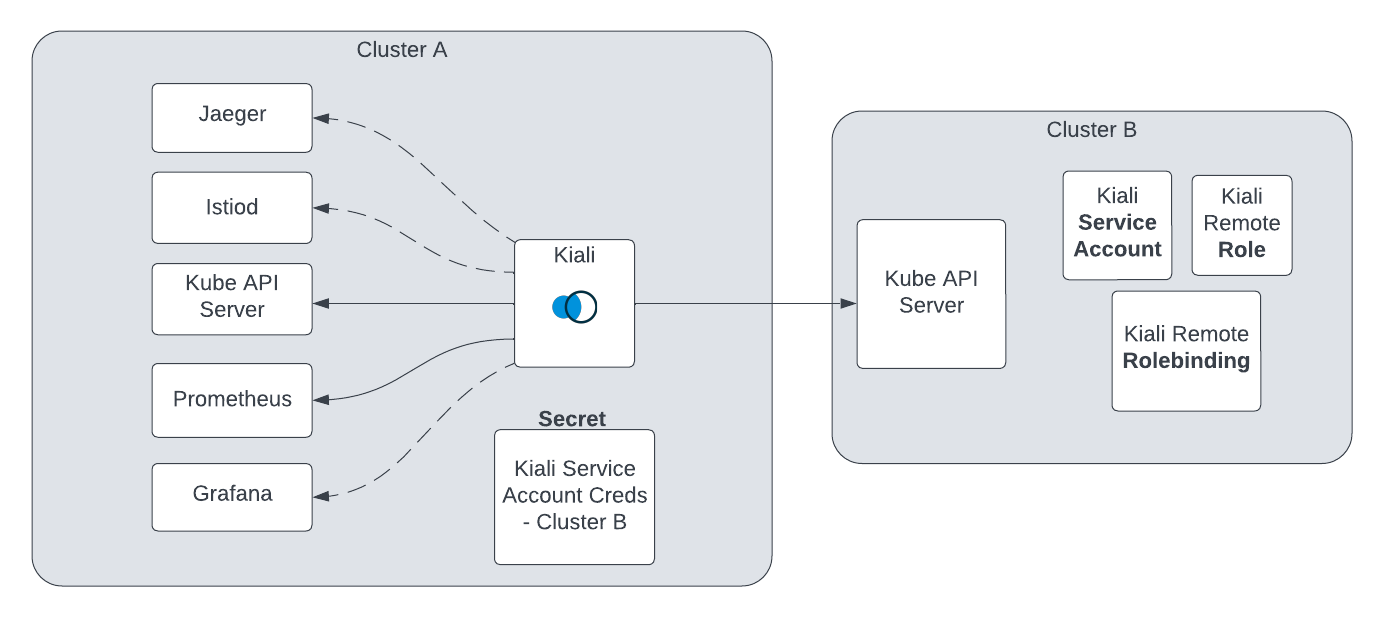

- 2.7: Multi-cluster

- 2.8: Namespace access control

- 2.9: Namespace Management

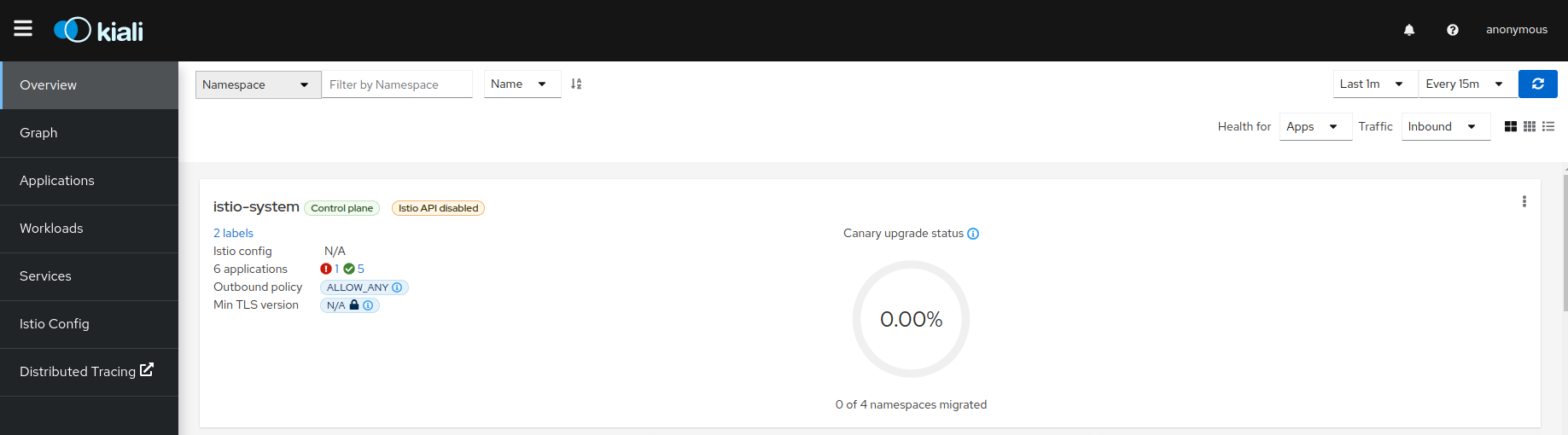

- 2.10: No Istiod Access

- 2.11: OSSMConsole CR Reference

- 2.12: Prometheus, Tracing, Grafana

- 2.12.1: Grafana

- 2.12.2: Prometheus

- 2.12.3: Tracing

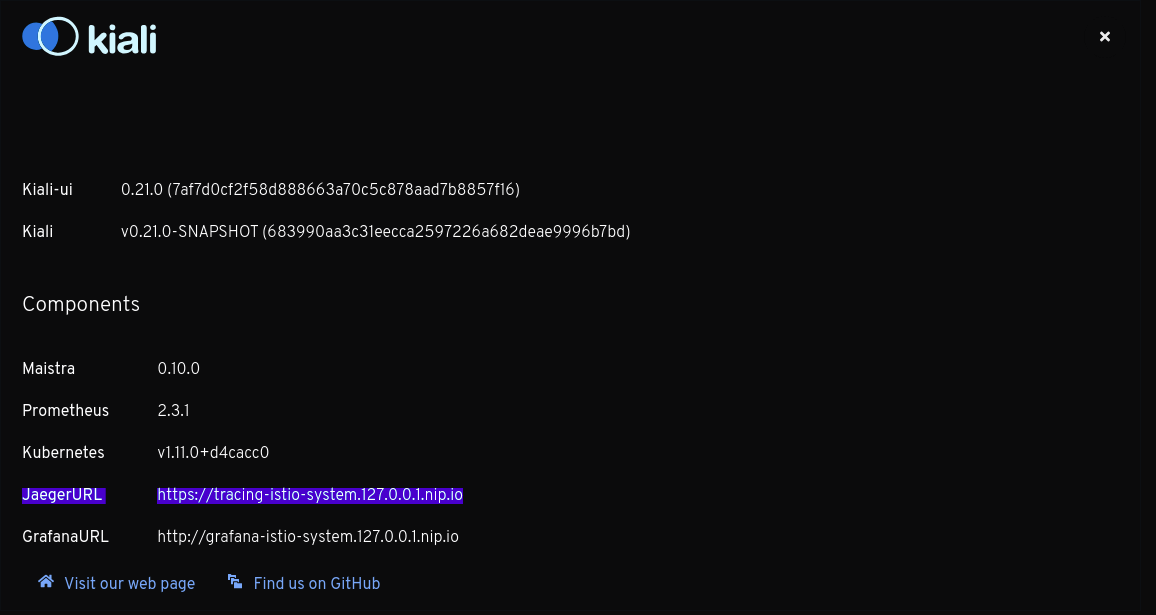

- 2.12.3.1: Jaeger

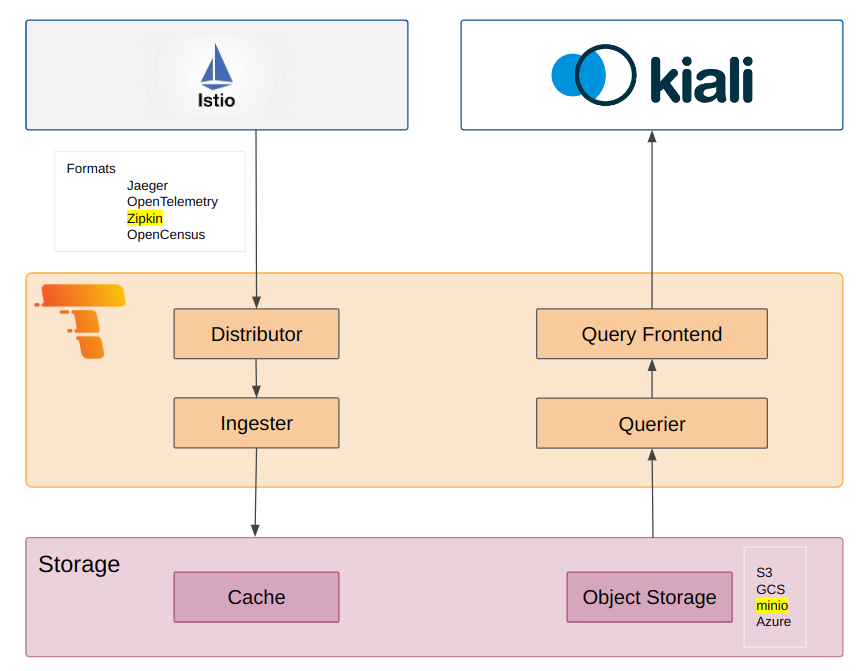

- 2.12.3.2: Grafana Tempo

- 2.13: Traffic Health

- 2.14: Virtual Machine workloads

- 3: Features

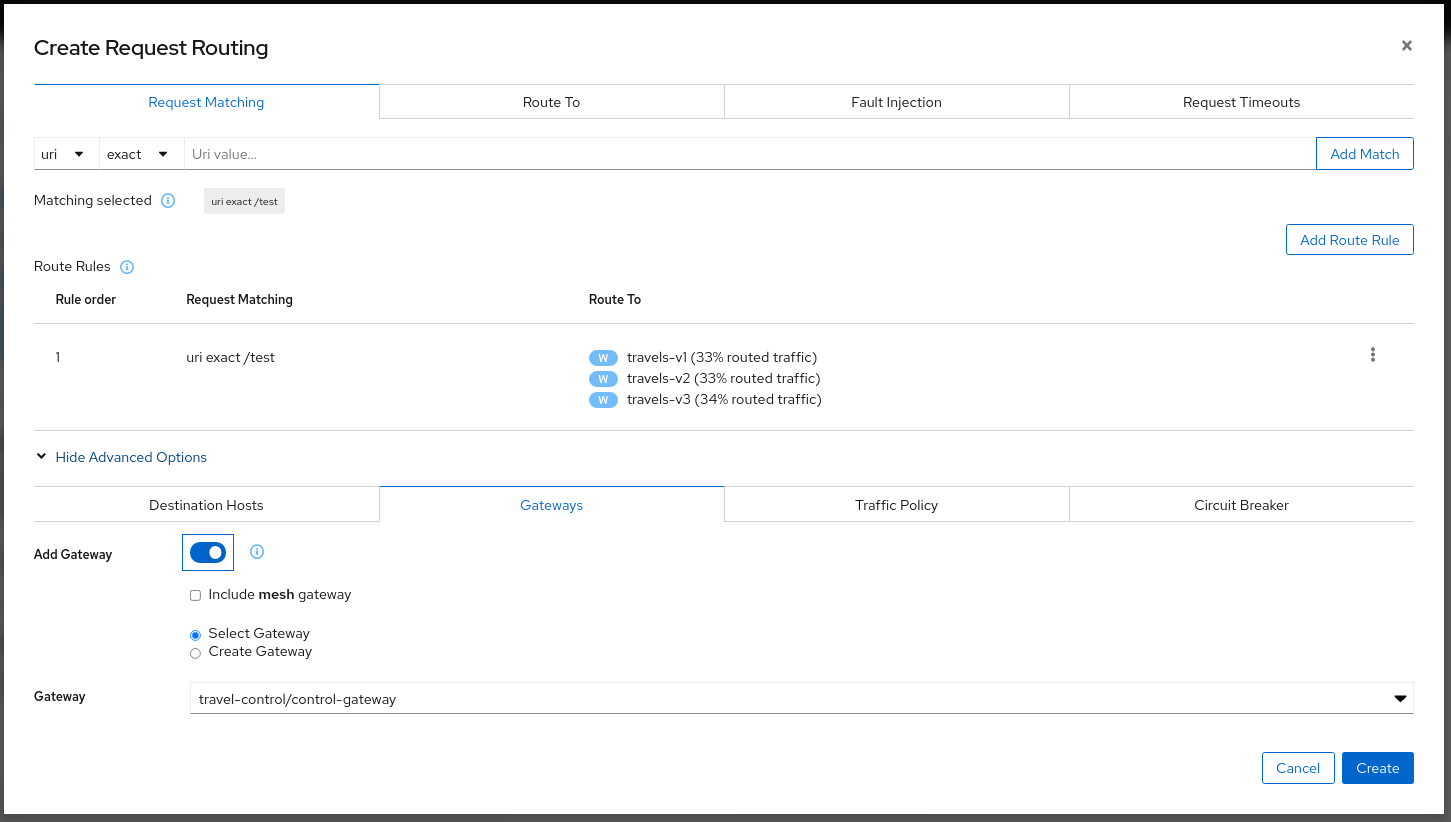

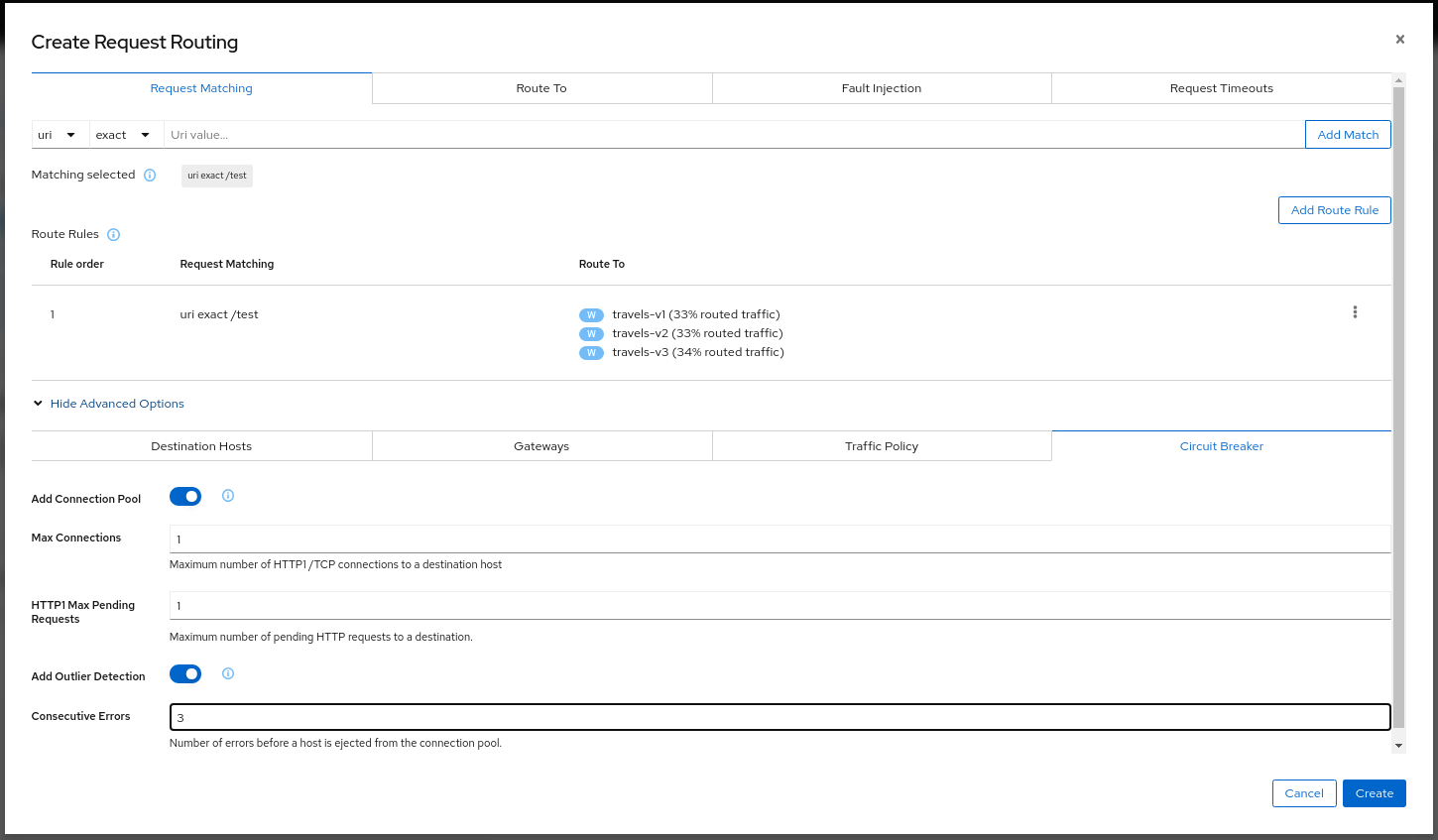

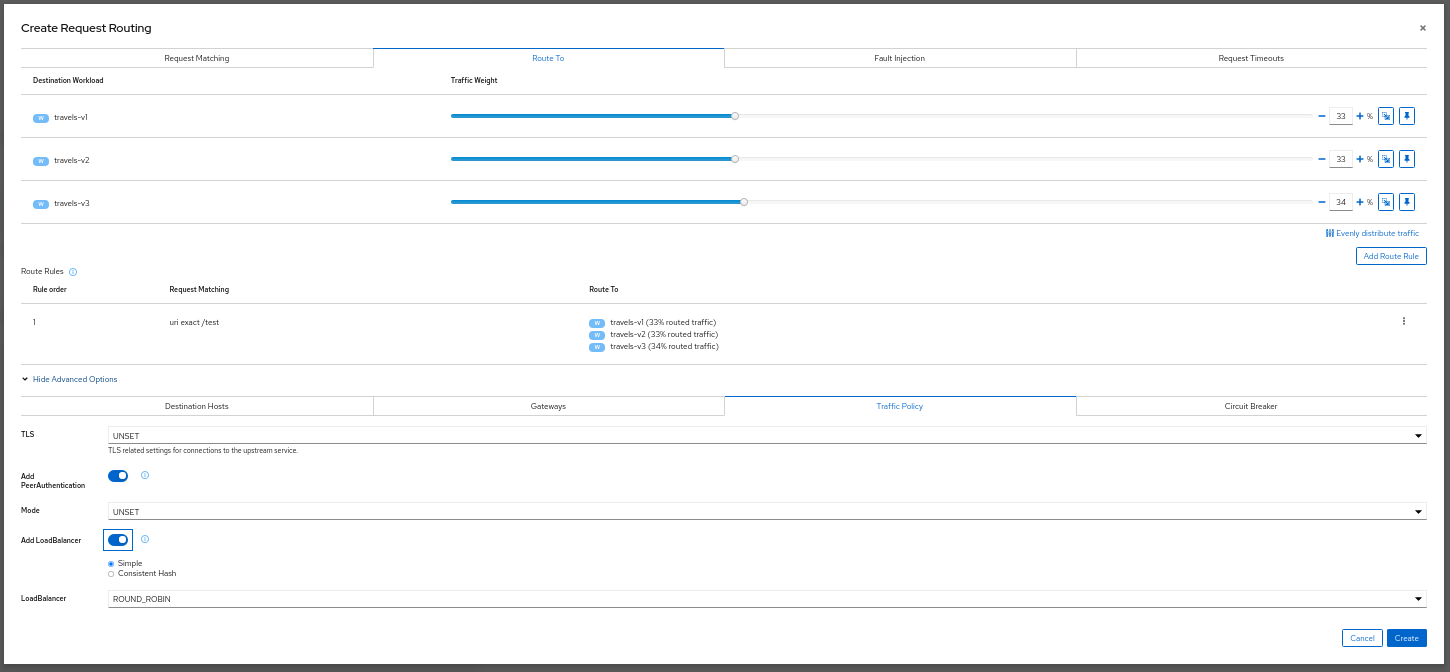

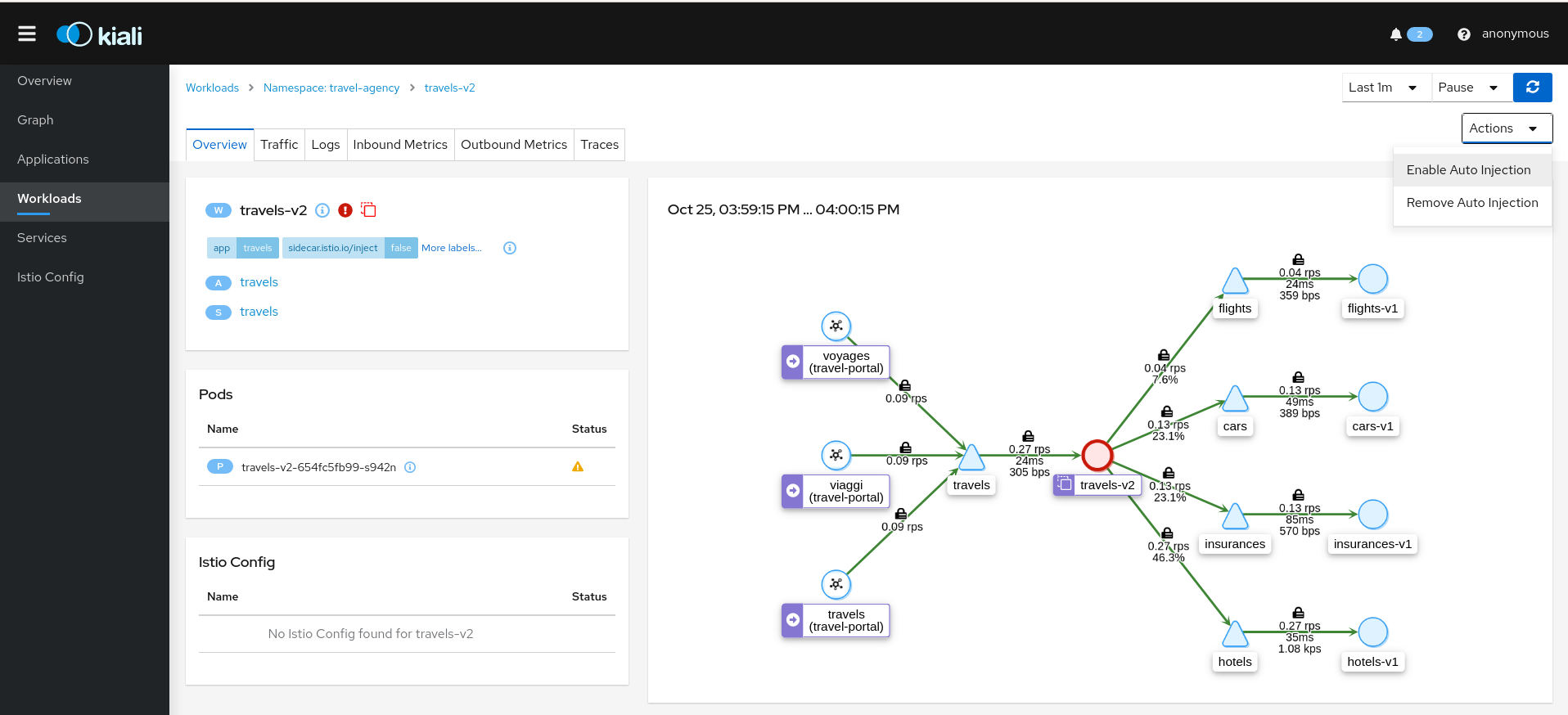

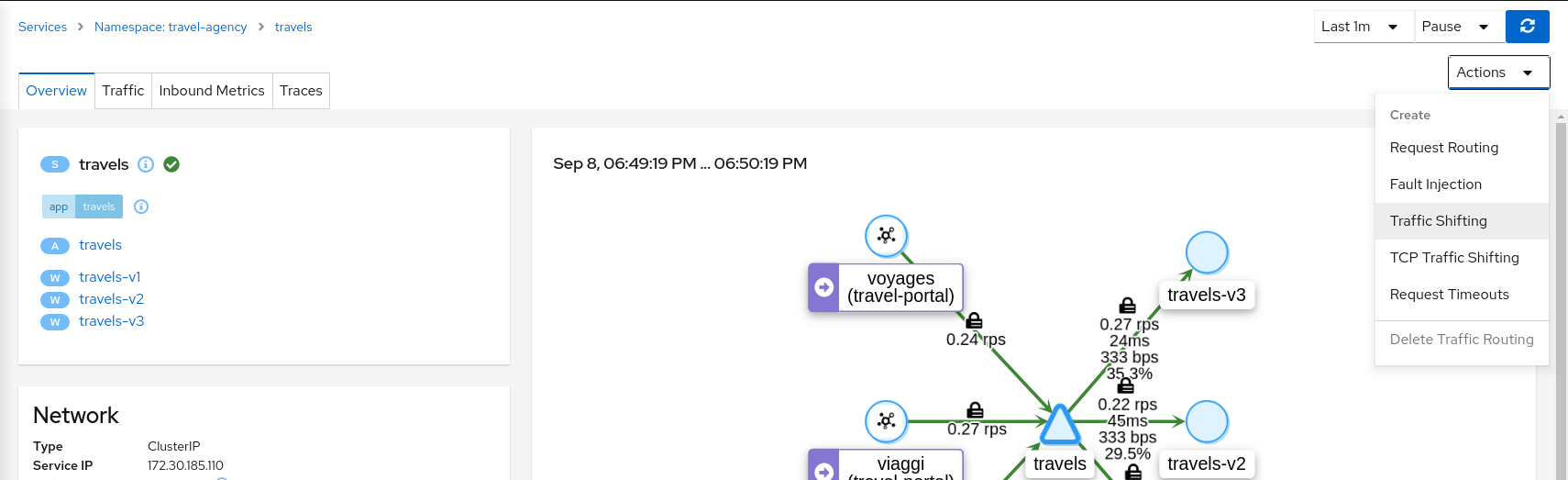

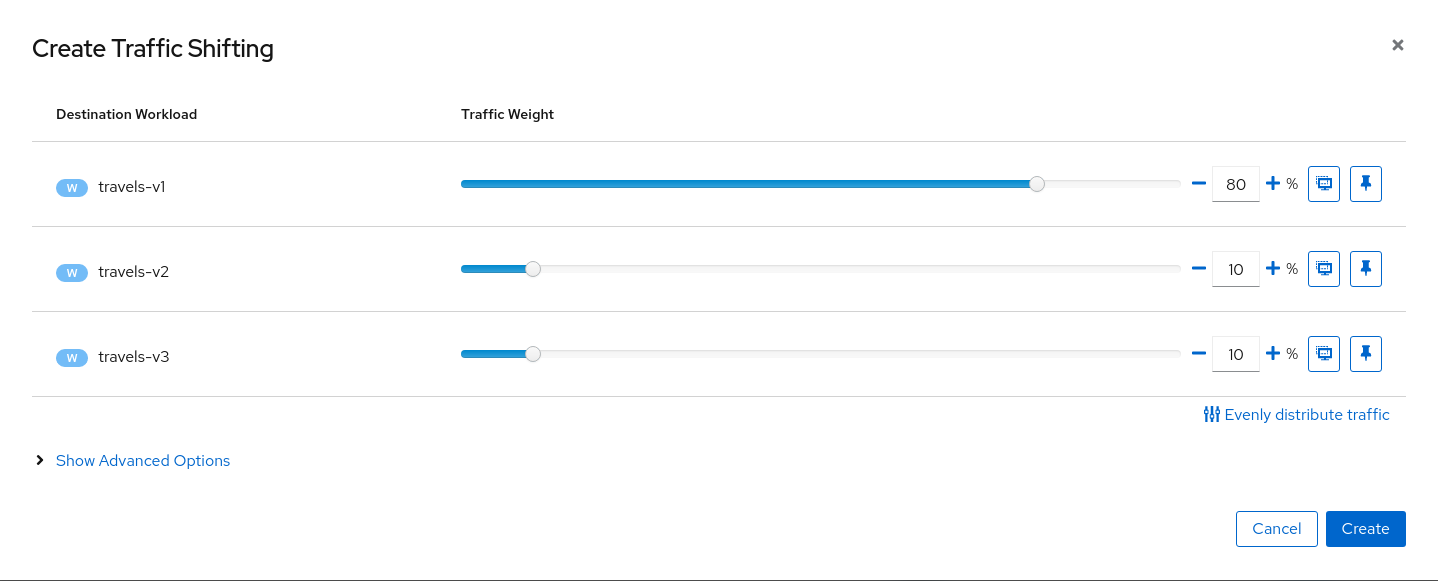

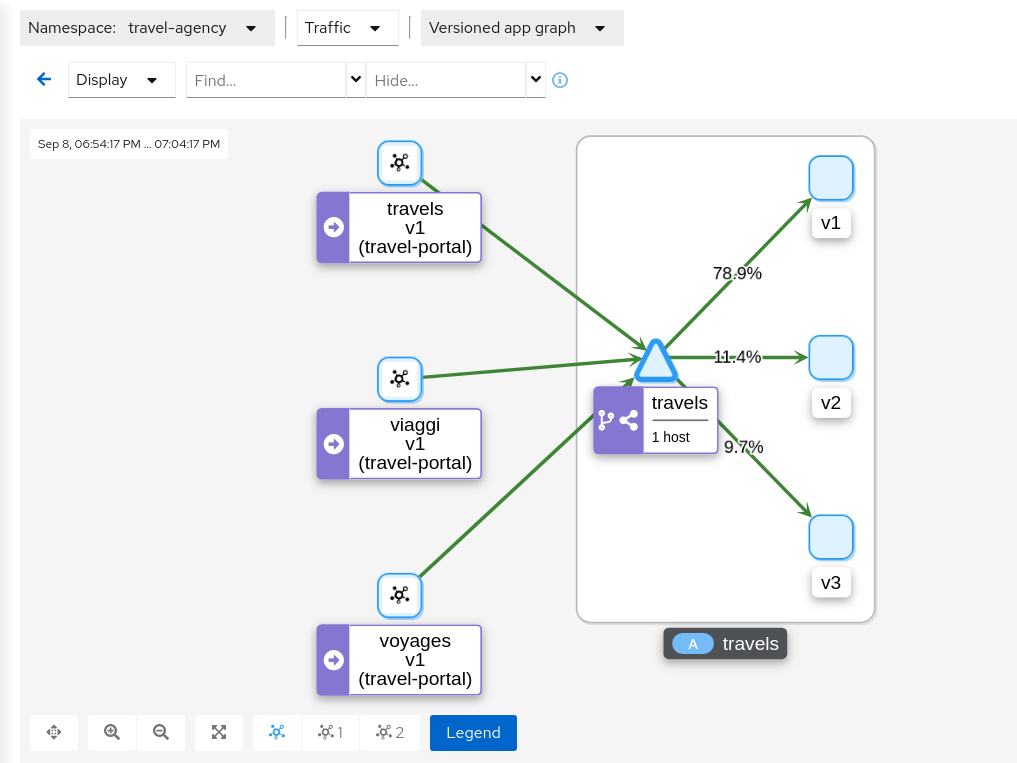

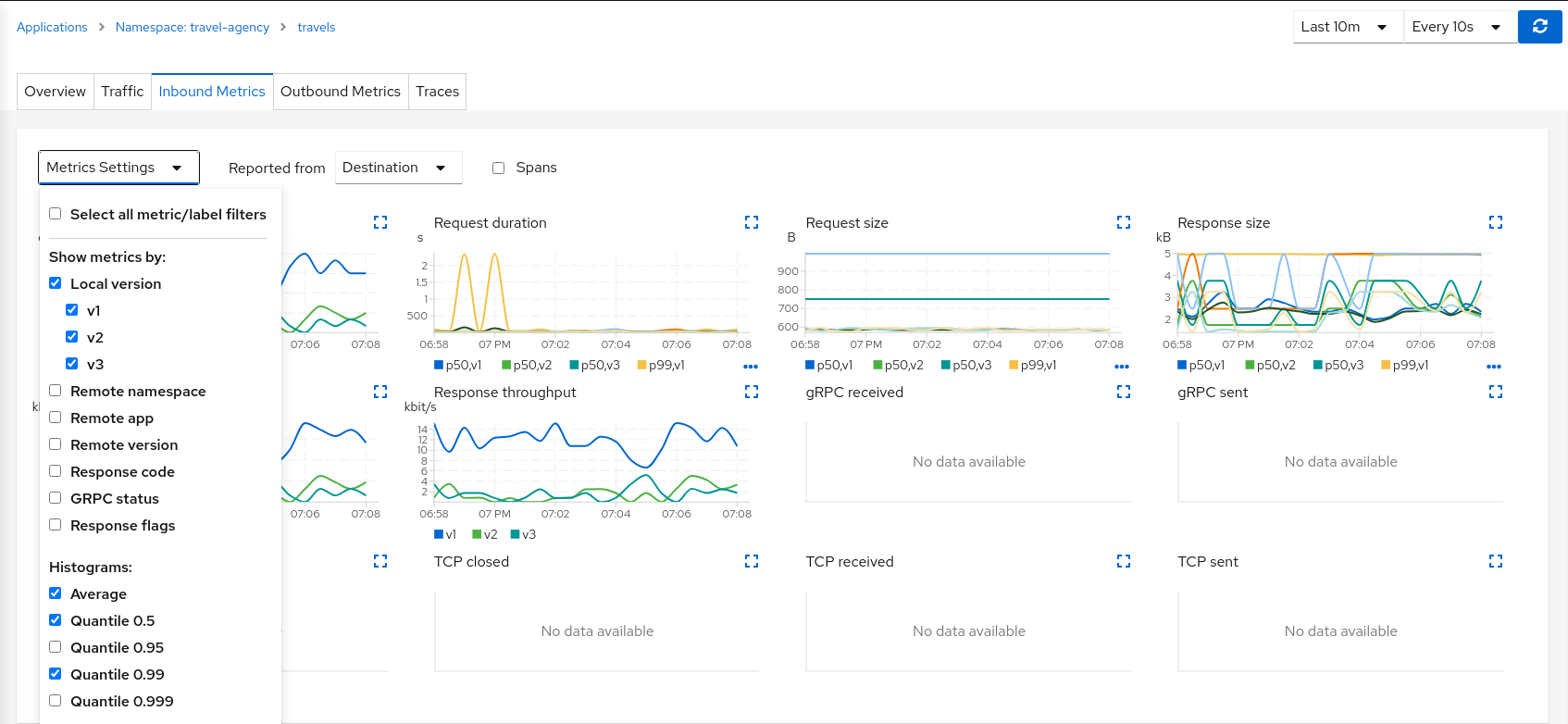

- 3.1: Application Wizards

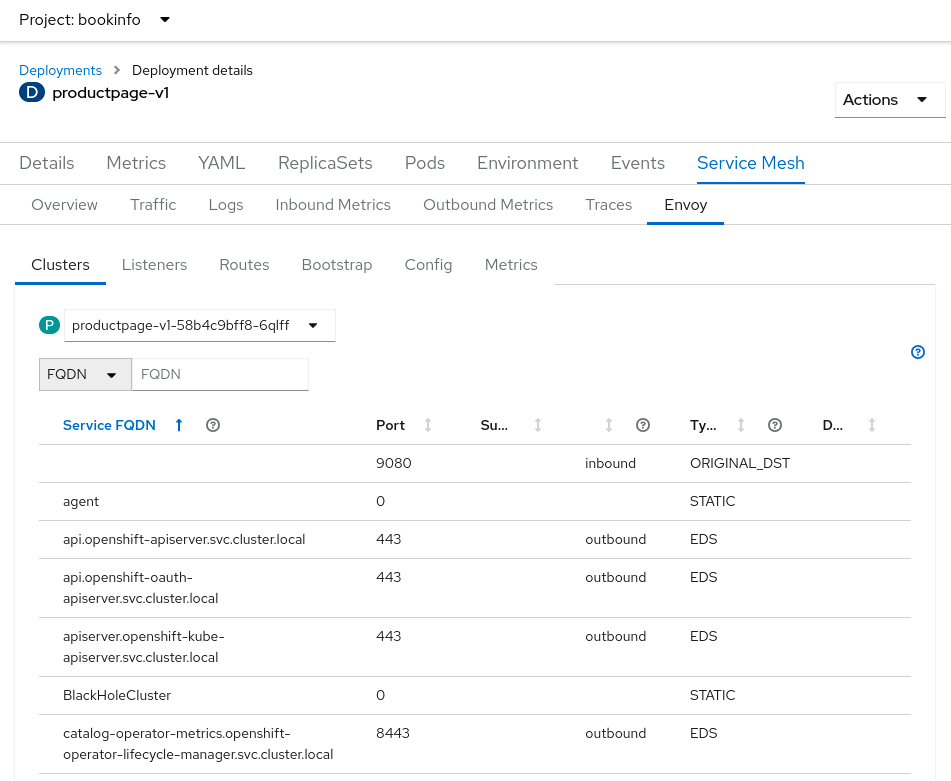

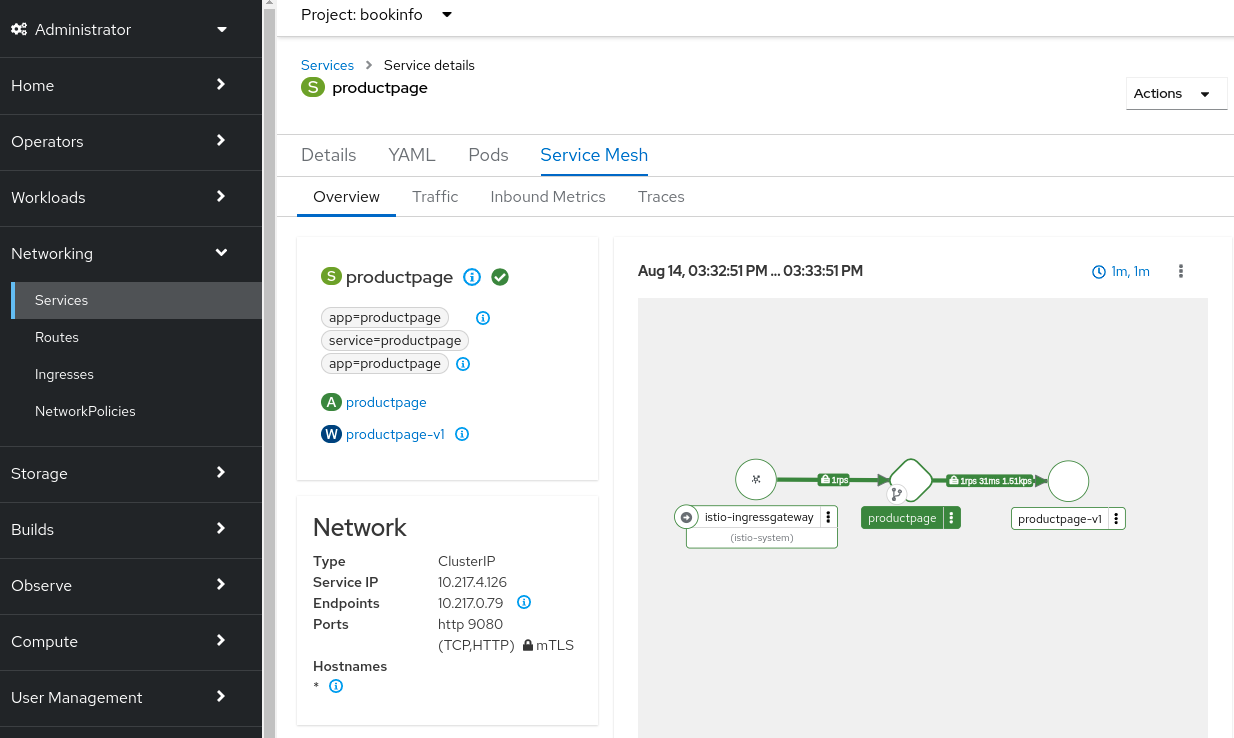

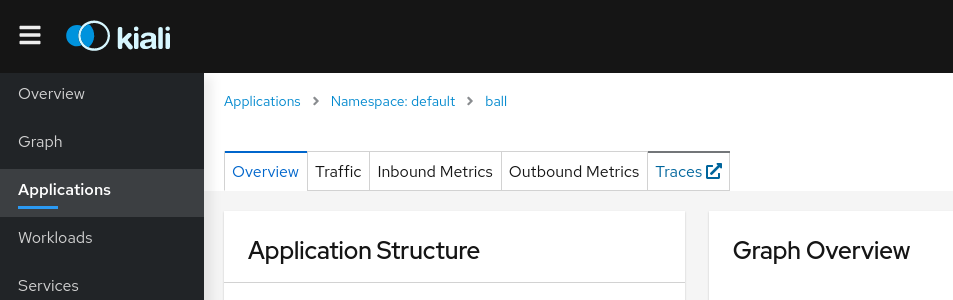

- 3.2: Detail Views

- 3.3: Health

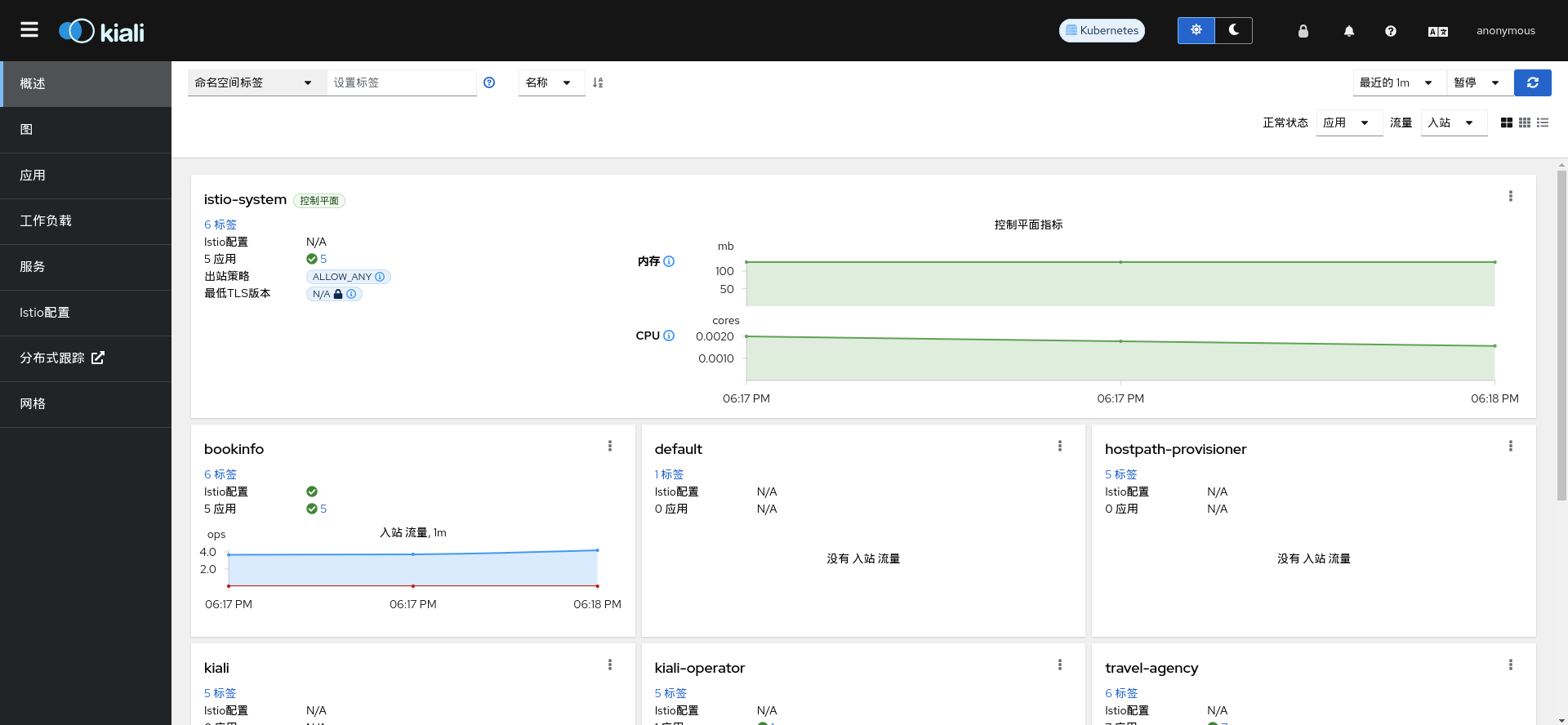

- 3.4: Internationalization

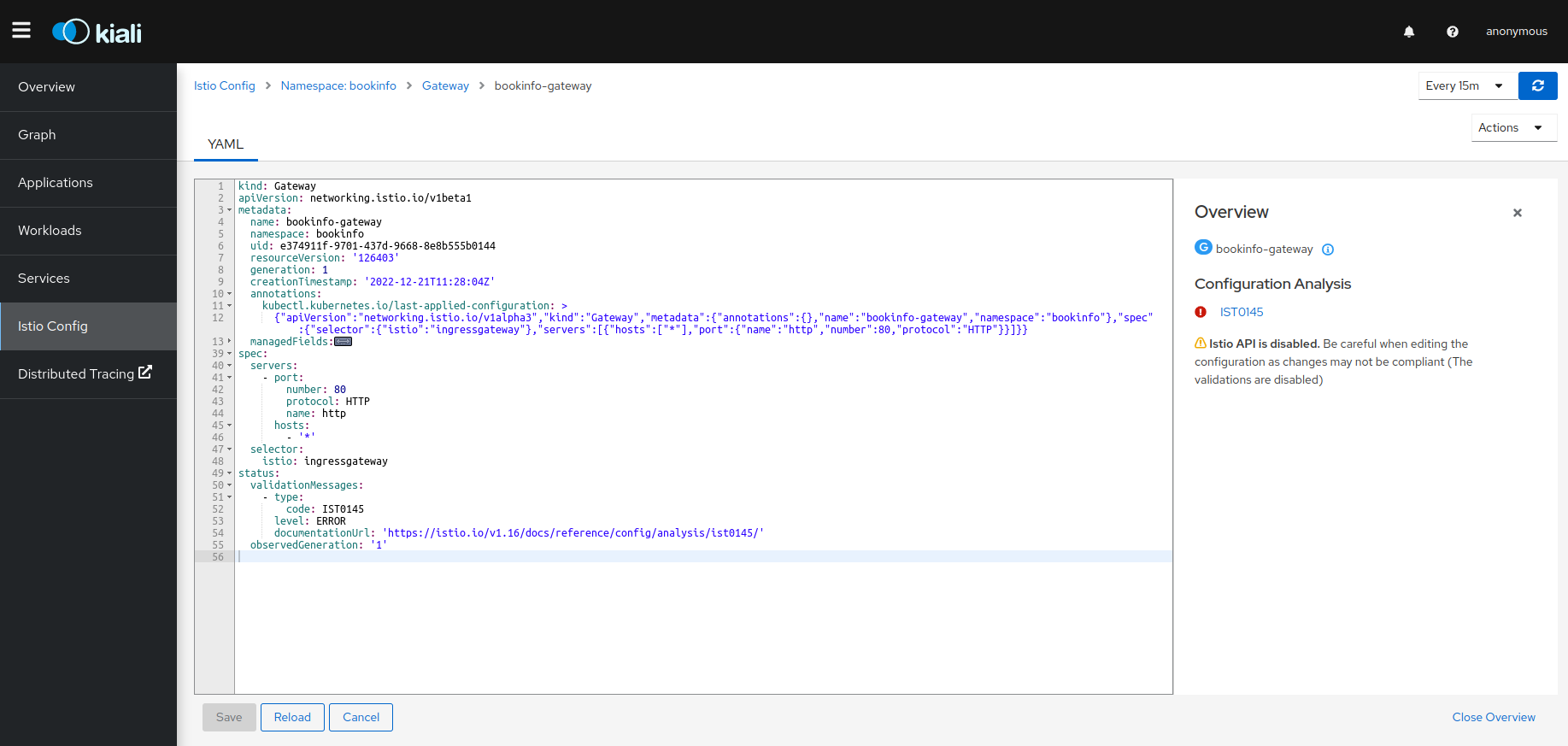

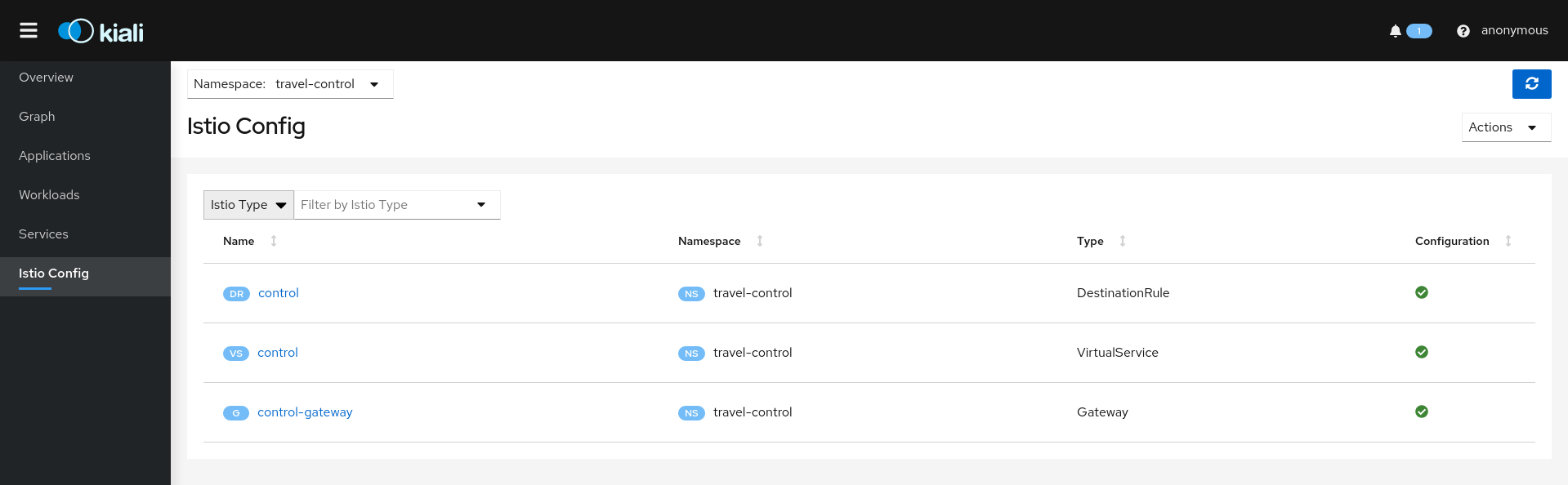

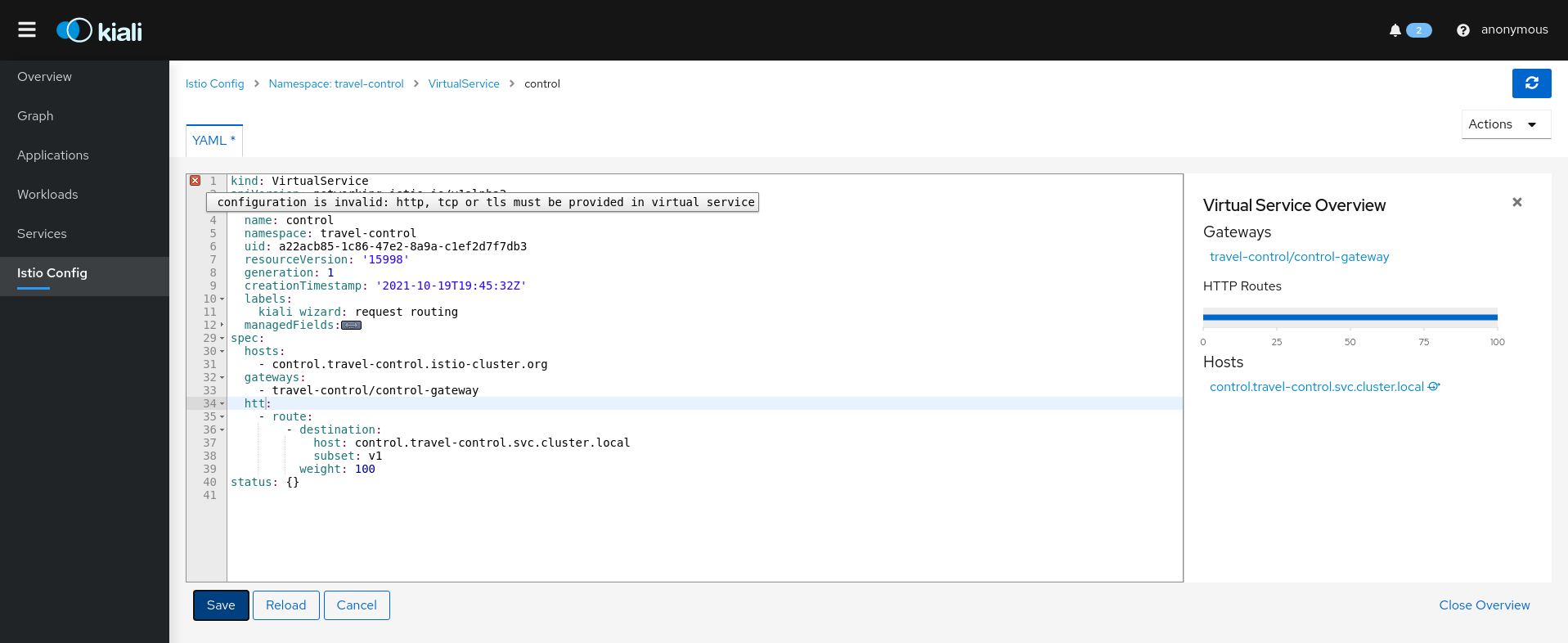

- 3.5: Istio Configuration

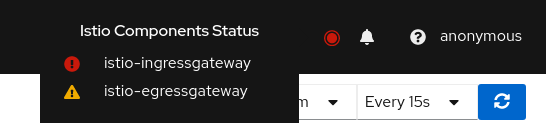

- 3.6: Istio Infrastructure Status

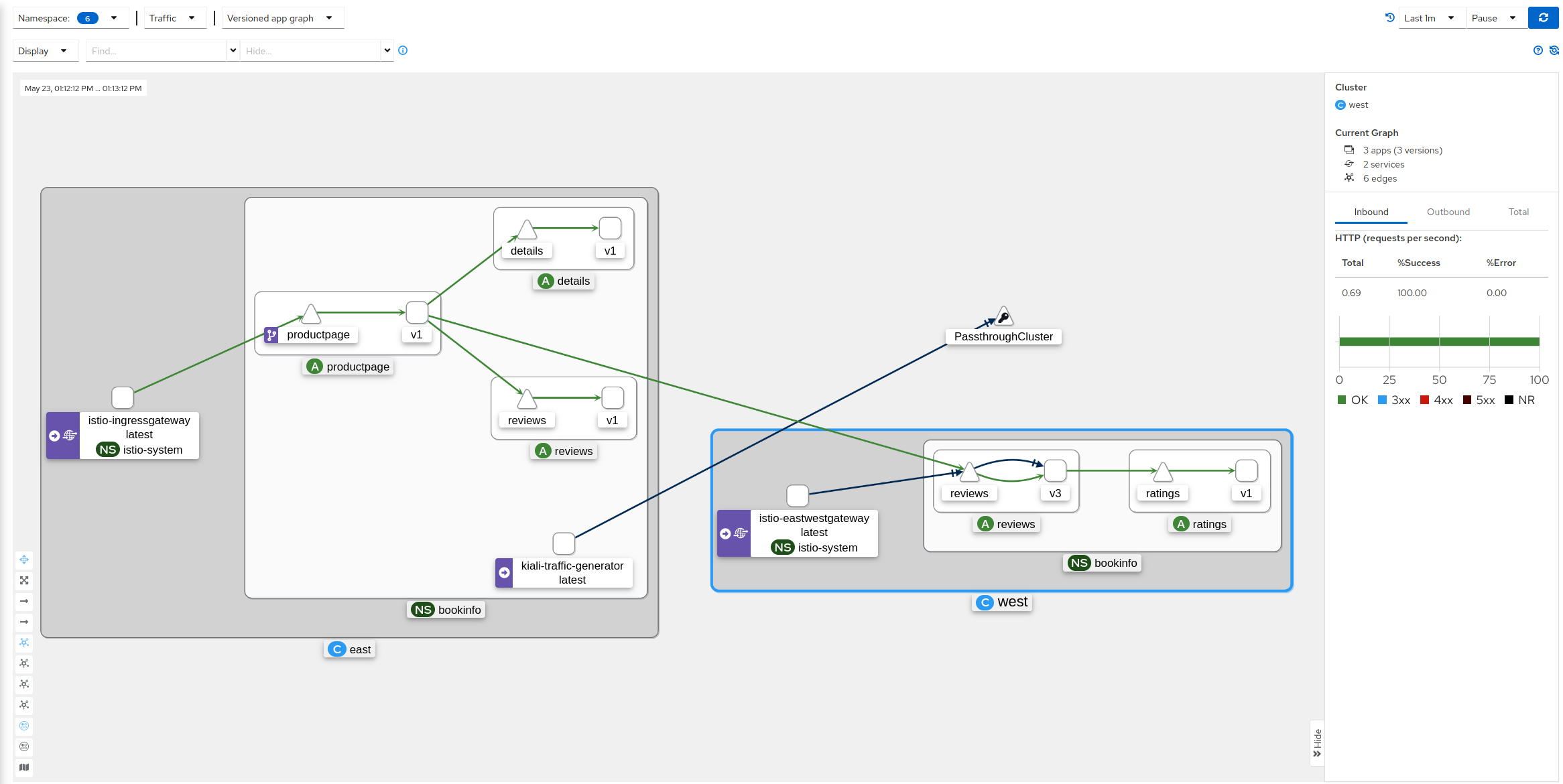

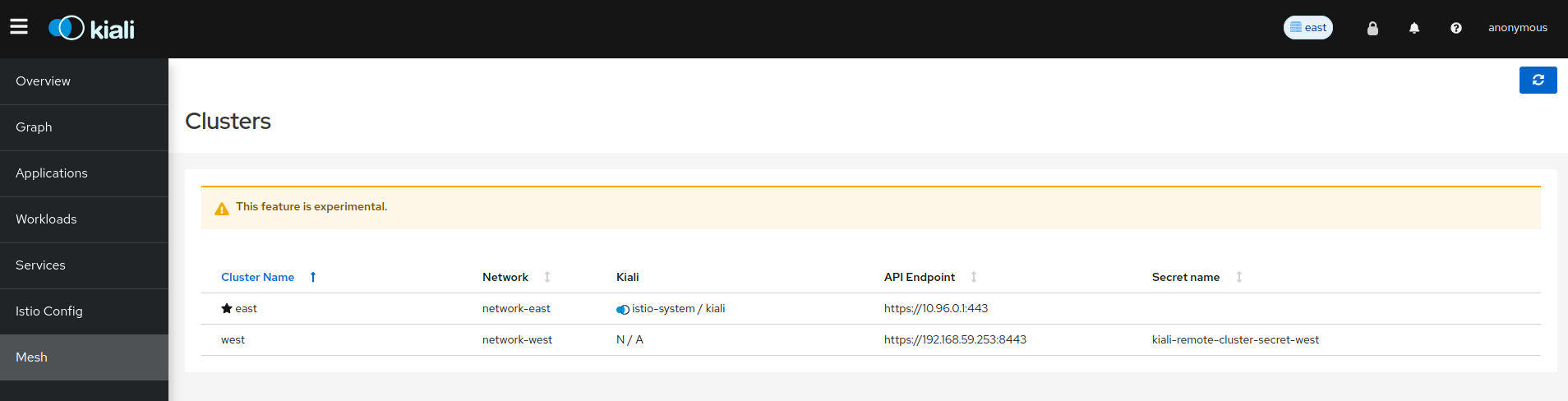

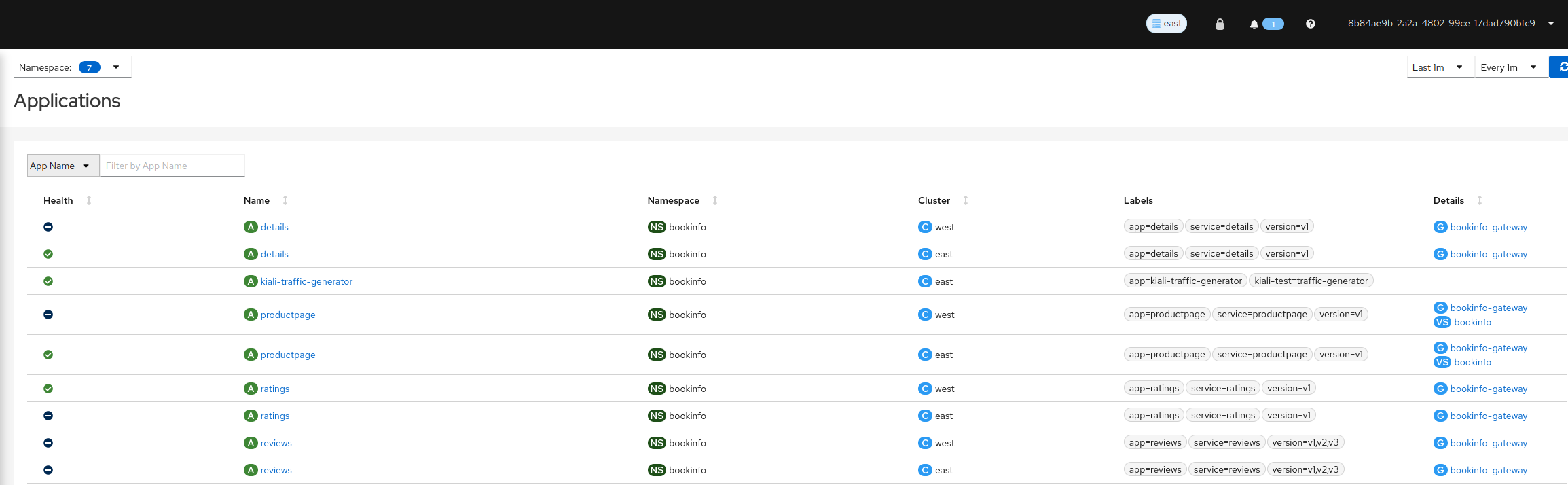

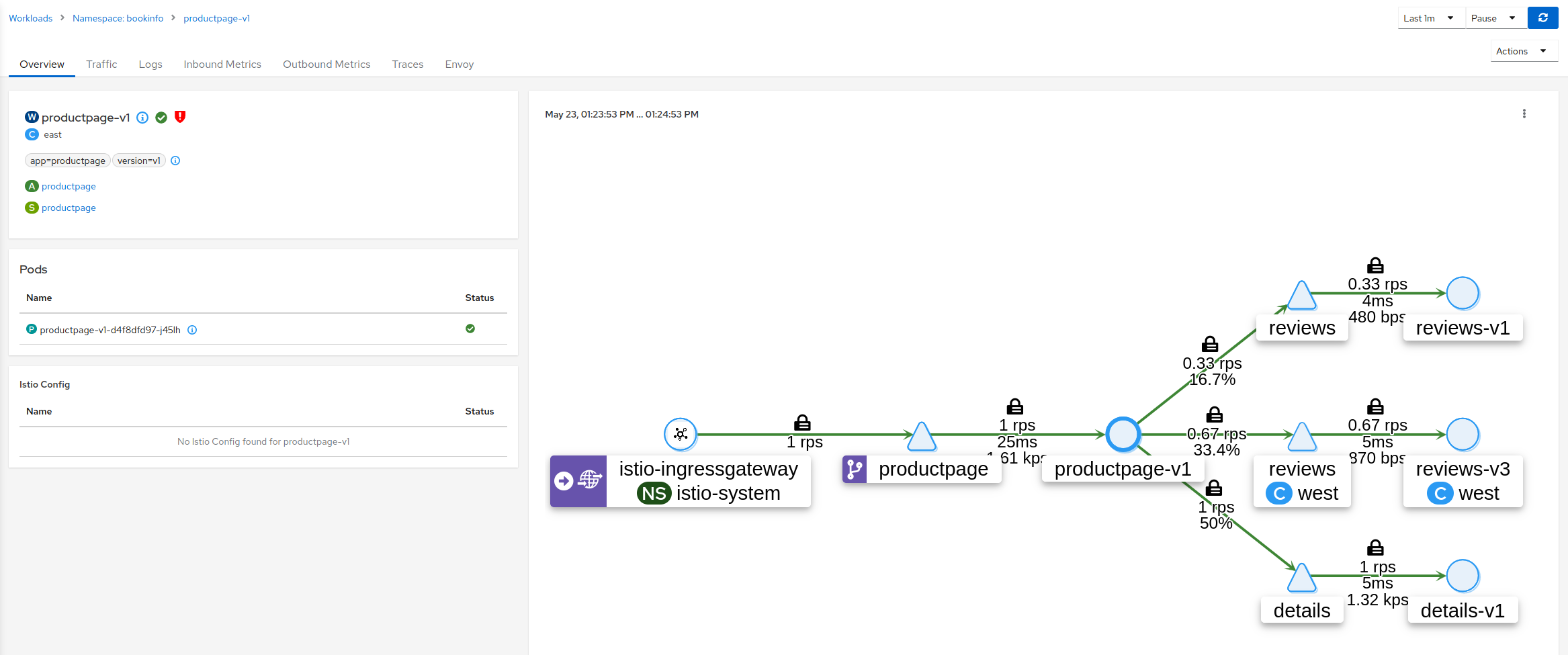

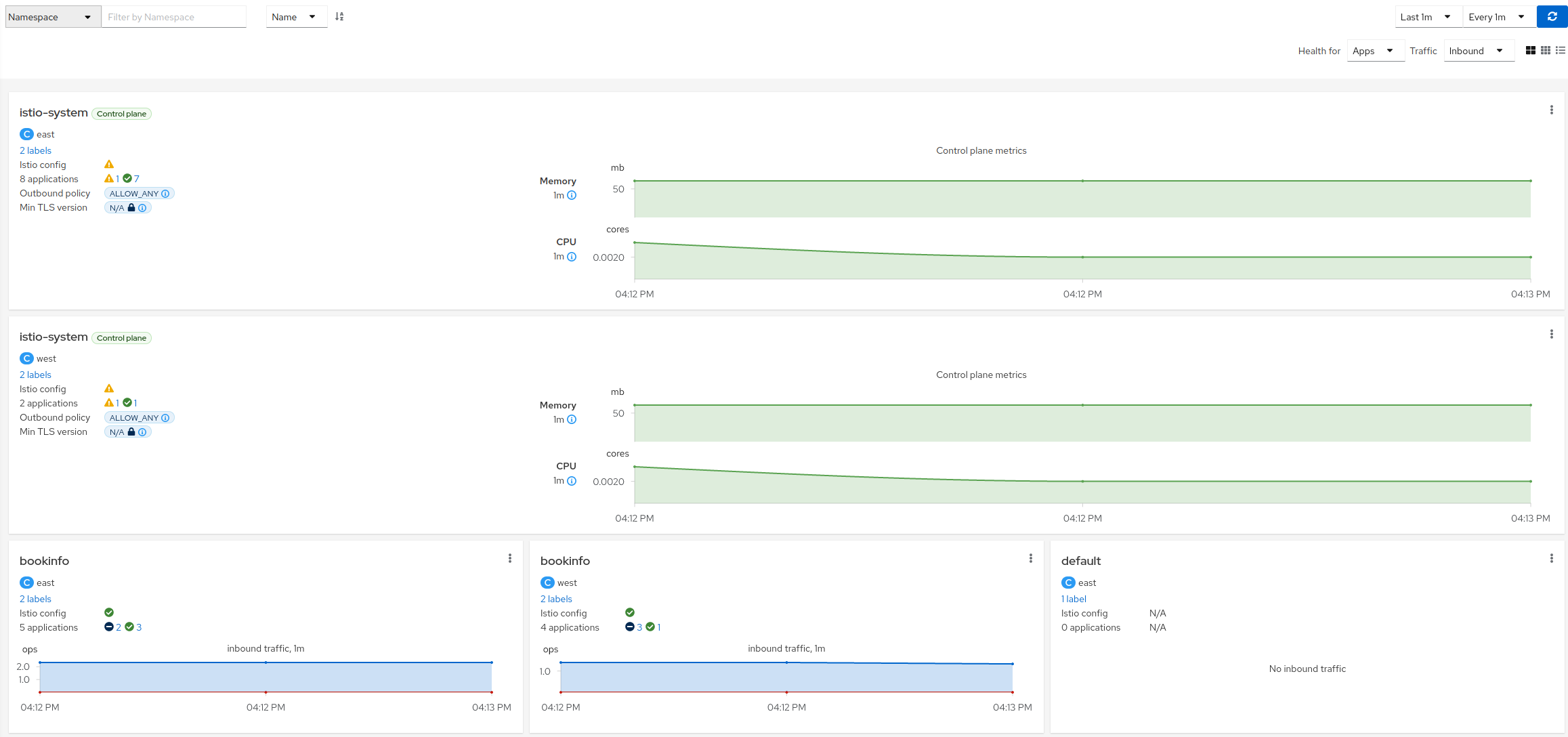

- 3.7: Multi-cluster

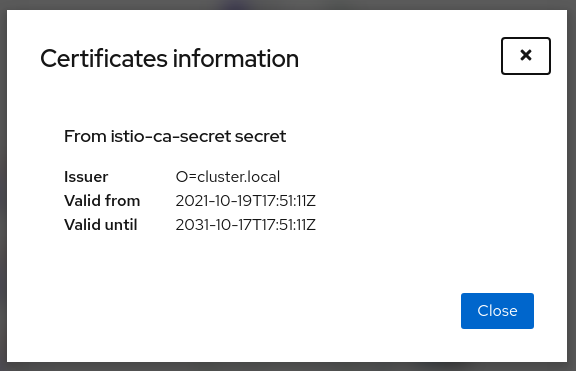

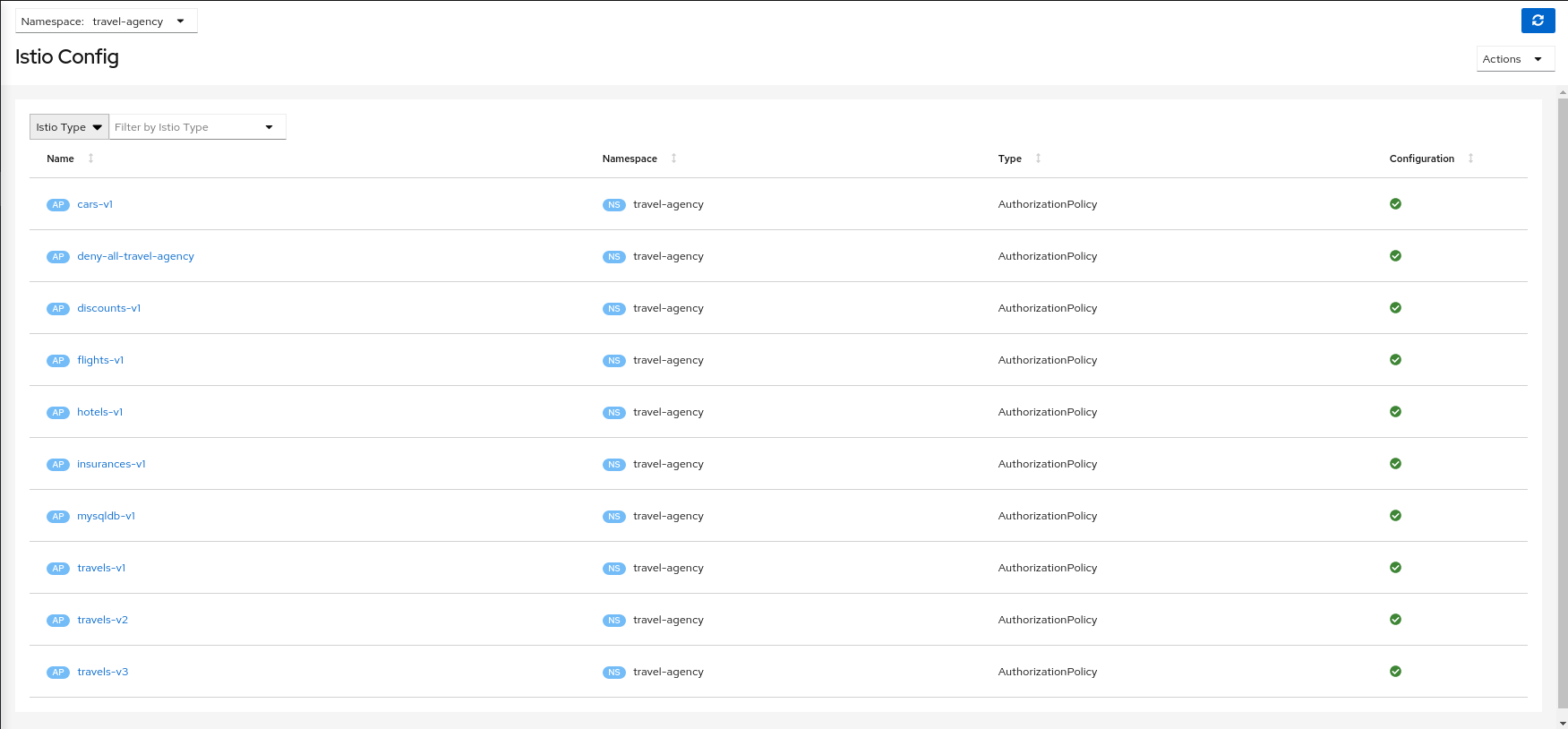

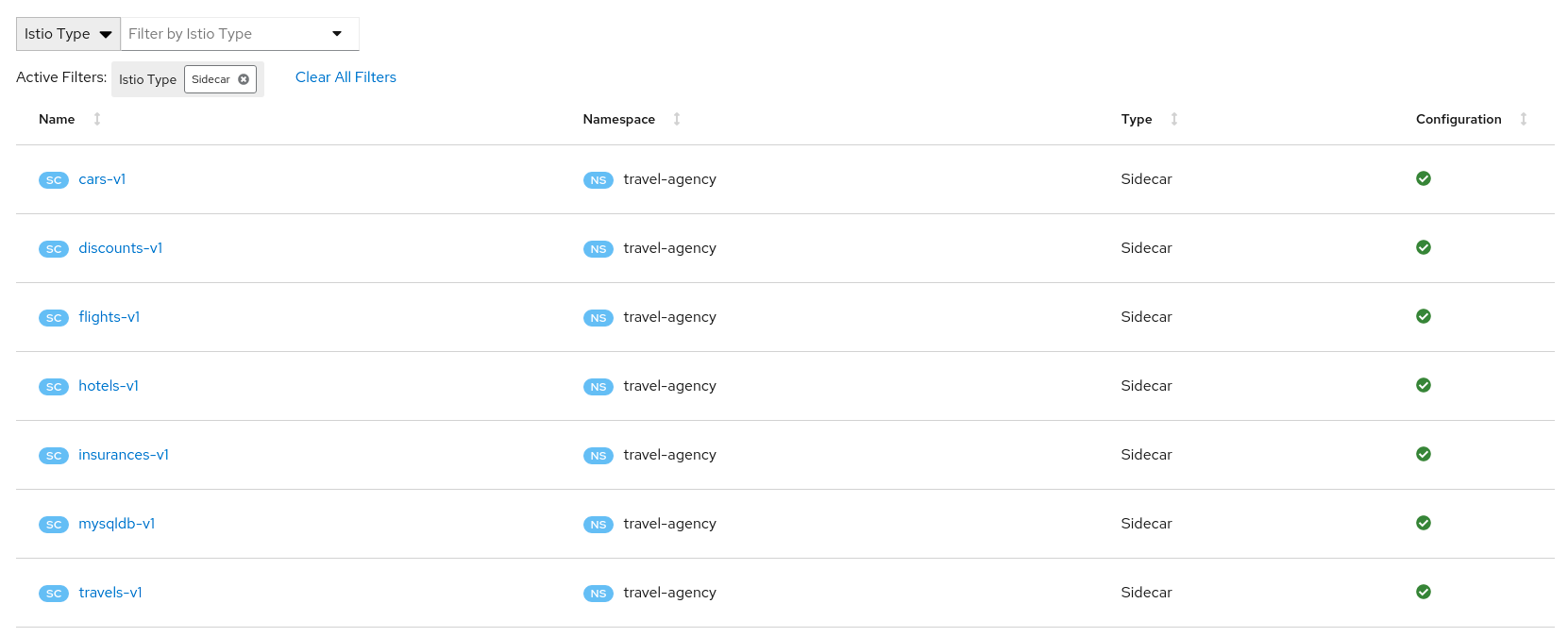

- 3.8: Security

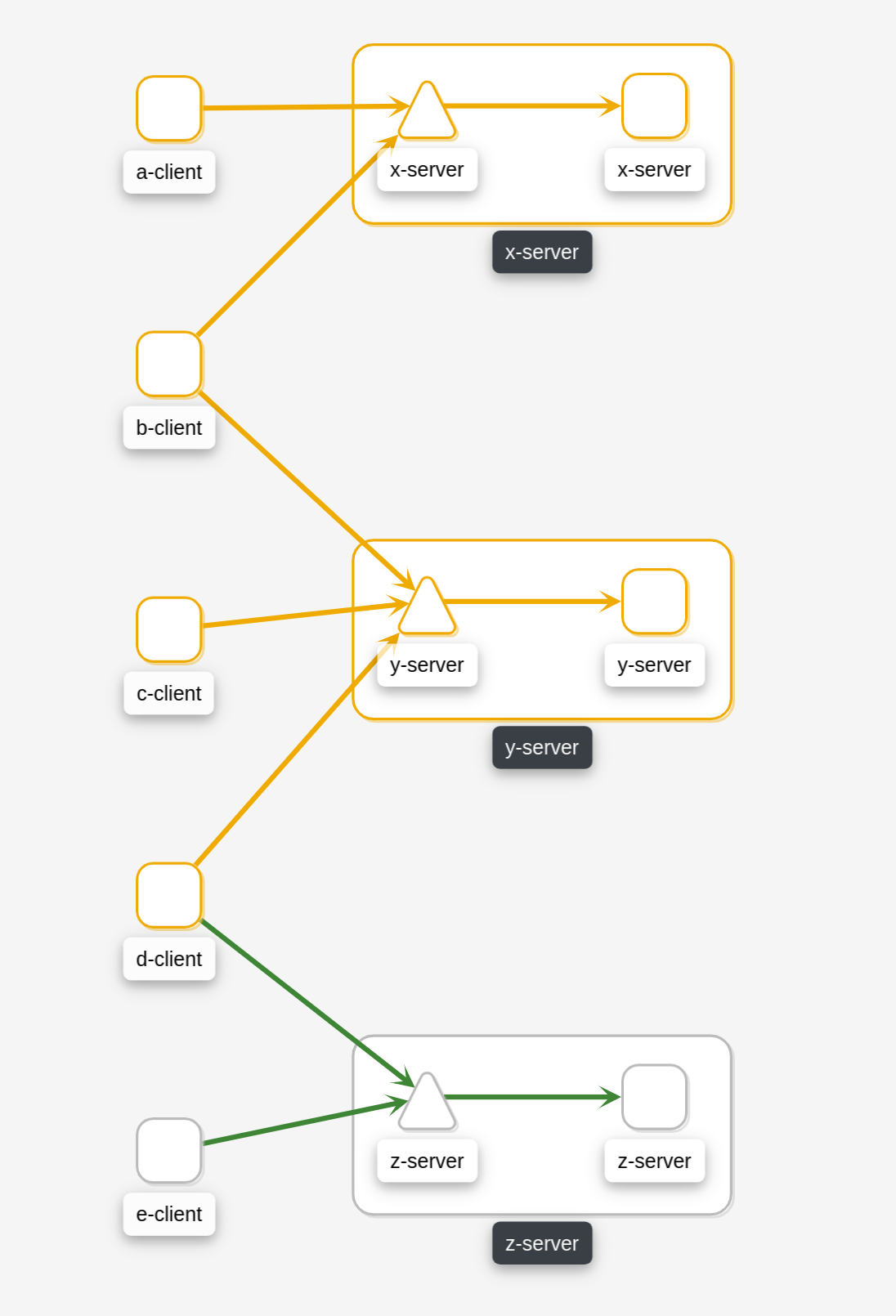

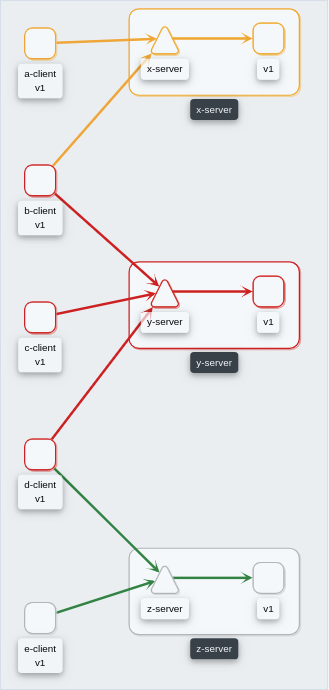

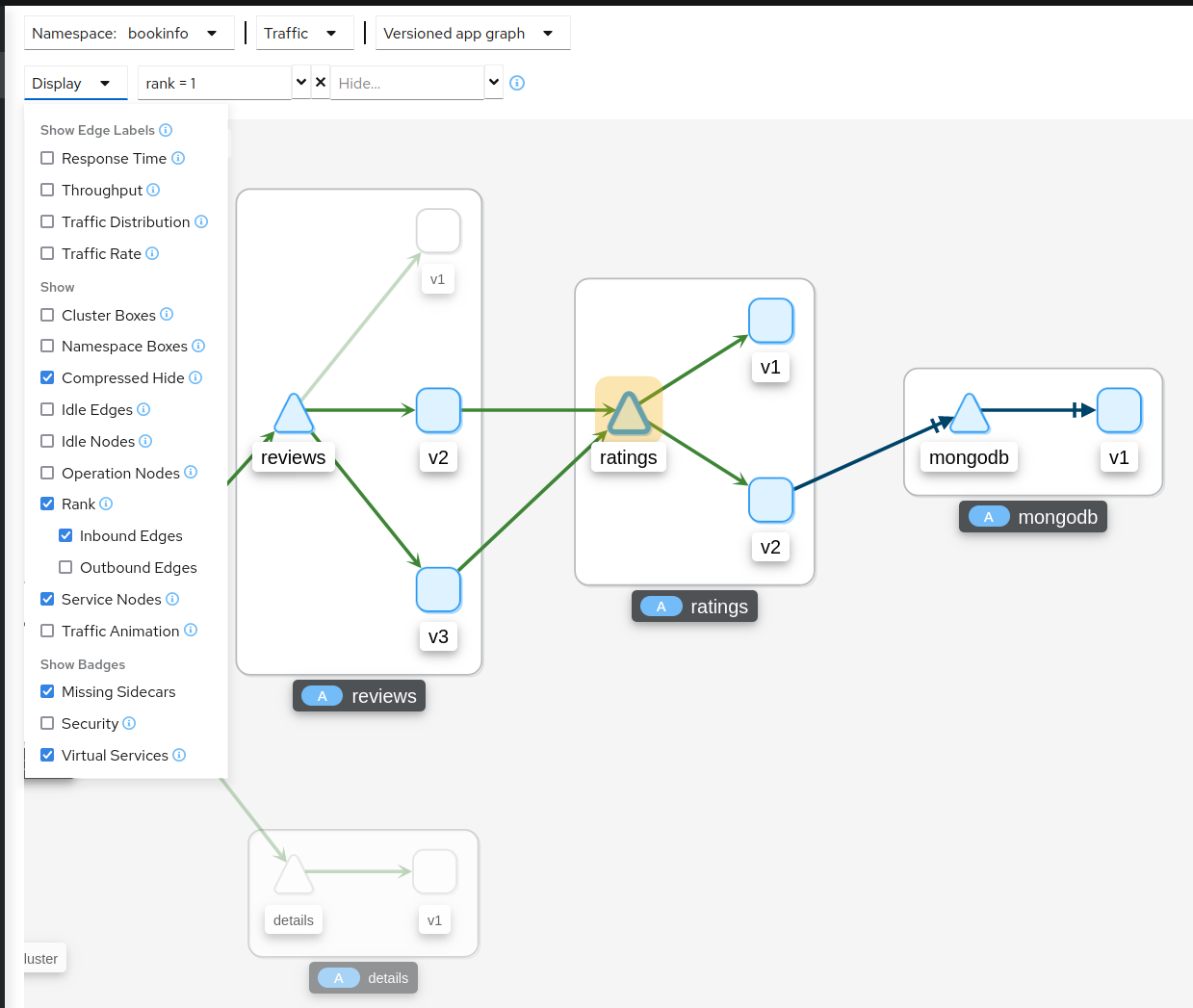

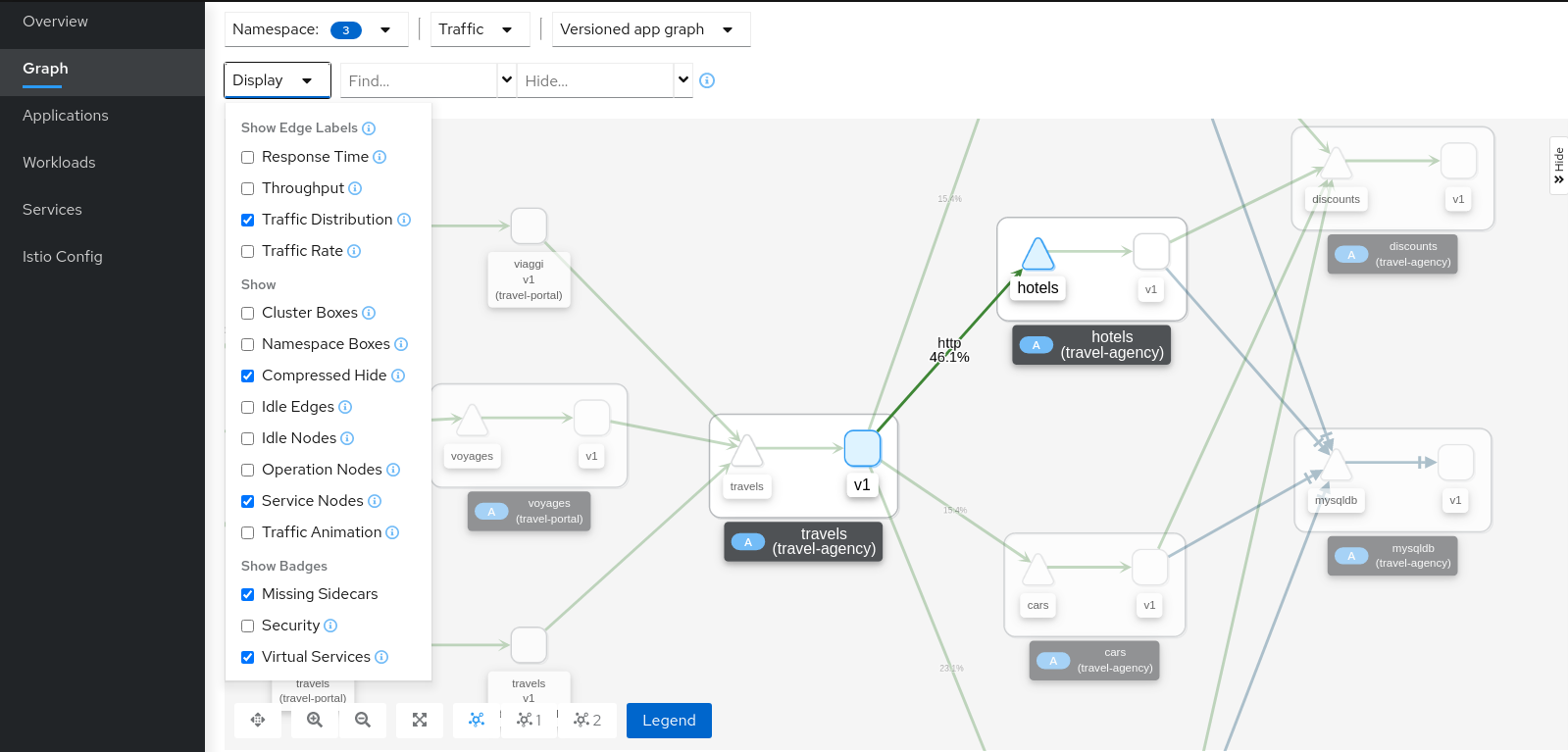

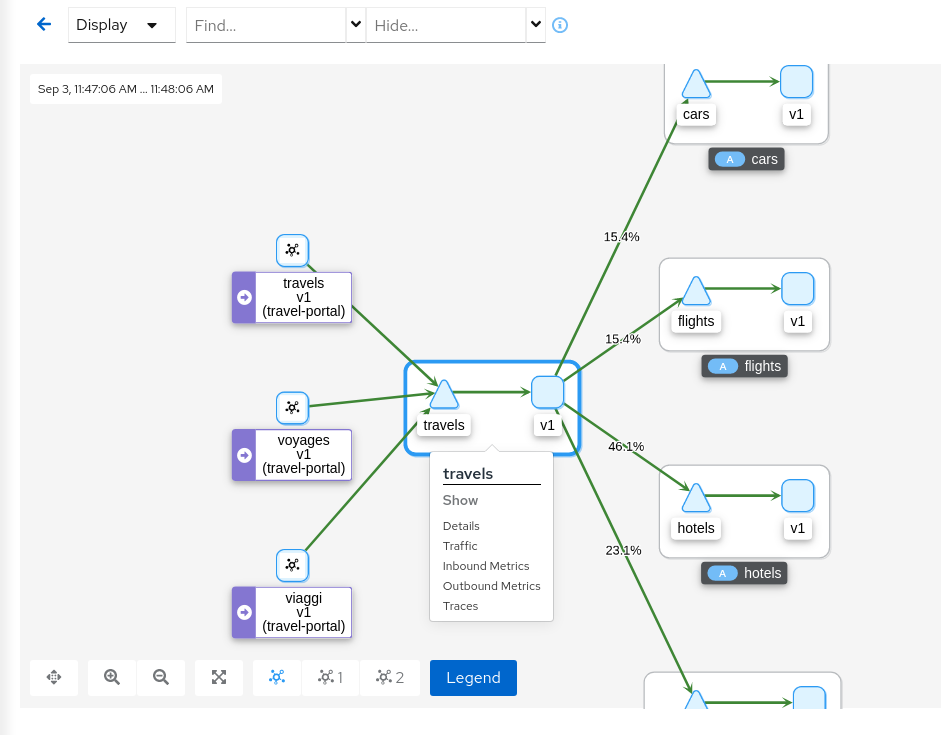

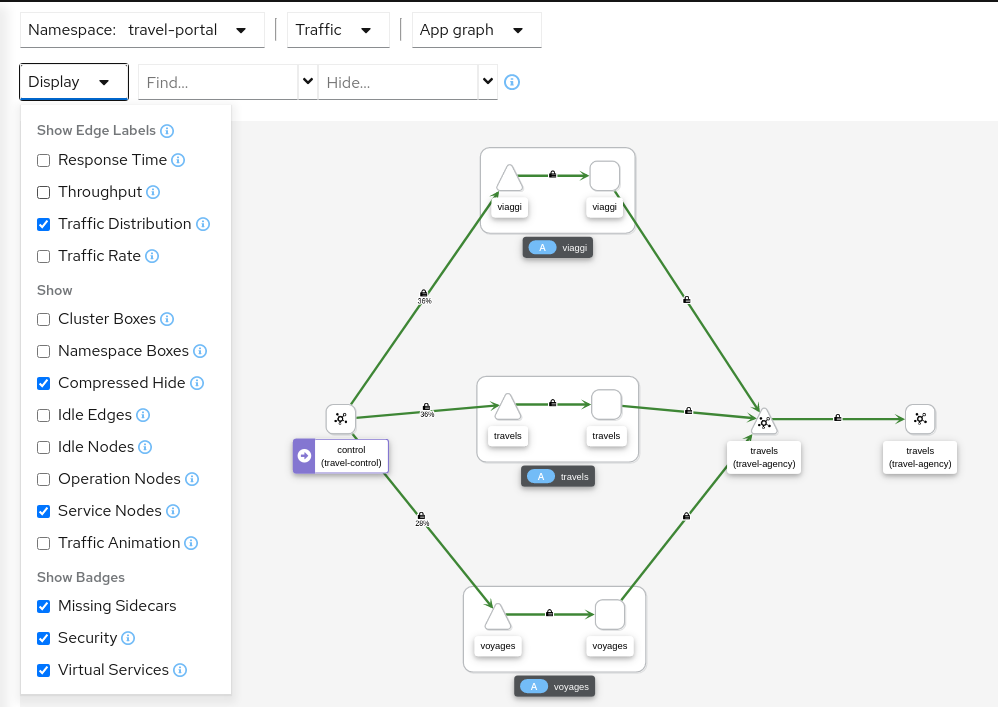

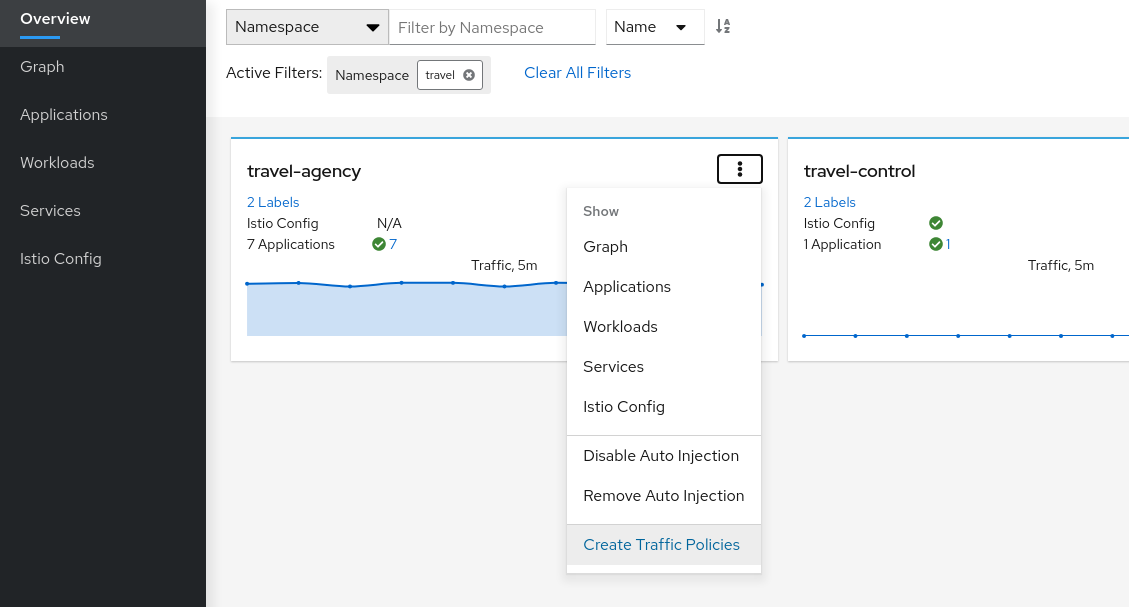

- 3.9: Topology

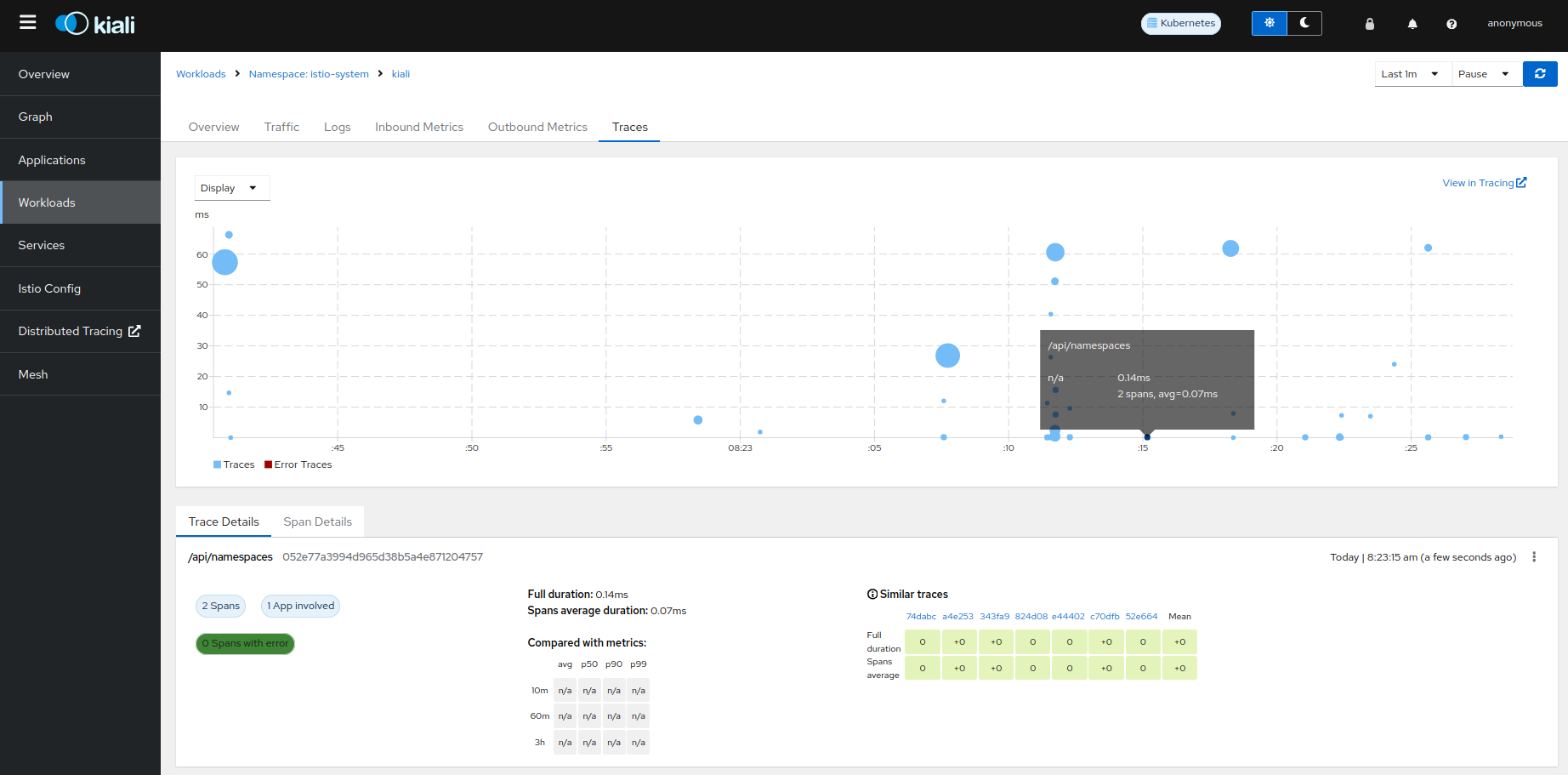

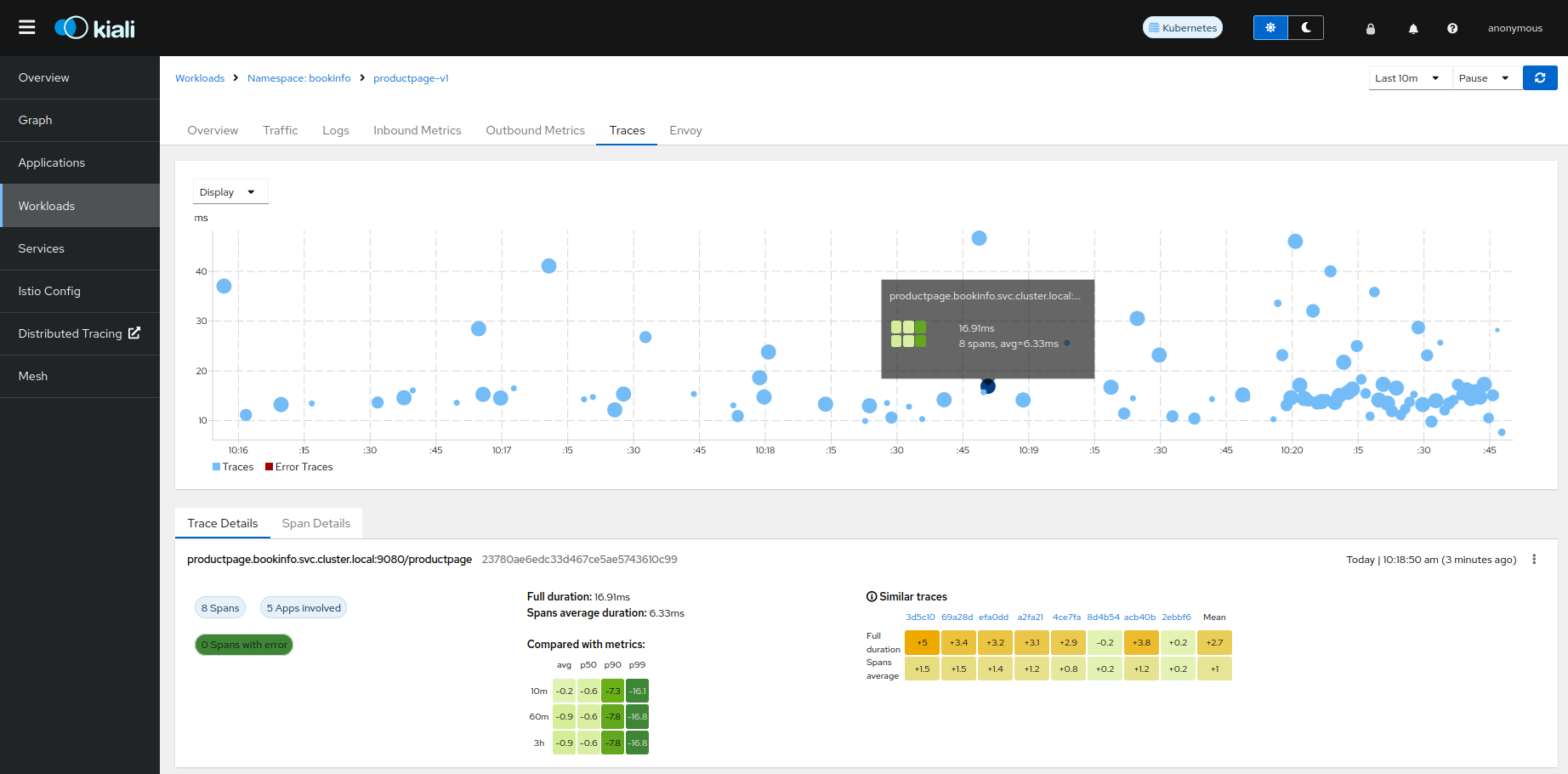

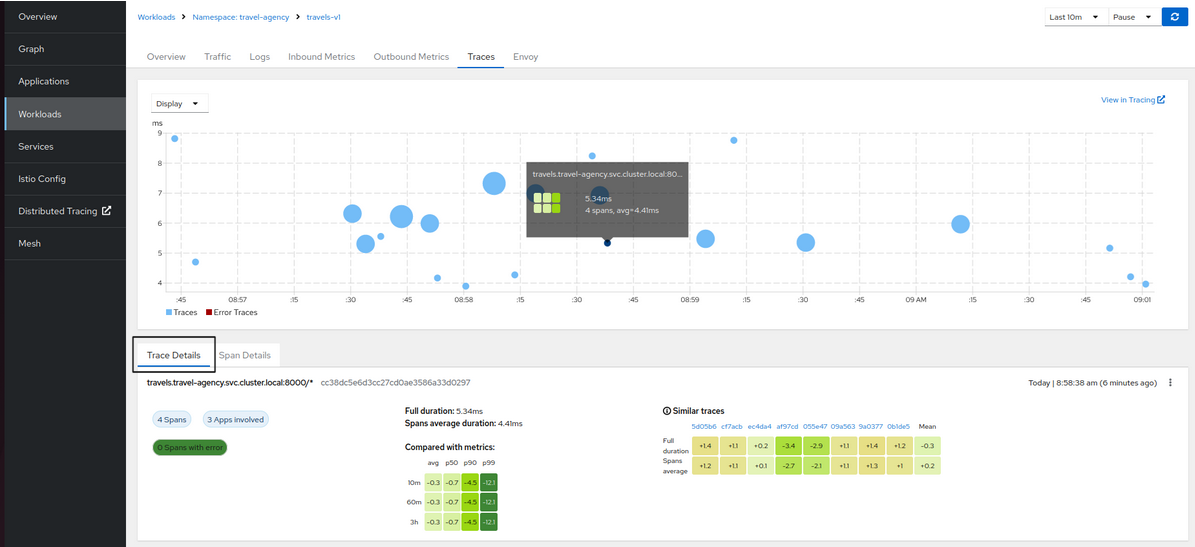

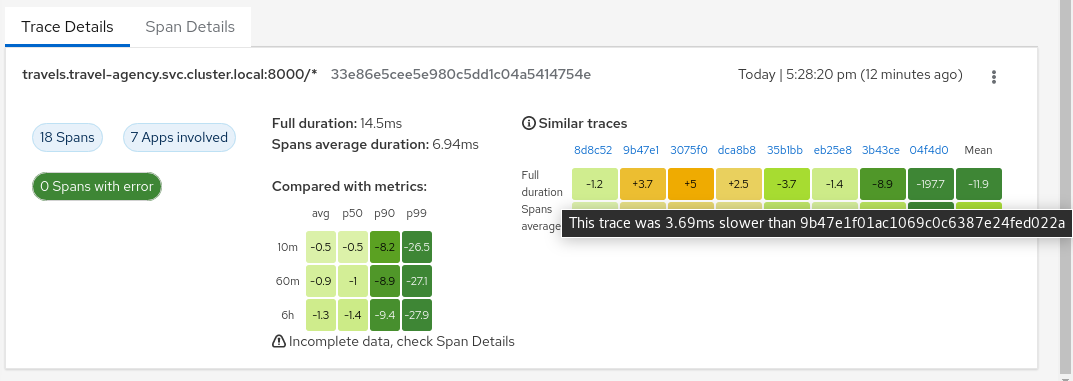

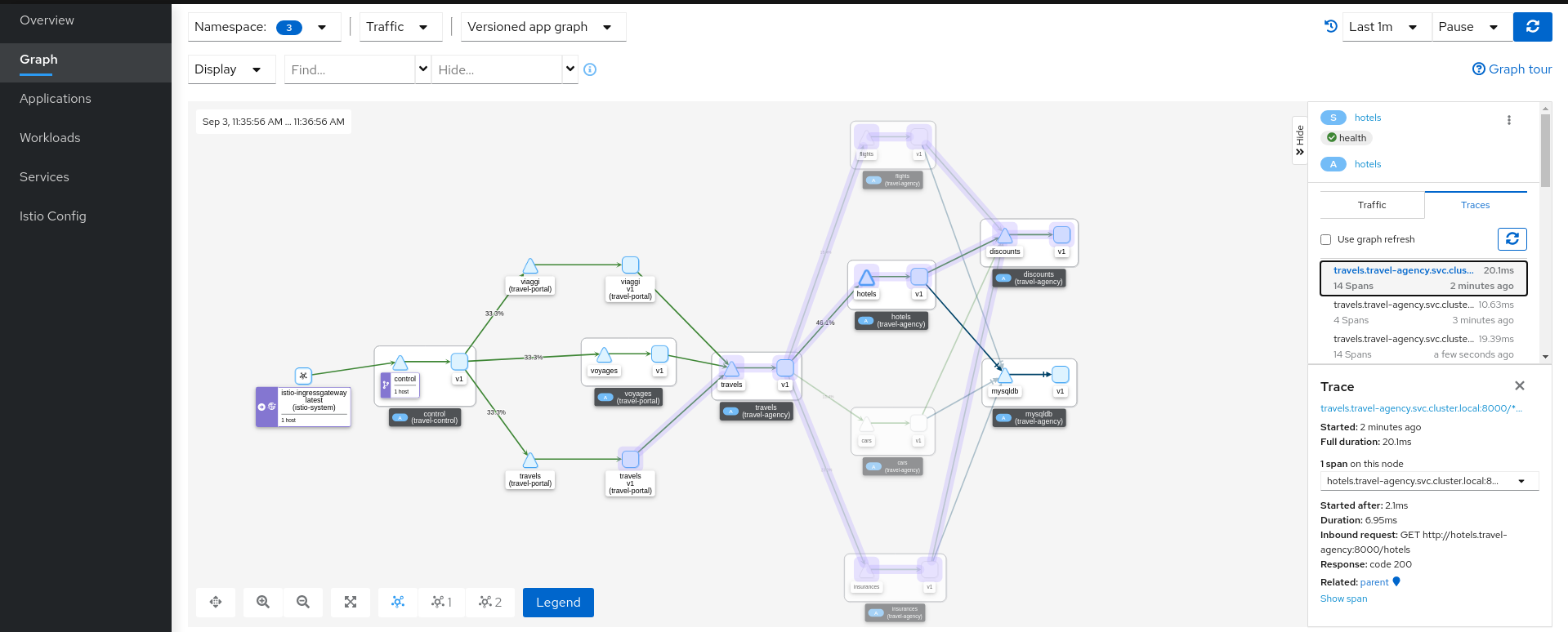

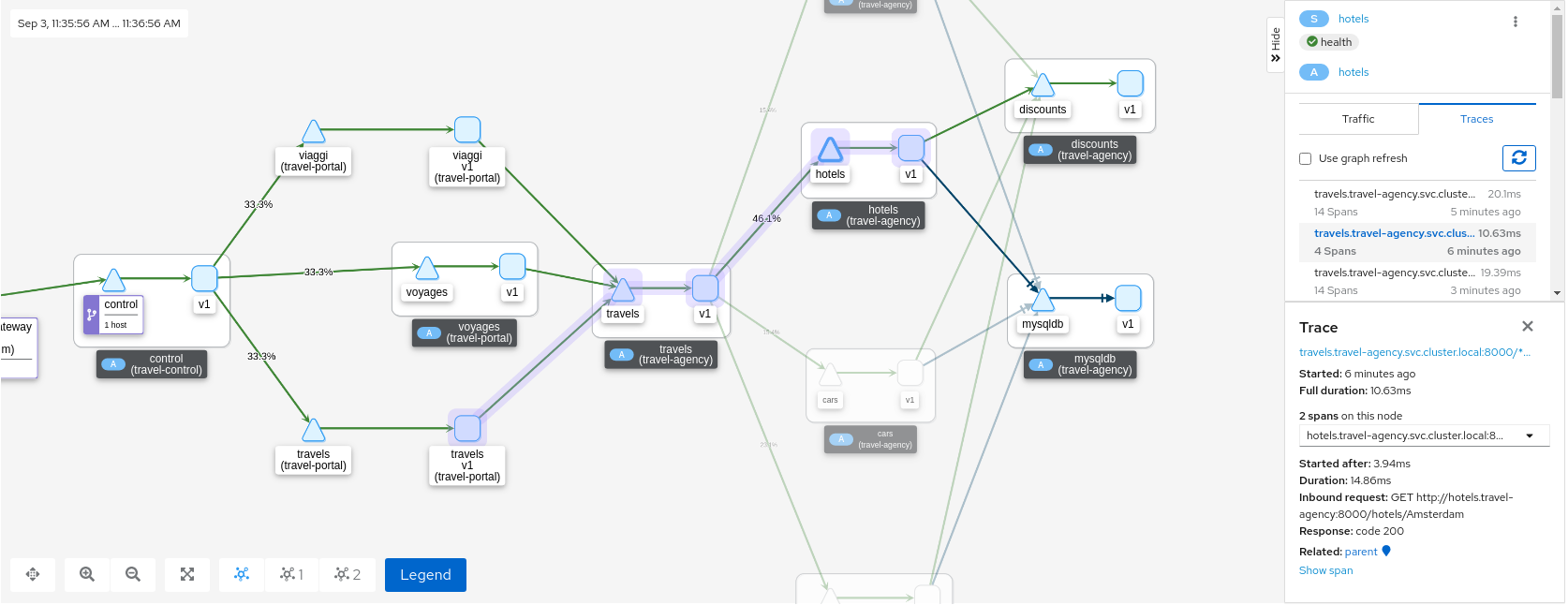

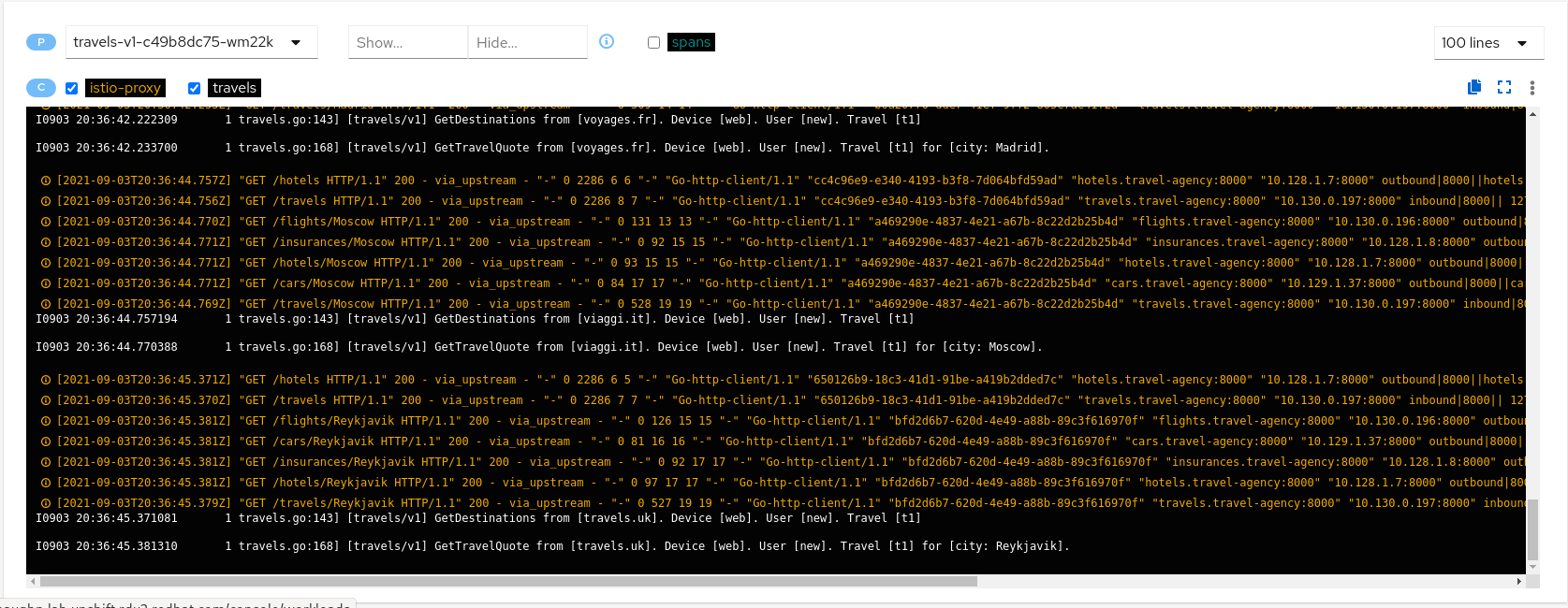

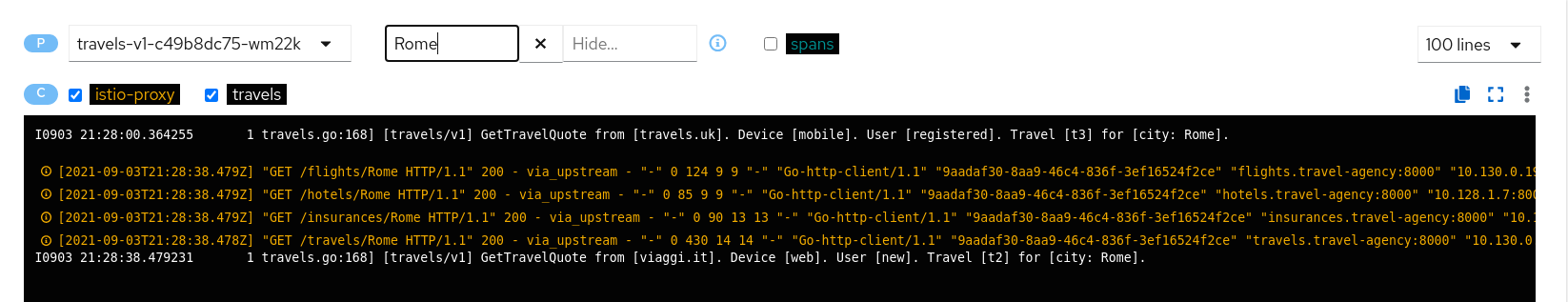

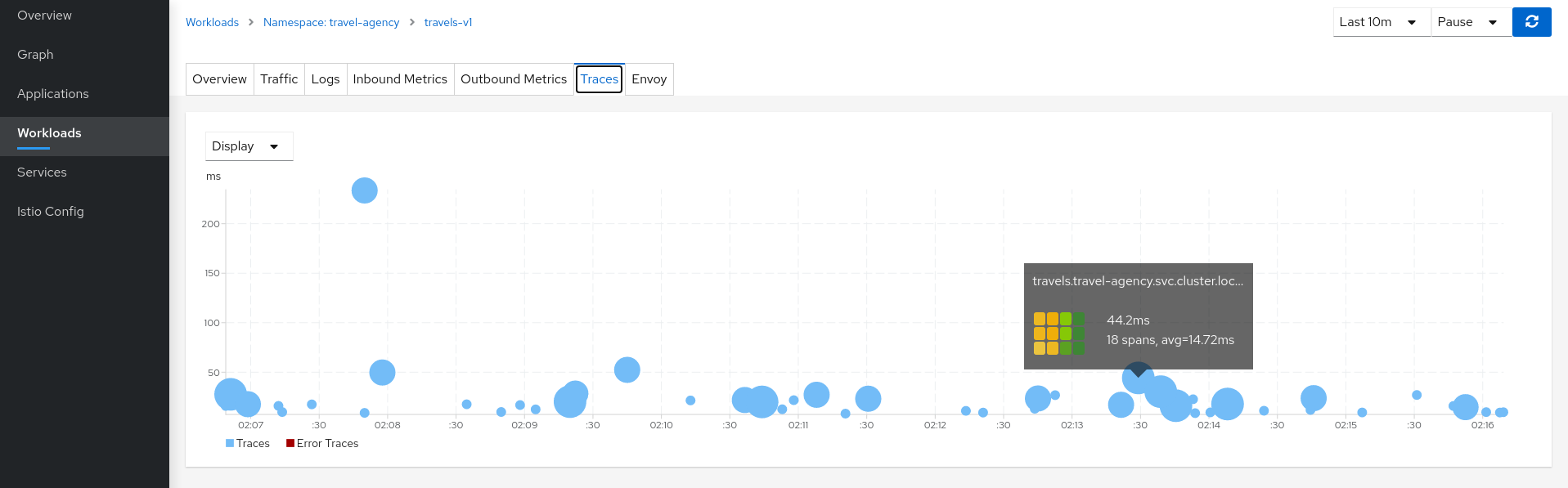

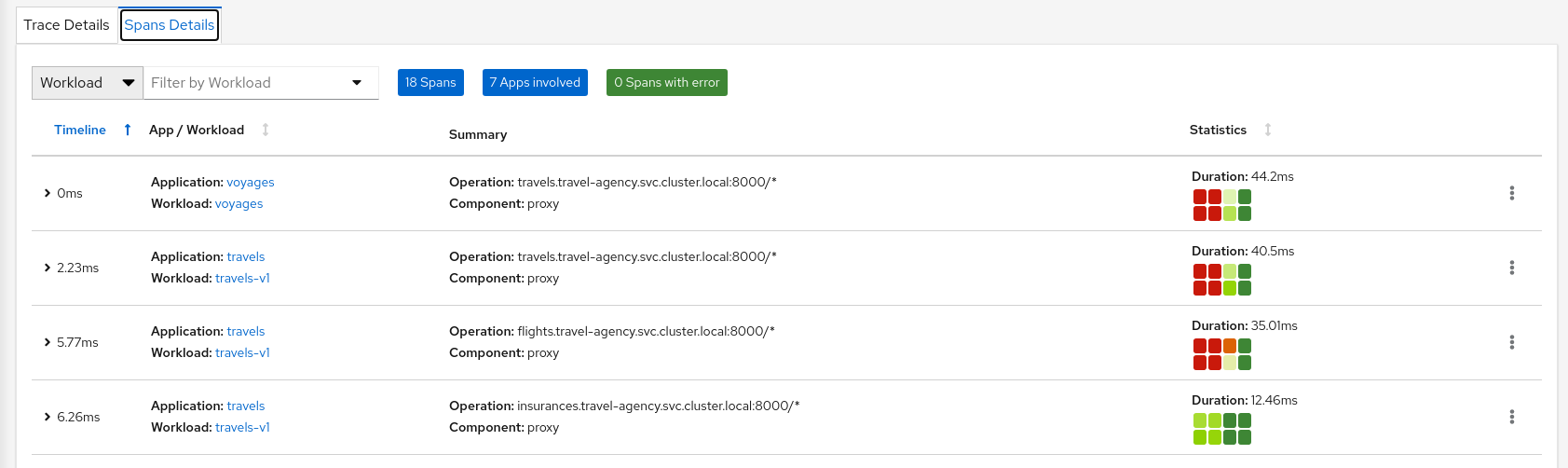

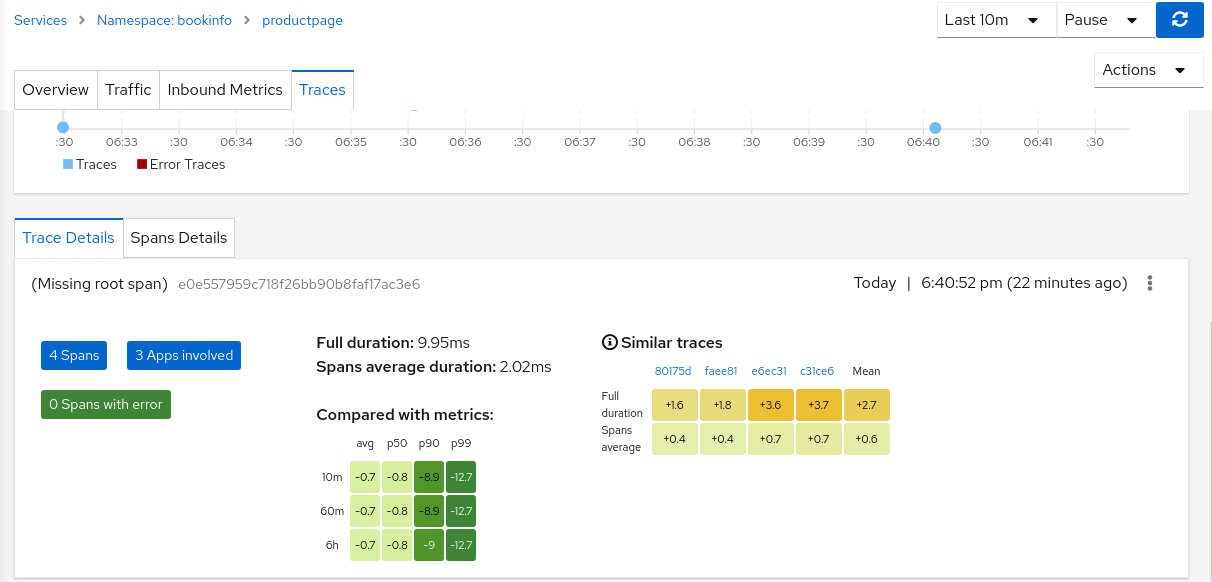

- 3.10: Tracing

- 3.11: Validation

- 4: OSSMC

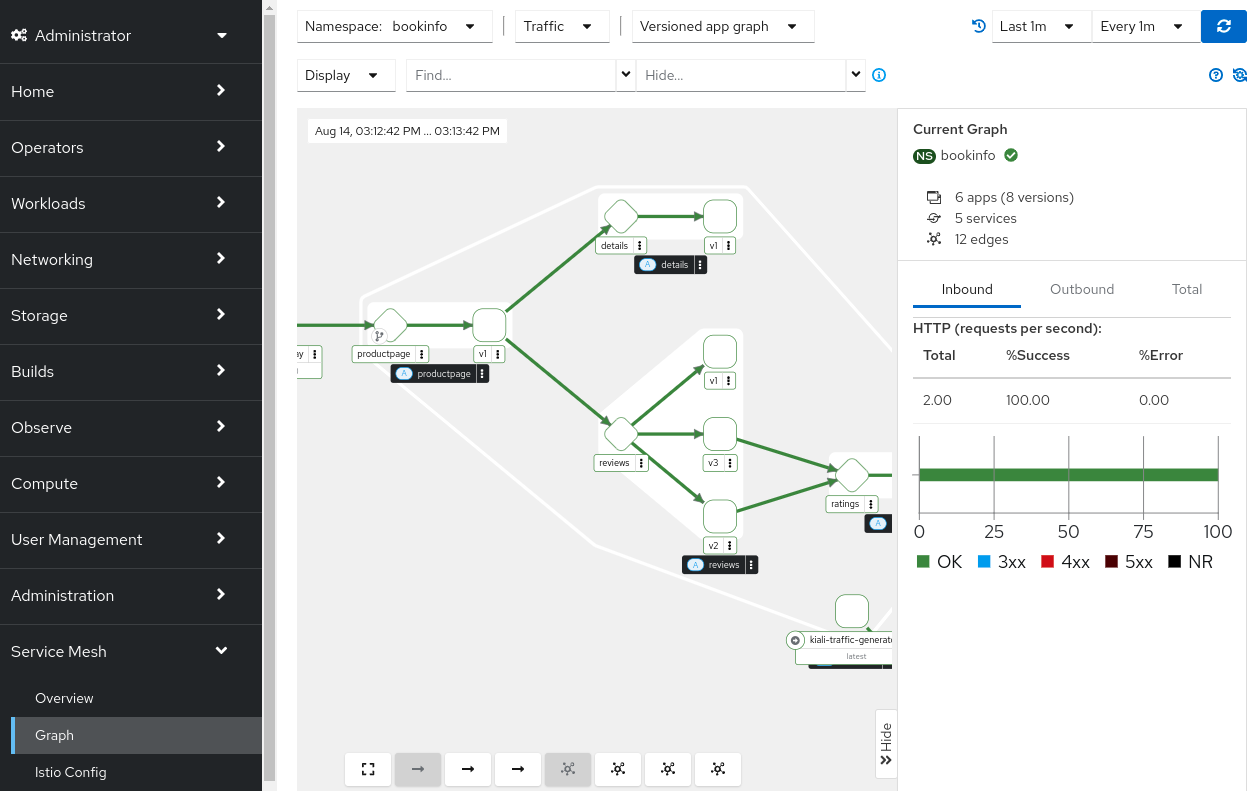

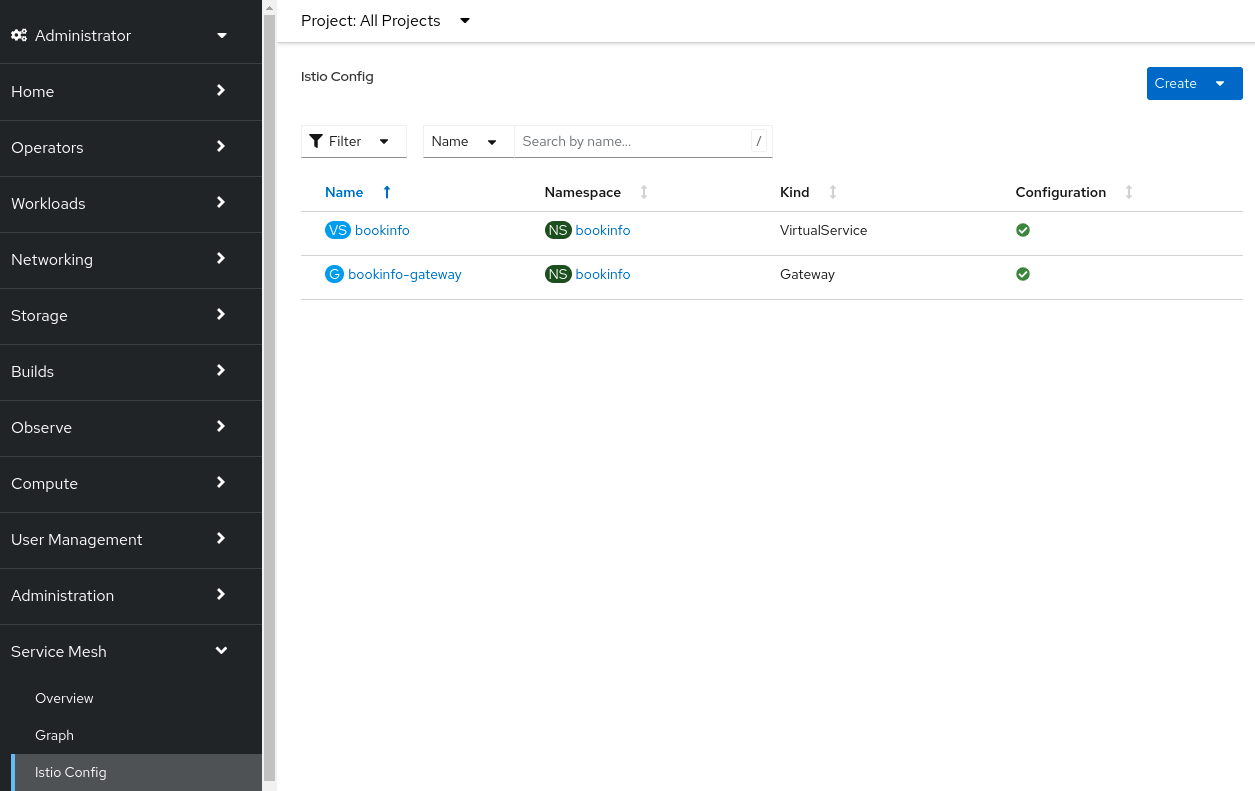

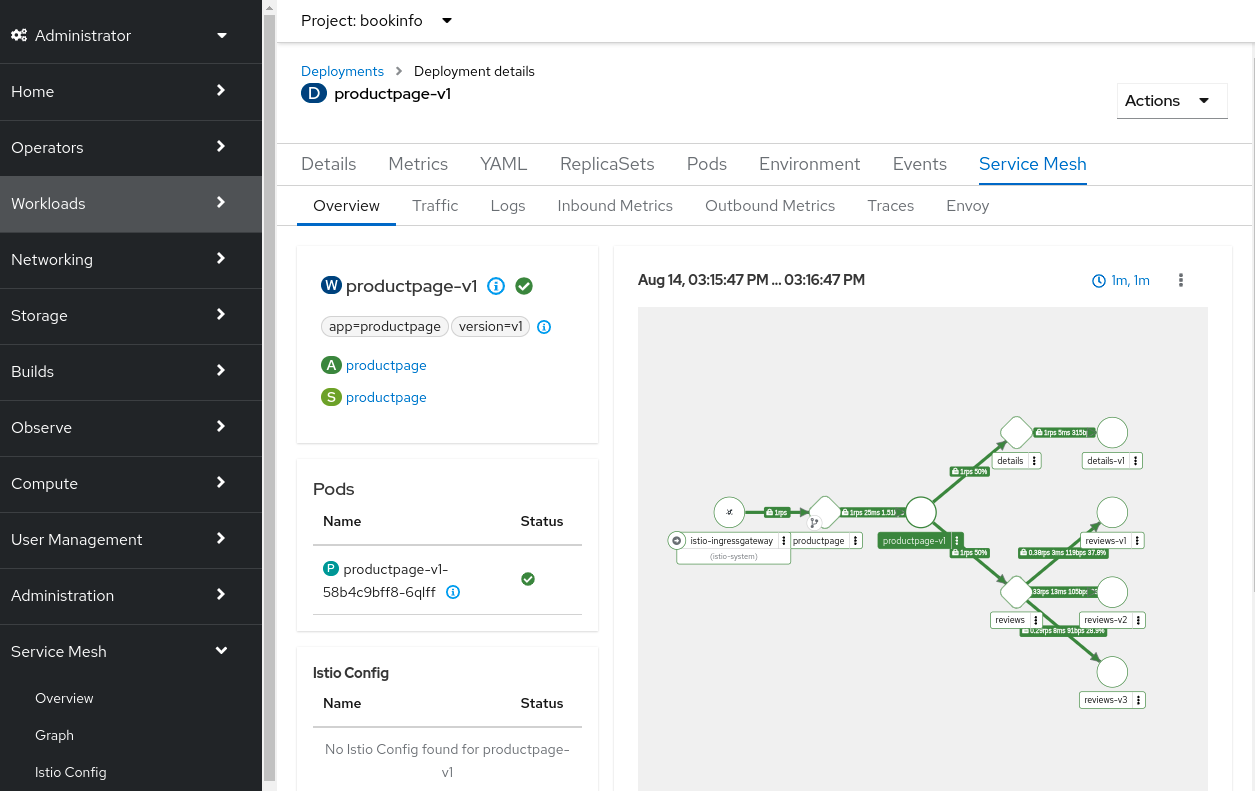

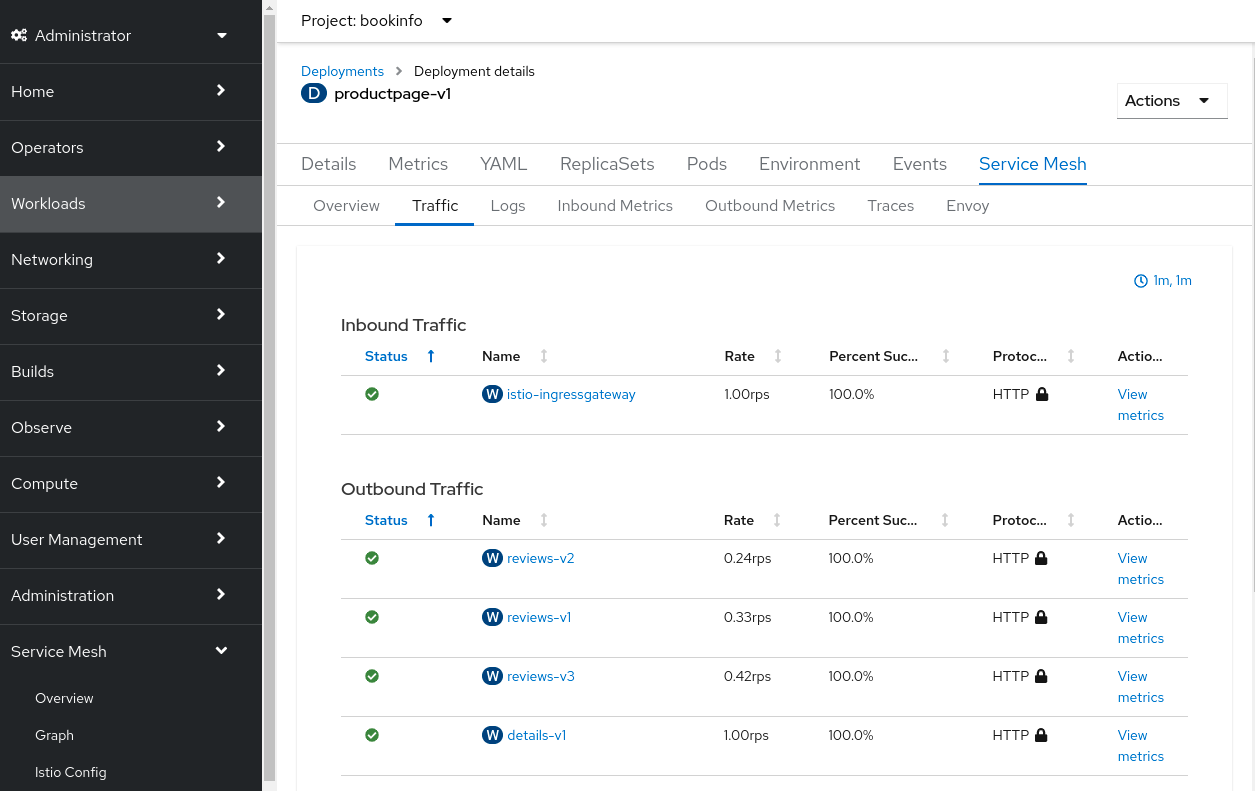

- 4.1: OSSMC User Guide

- 5: Tutorials

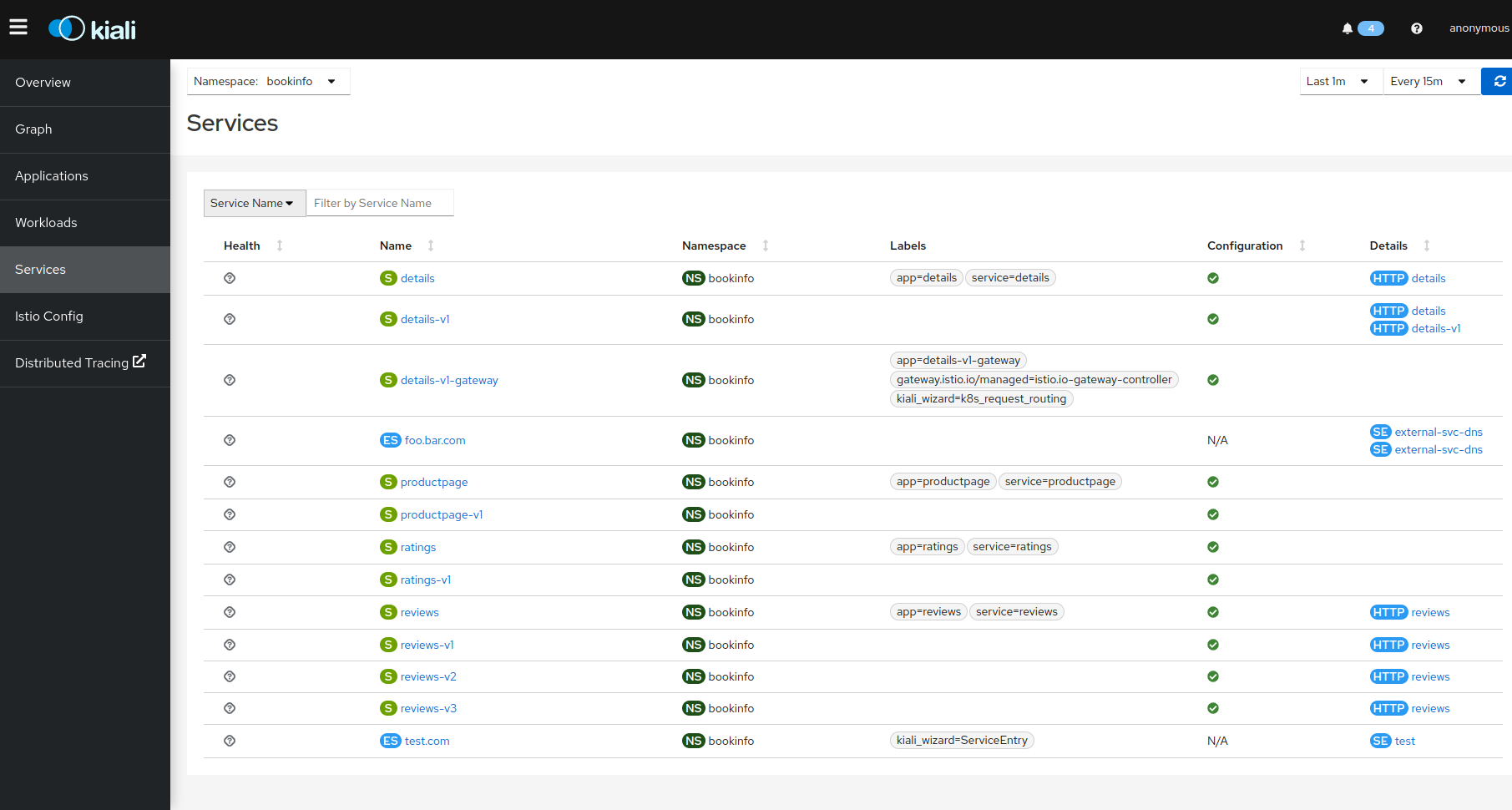

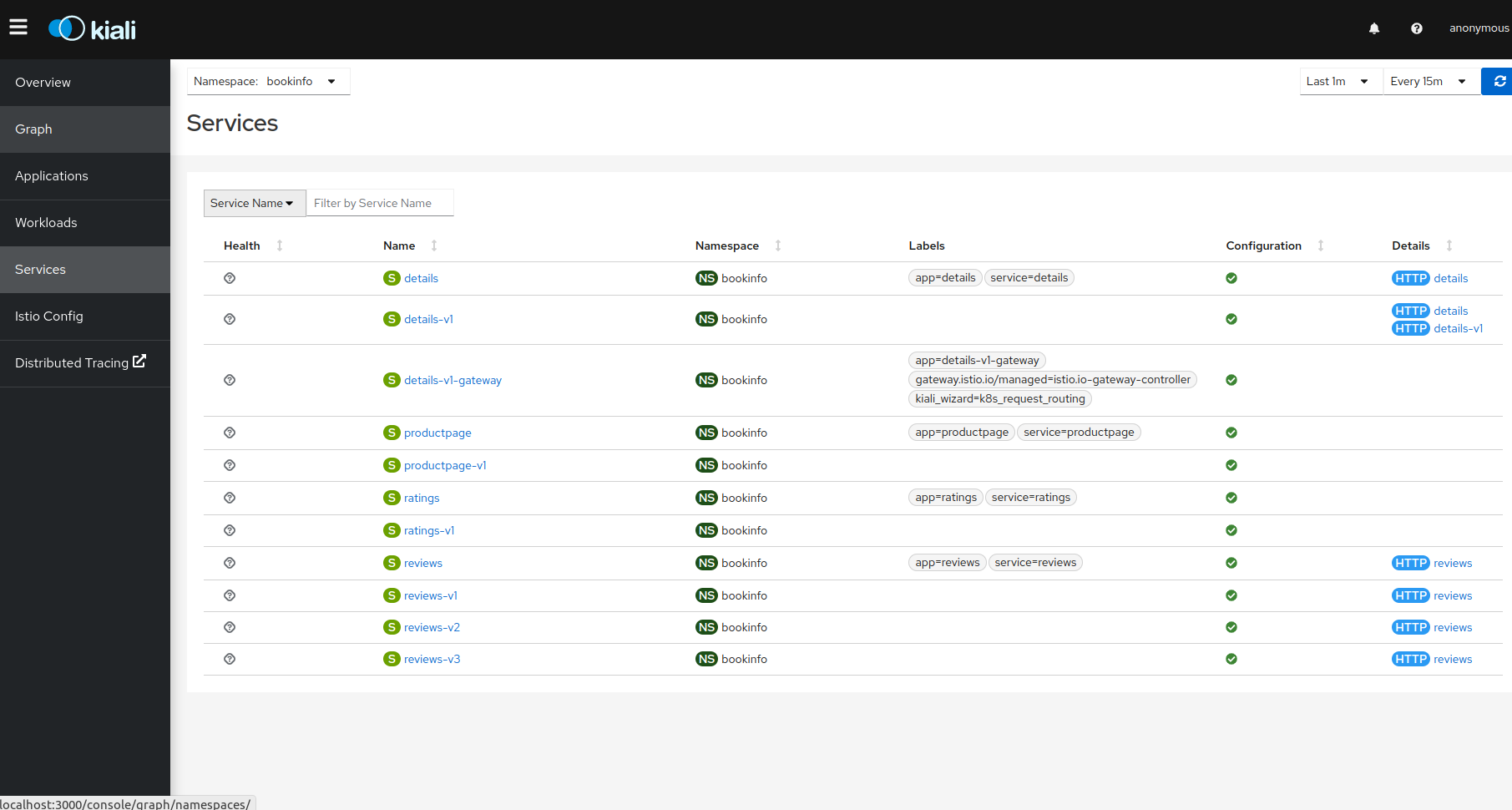

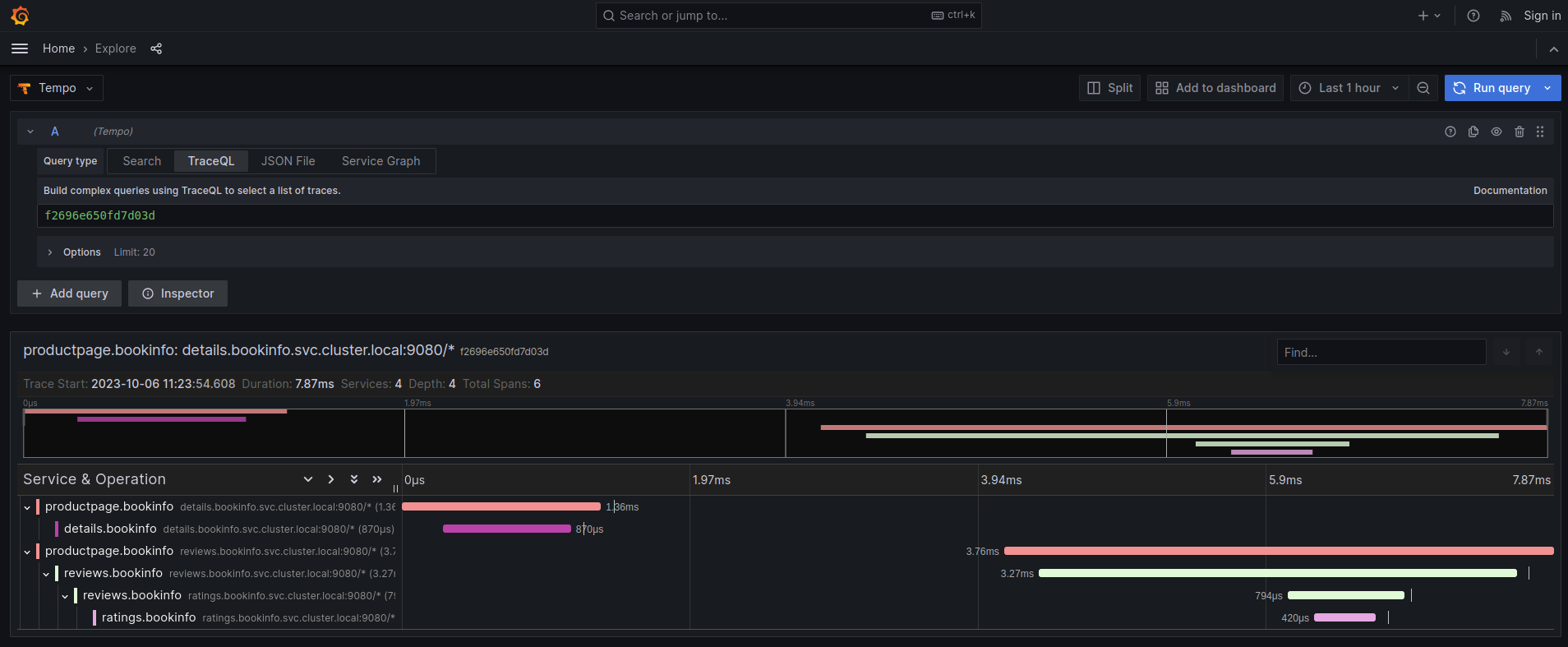

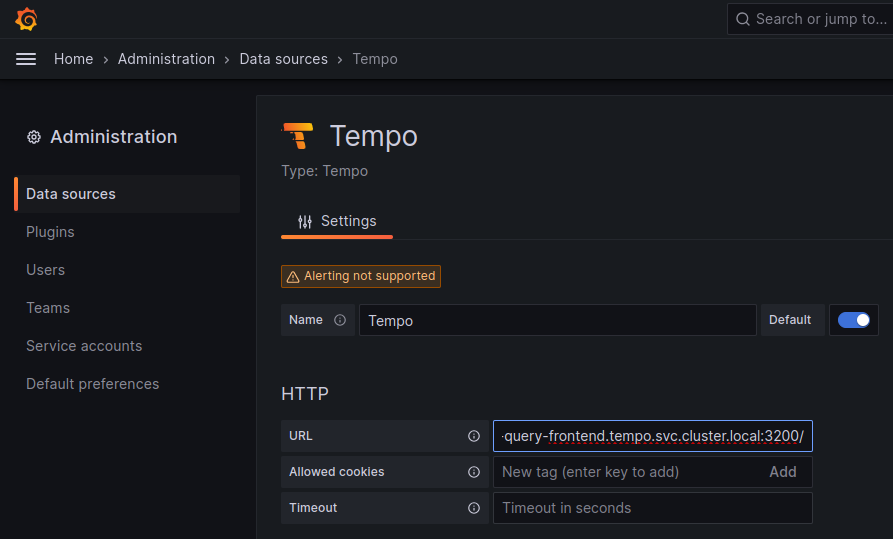

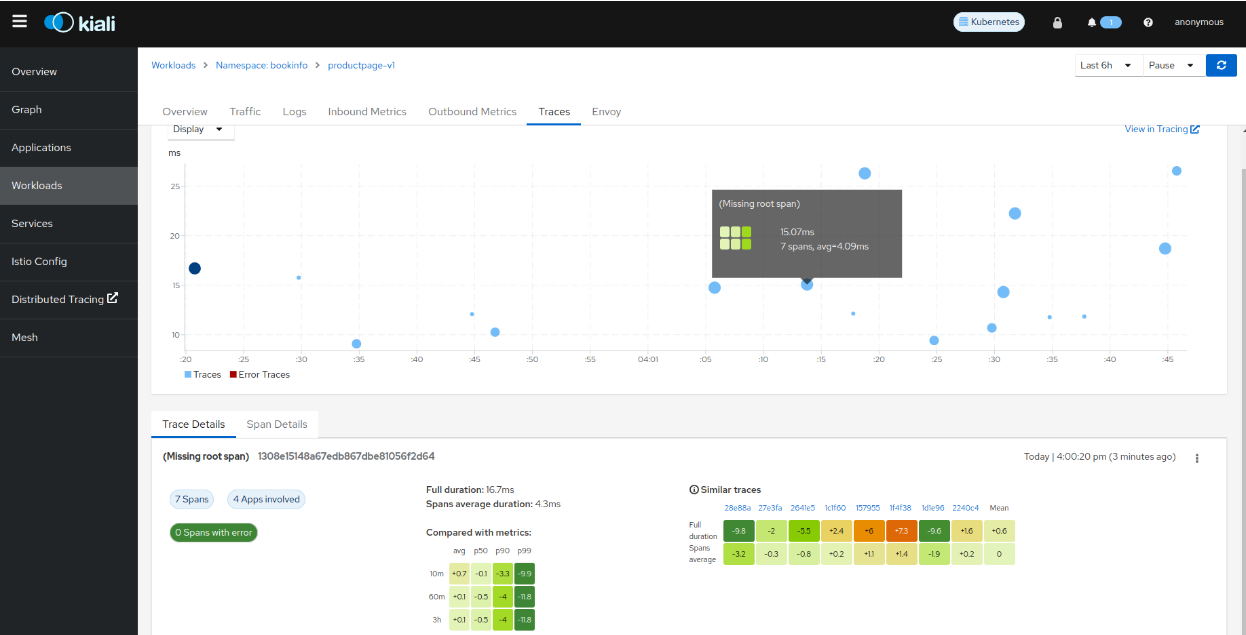

- 5.1: Kiali and Grafana Tempo Query integration

- 5.1.1: Introduction

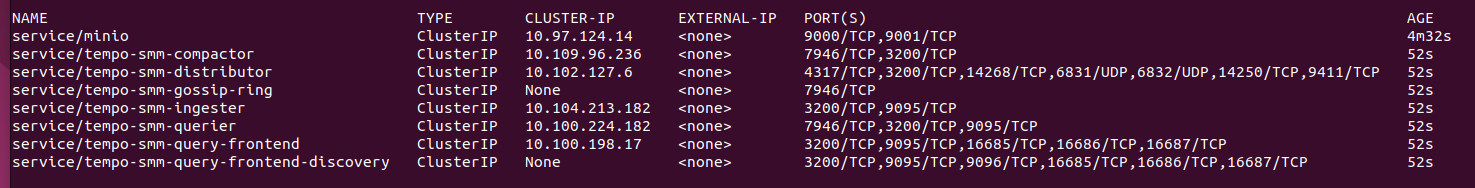

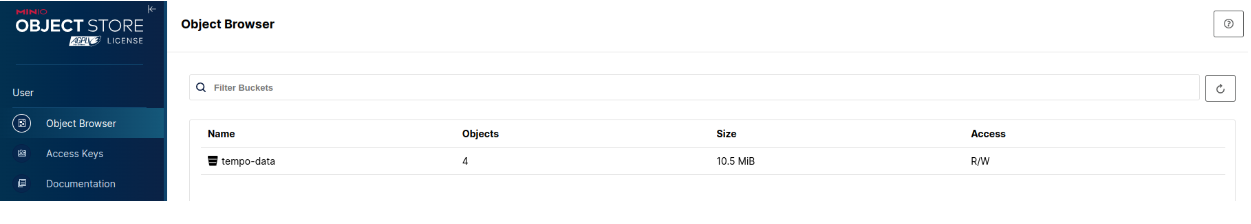

- 5.1.2: Kiali and Tempo setup

- 5.2: Travels Demo - Multicluster

- 5.2.1: Introduction

- 5.2.2: Prerequisites

- 5.2.3: Deploy East cluster

- 5.2.4: Install Istio on East cluster

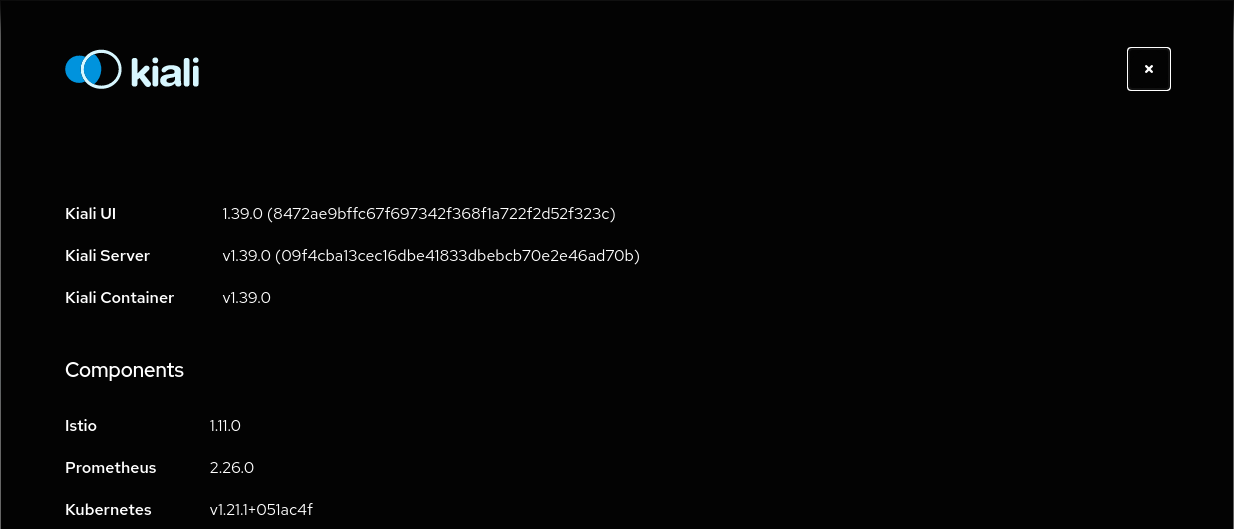

- 5.2.5: Install Kiali

- 5.2.6: Install Travels on East cluster

- 5.2.7: Deploy West cluster

- 5.2.8: Install Istio on West cluster

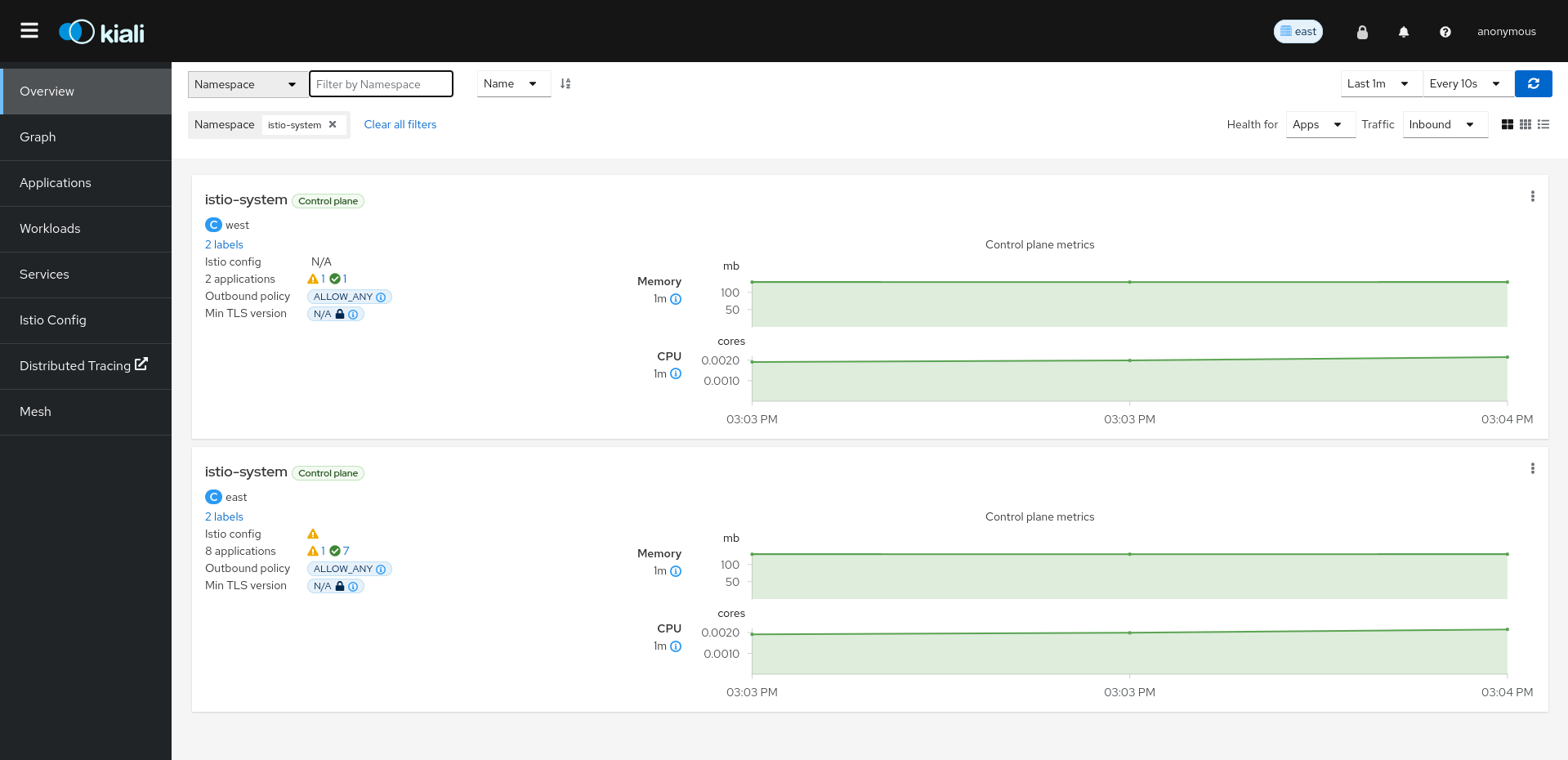

- 5.2.9: Configure Kiali for multicluster

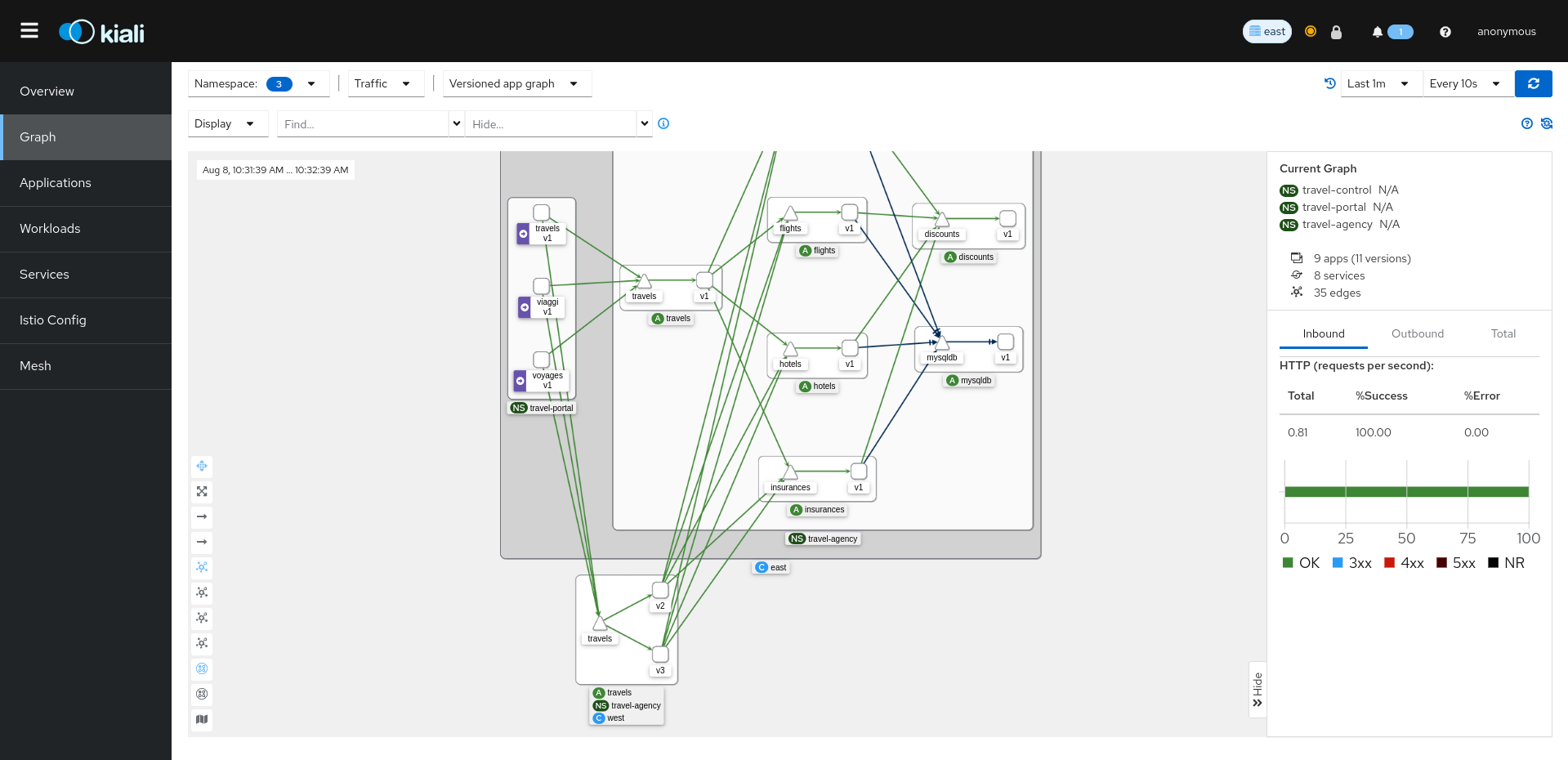

- 5.2.10: Install Travels on West cluster

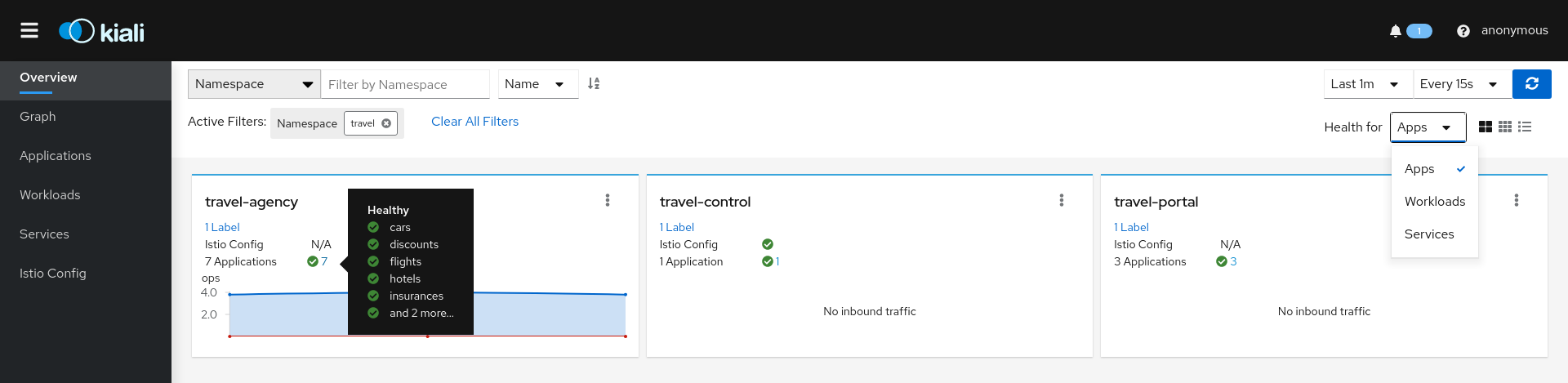

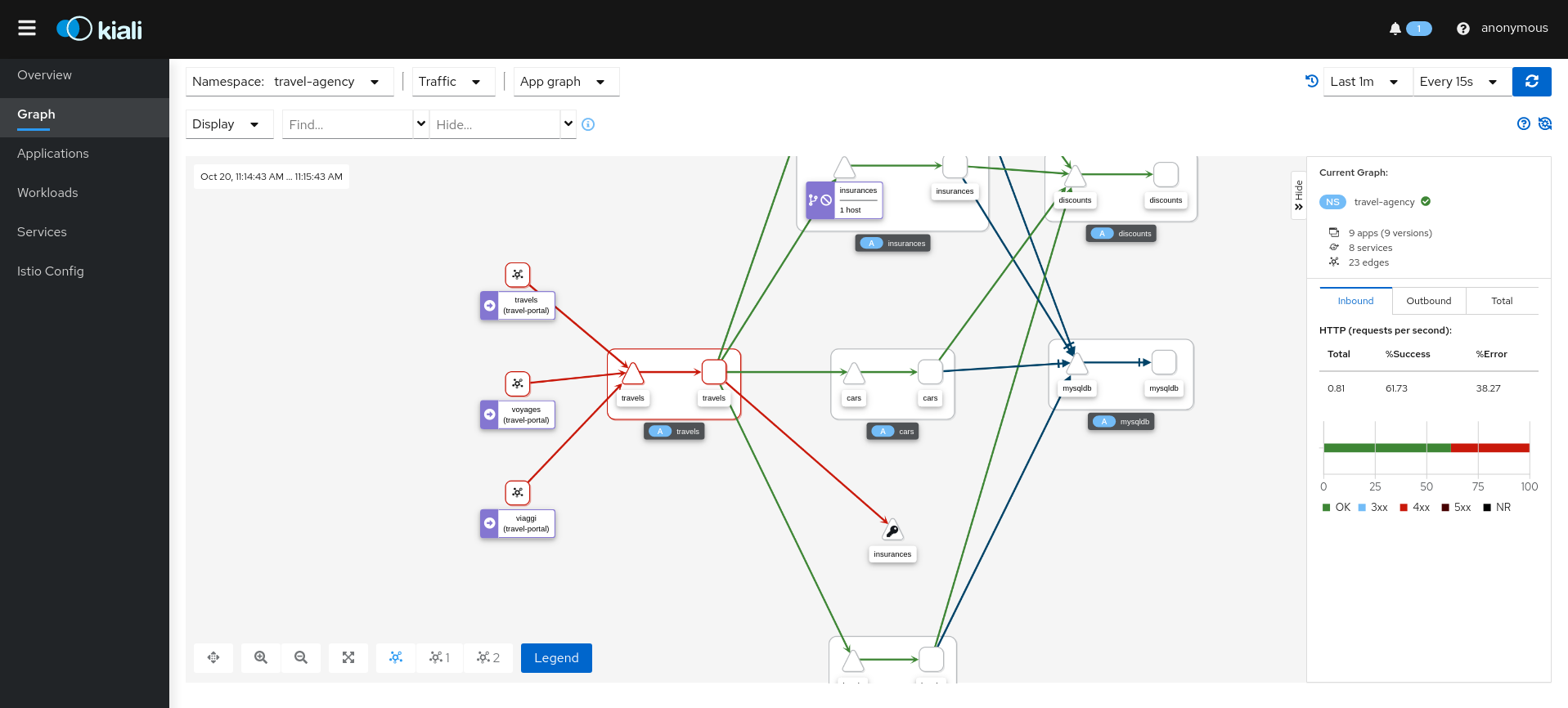

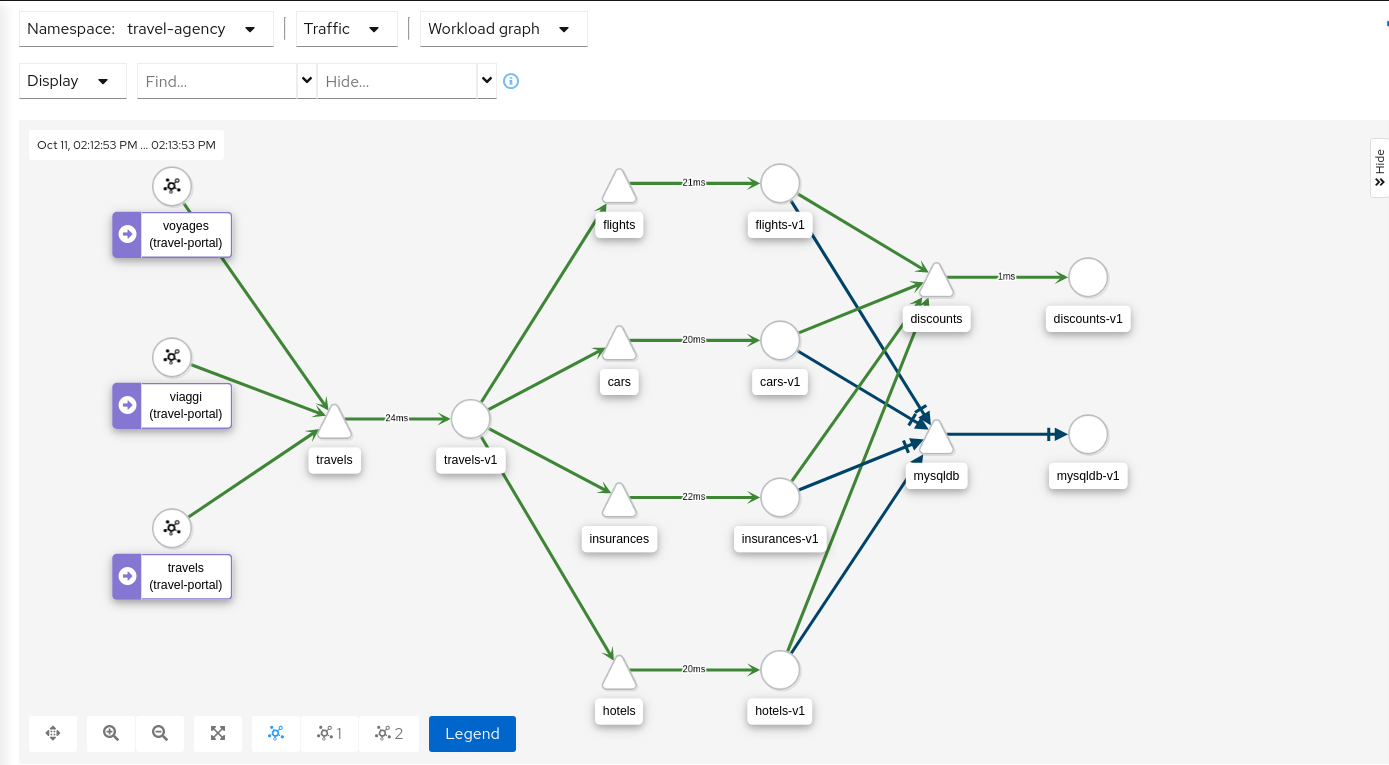

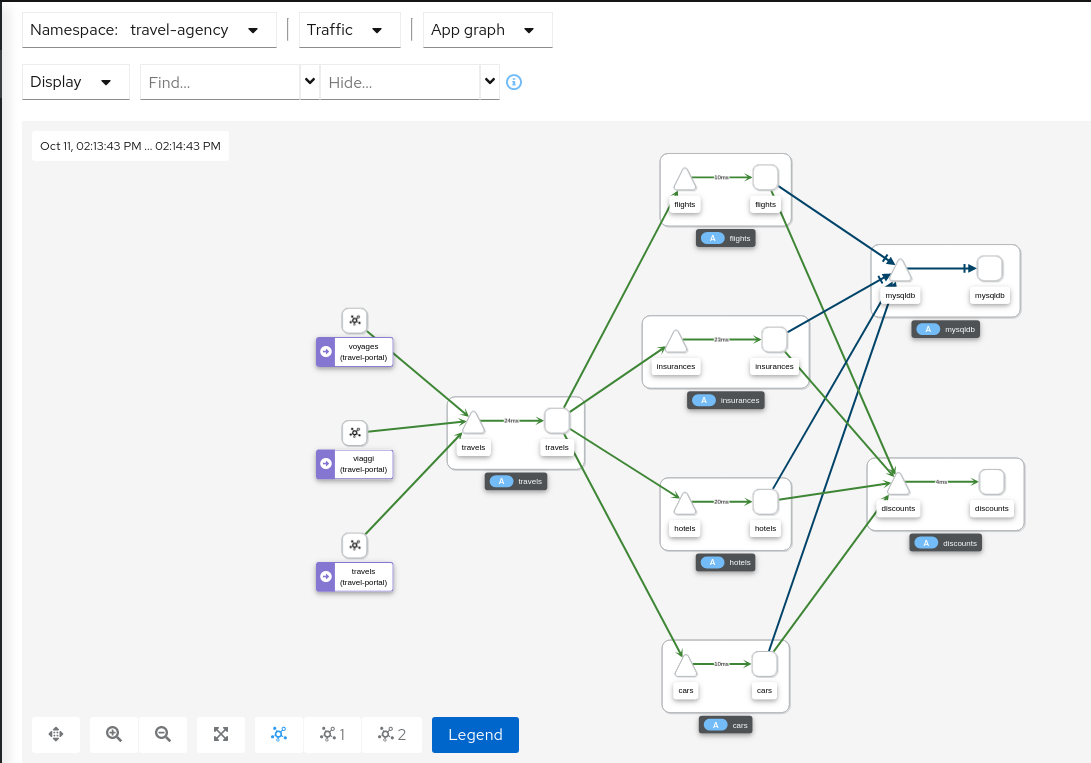

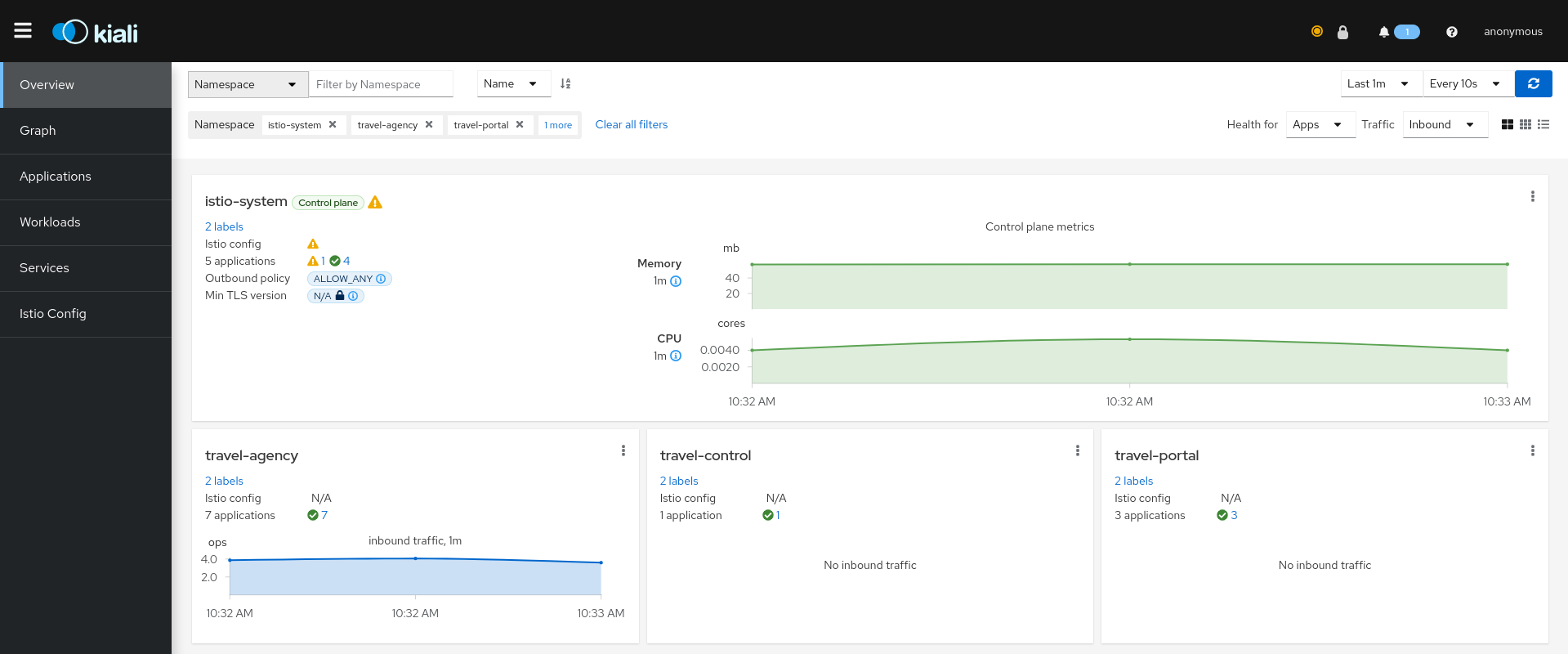

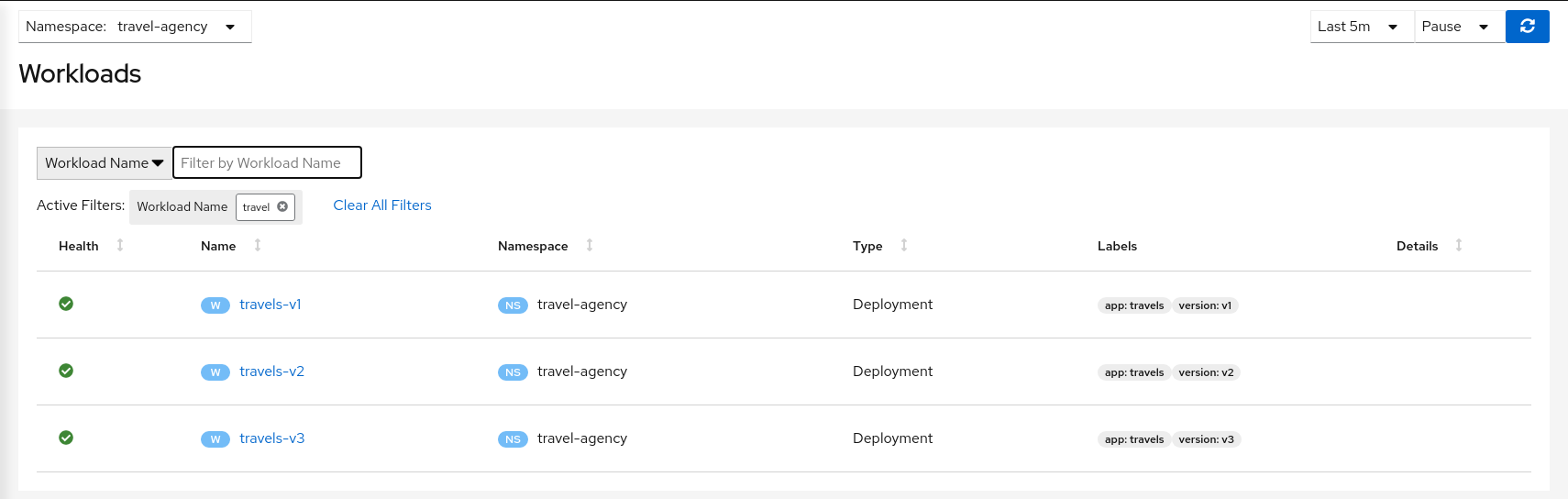

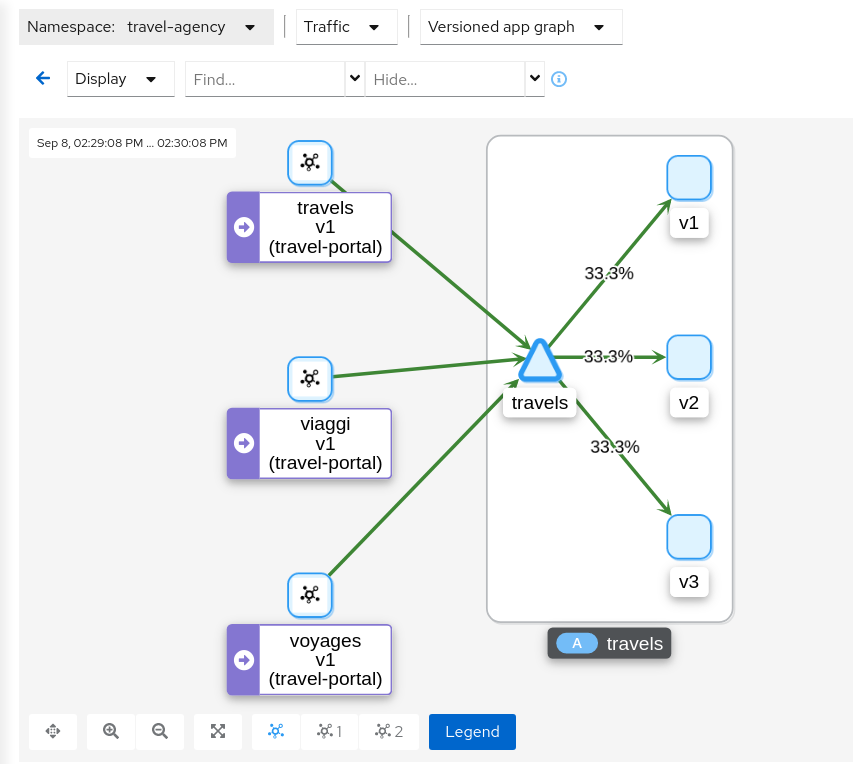

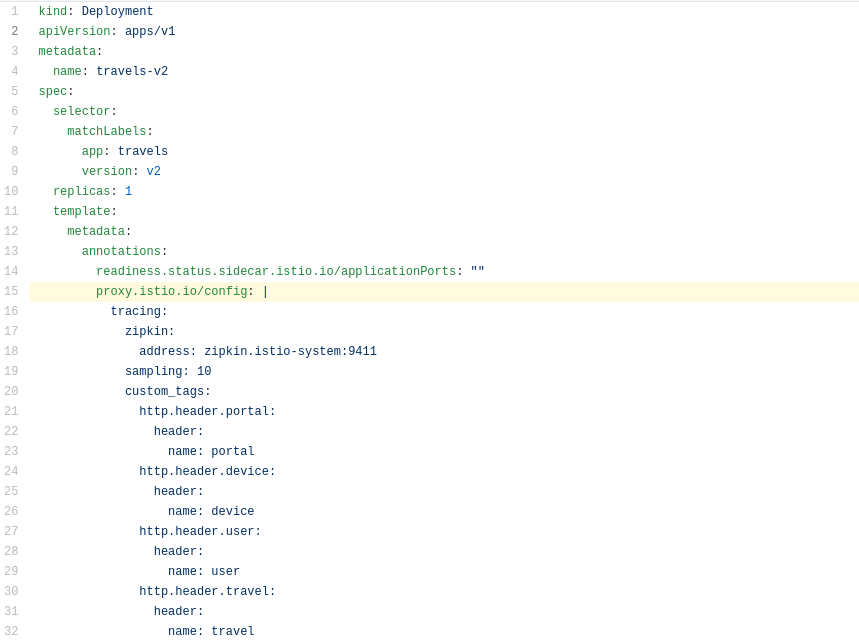

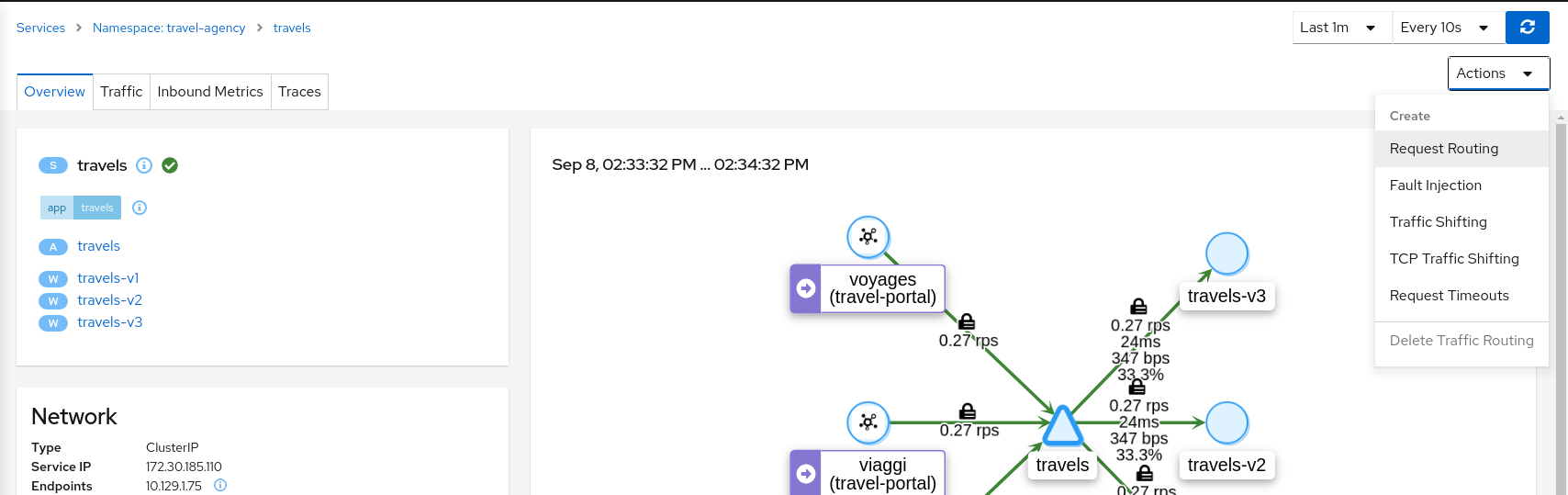

- 5.3: Travels Demo Tutorial

- 5.3.1: Prerequisites

- 5.3.2: Install Travel Demo

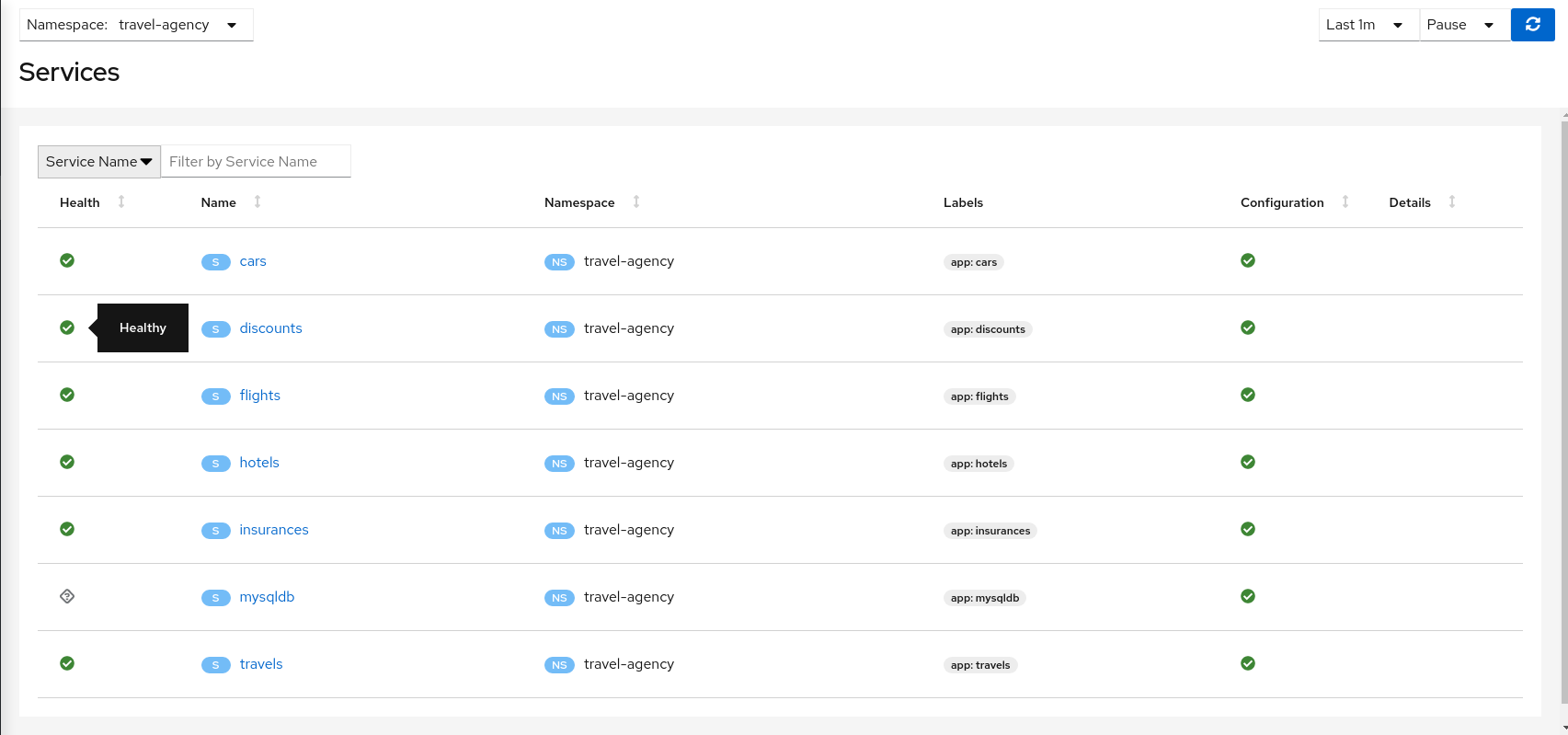

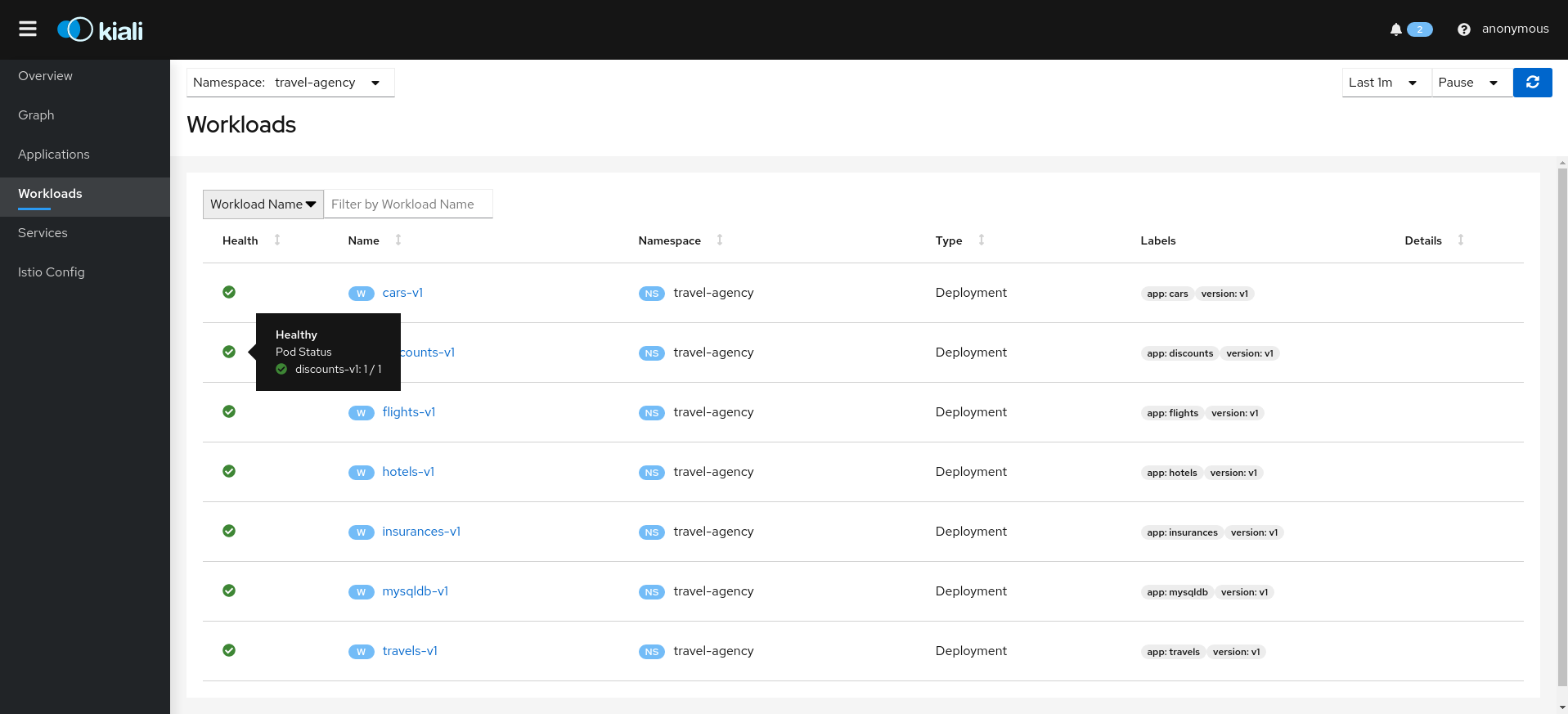

- 5.3.3: First Steps

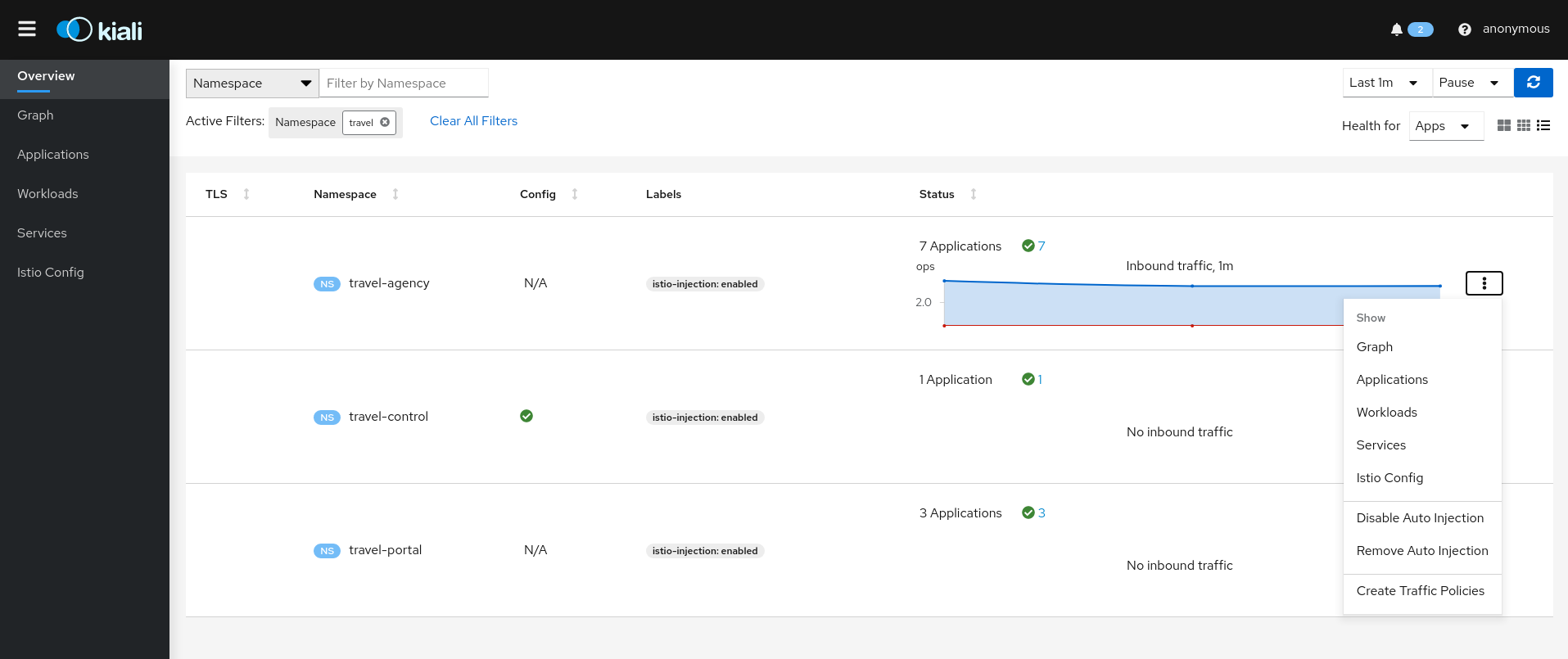

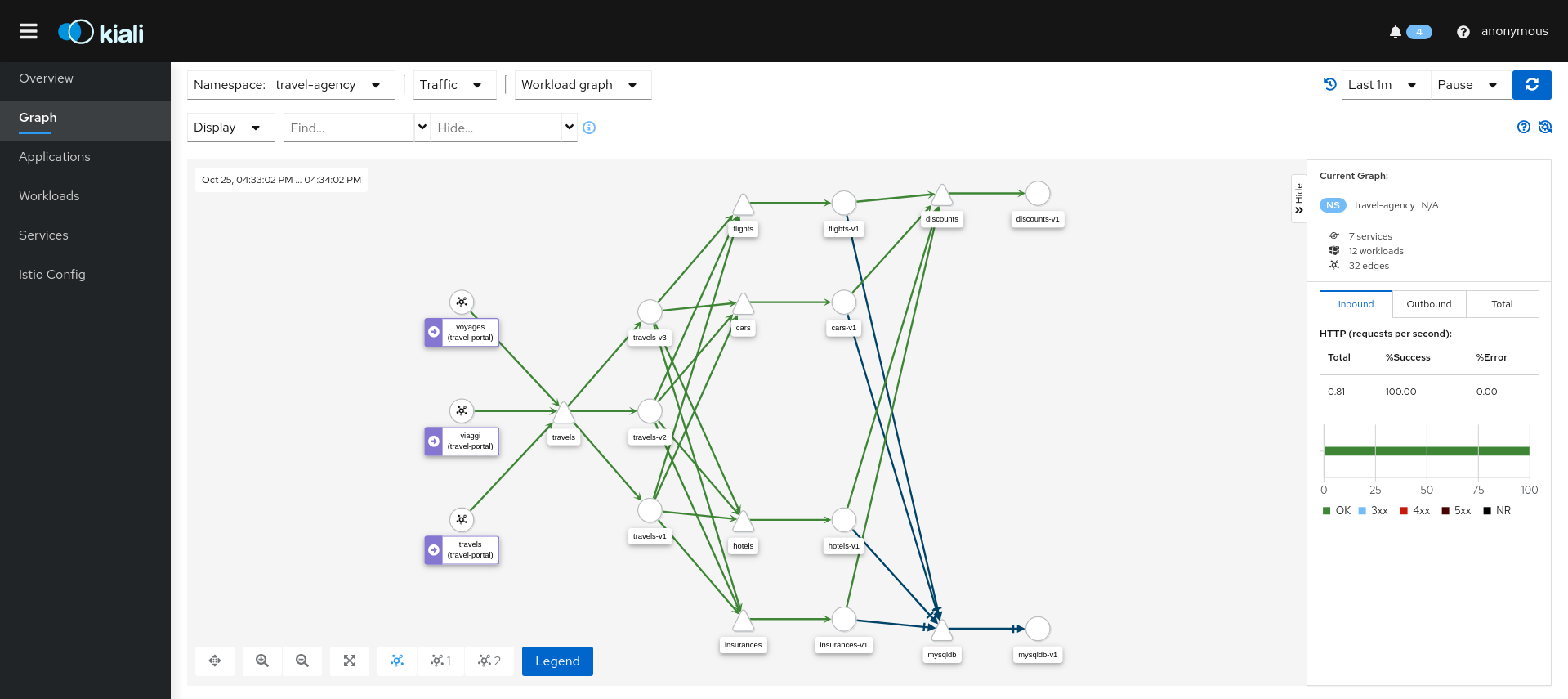

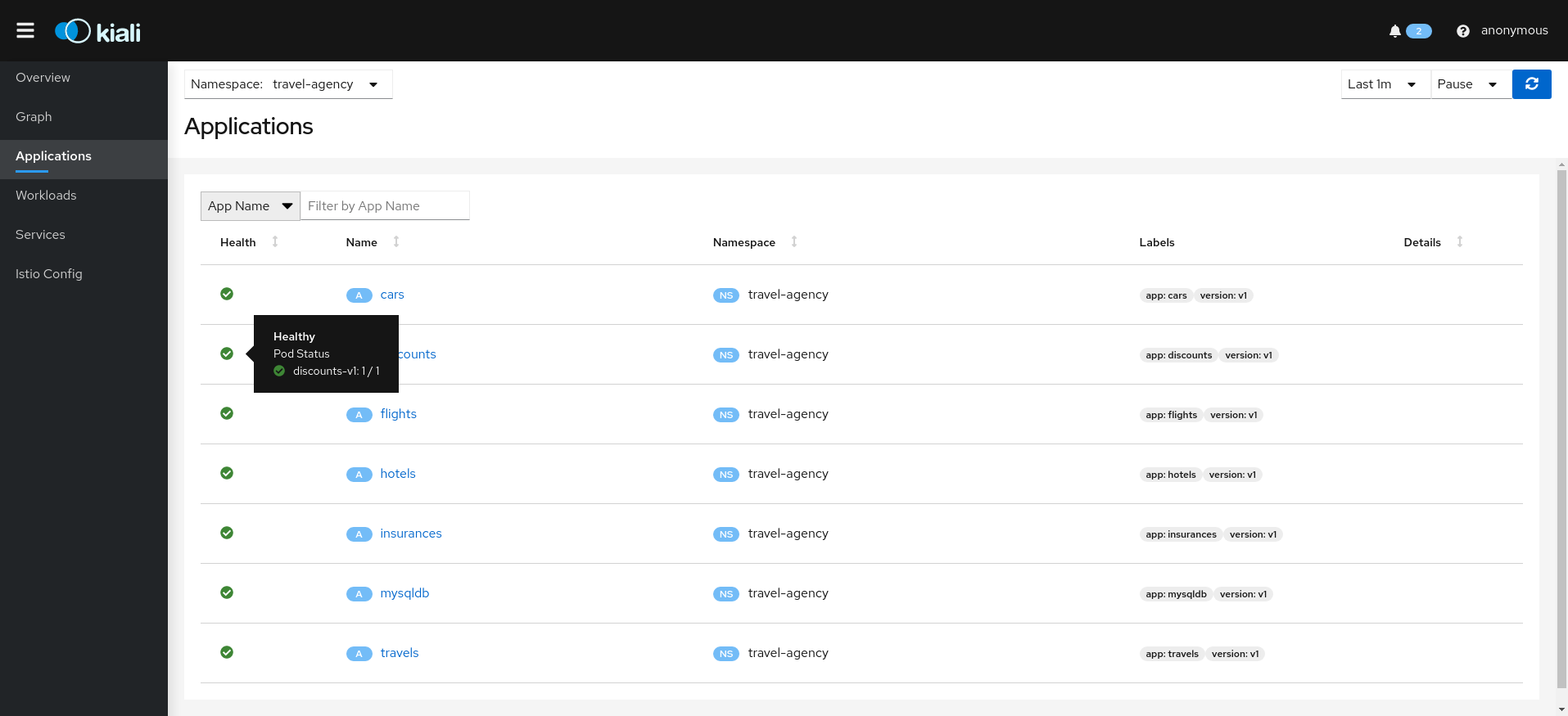

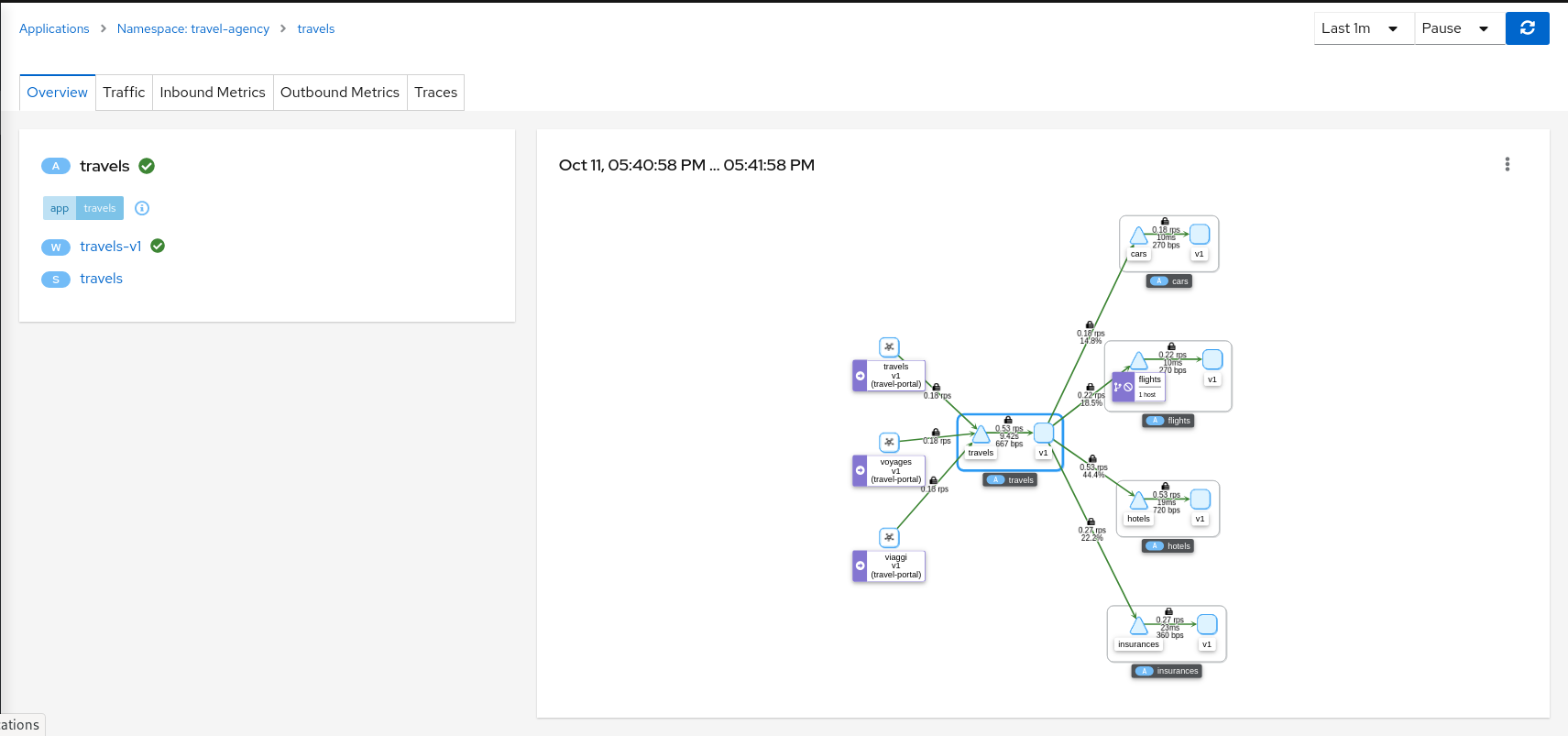

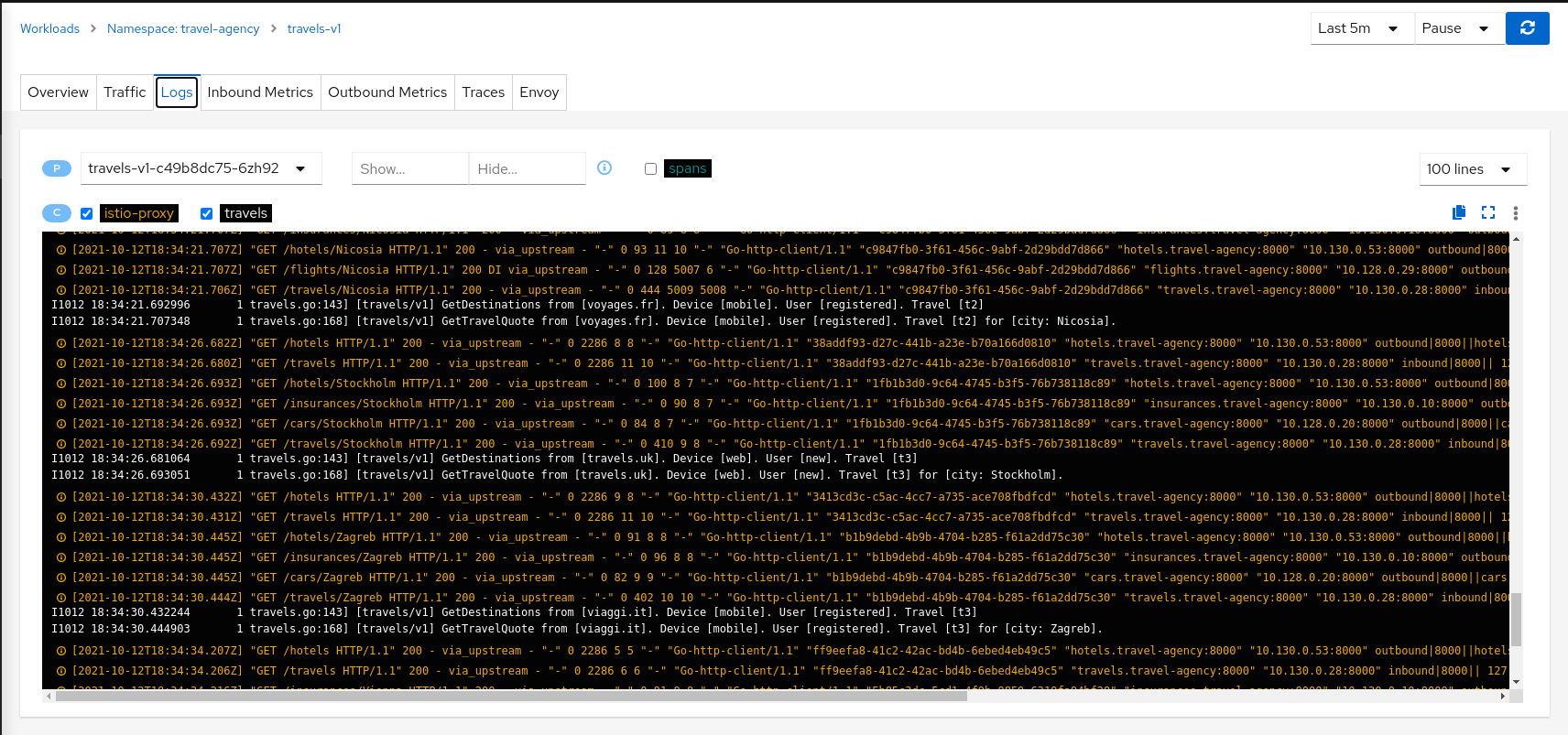

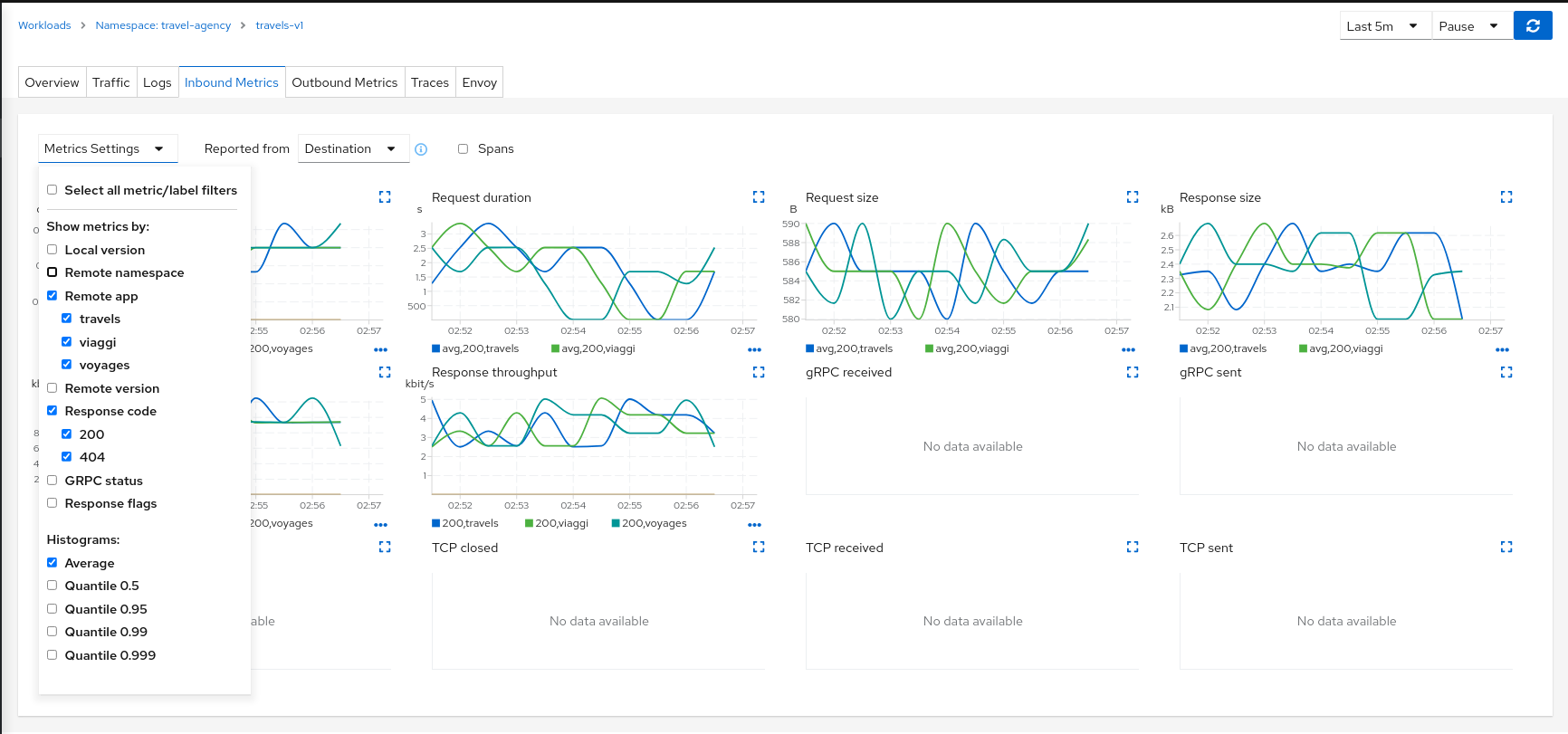

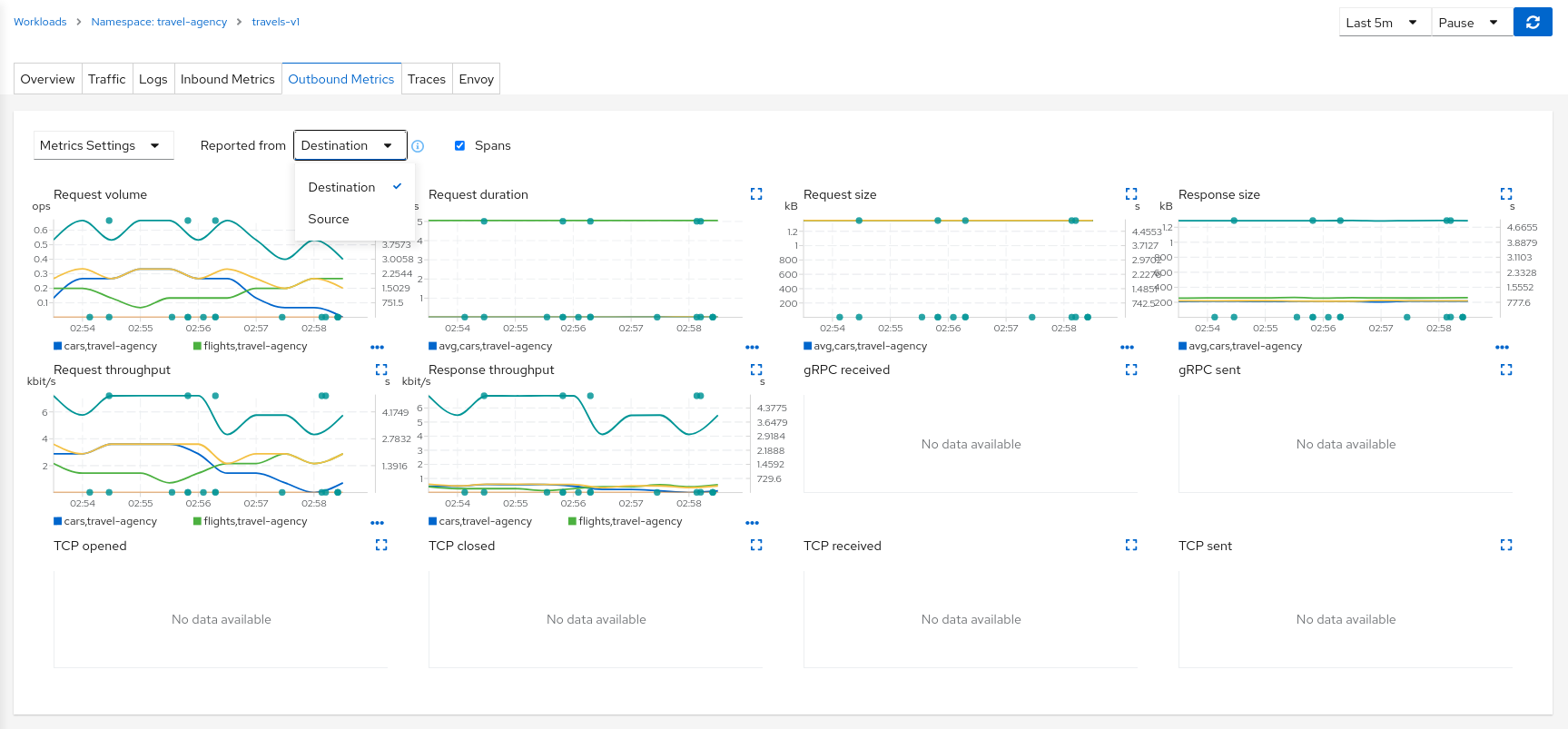

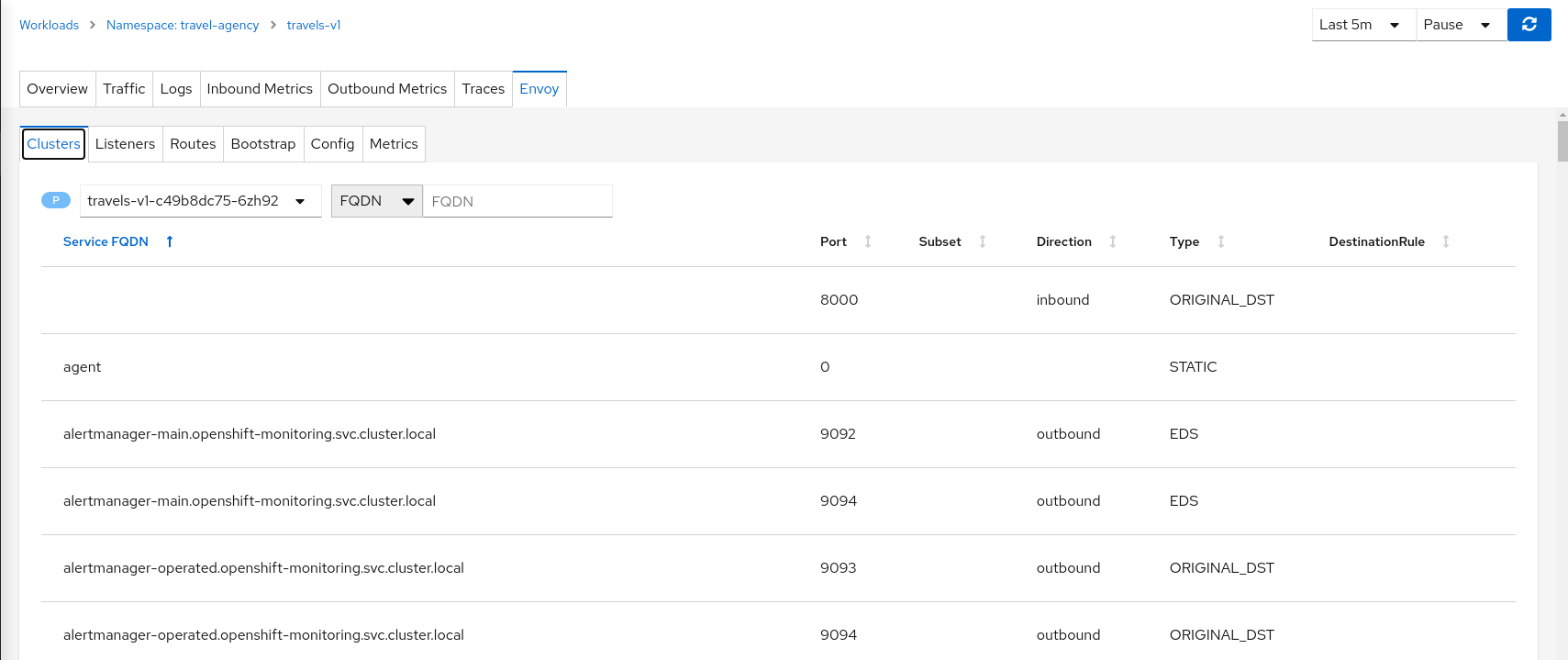

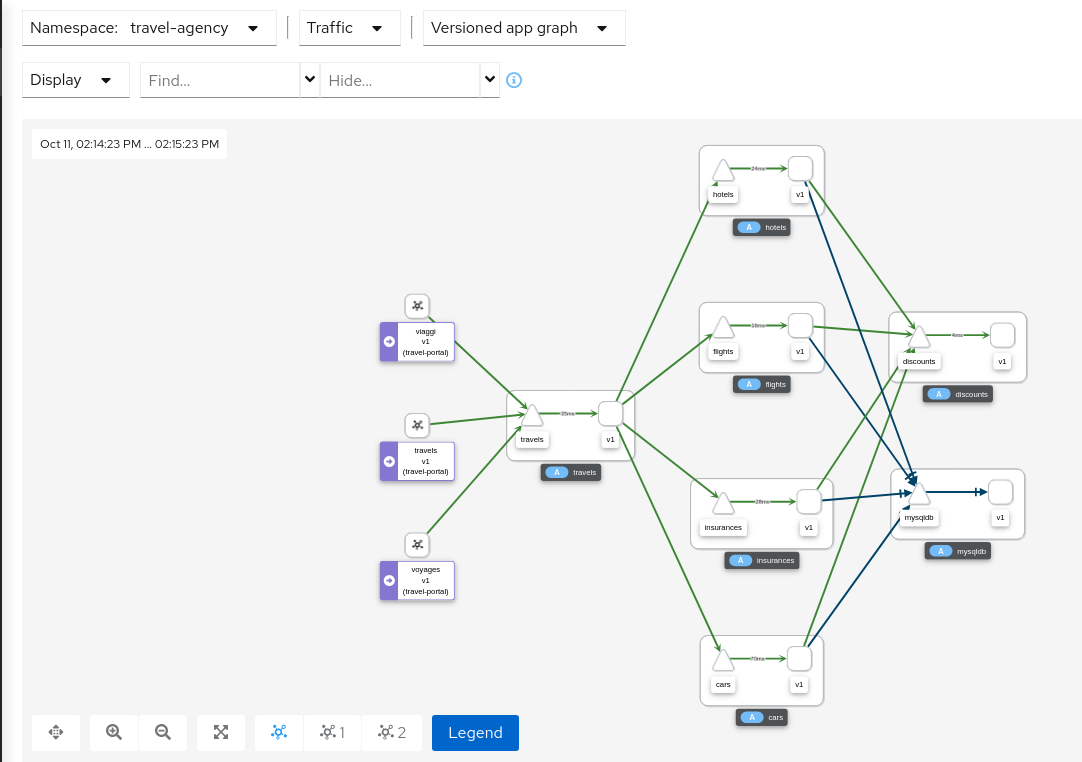

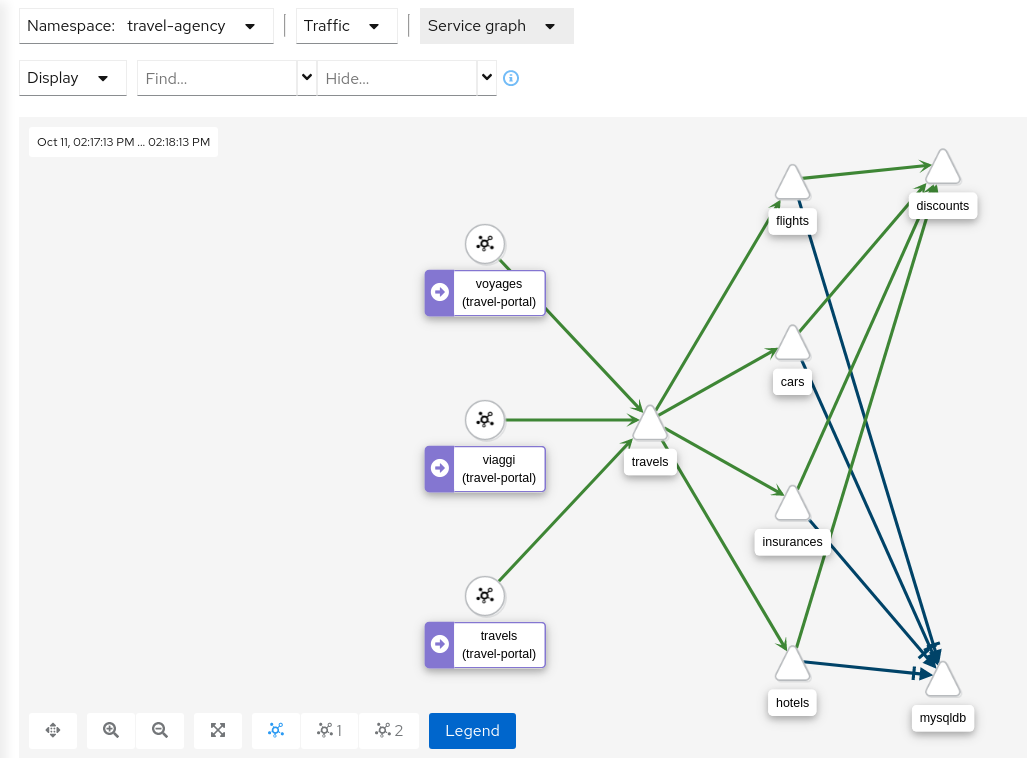

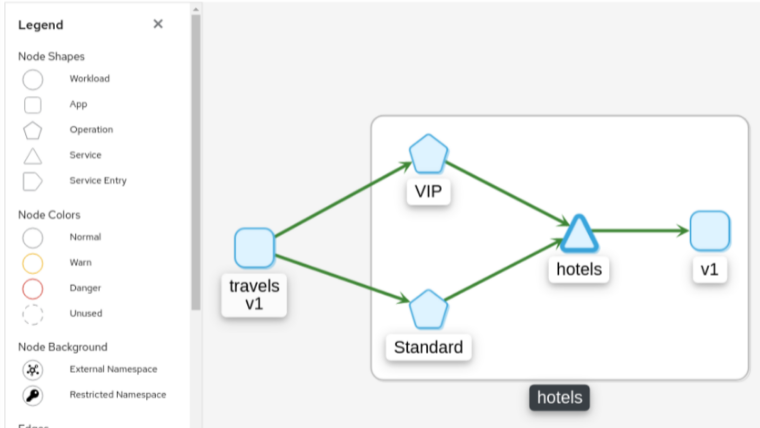

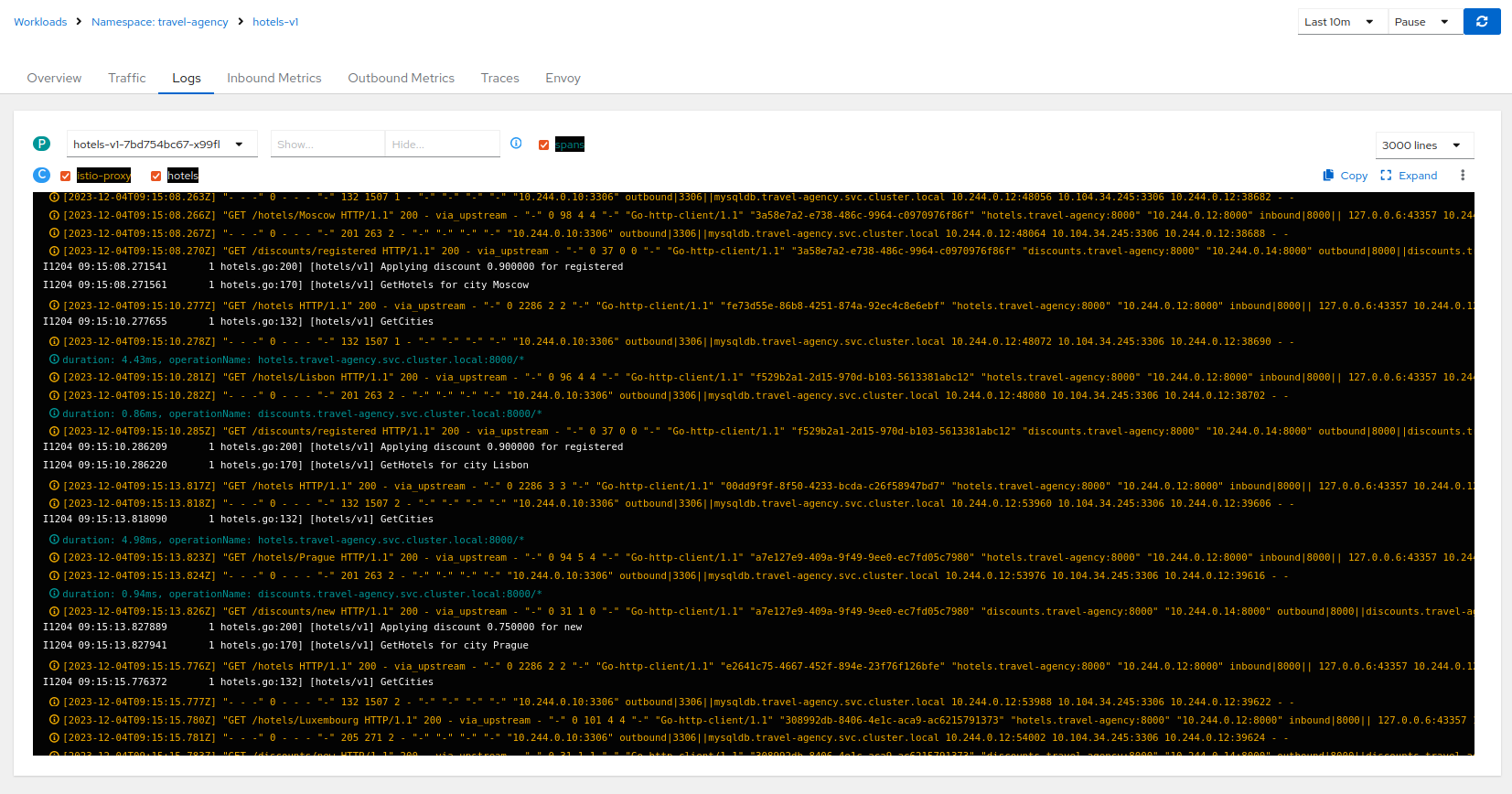

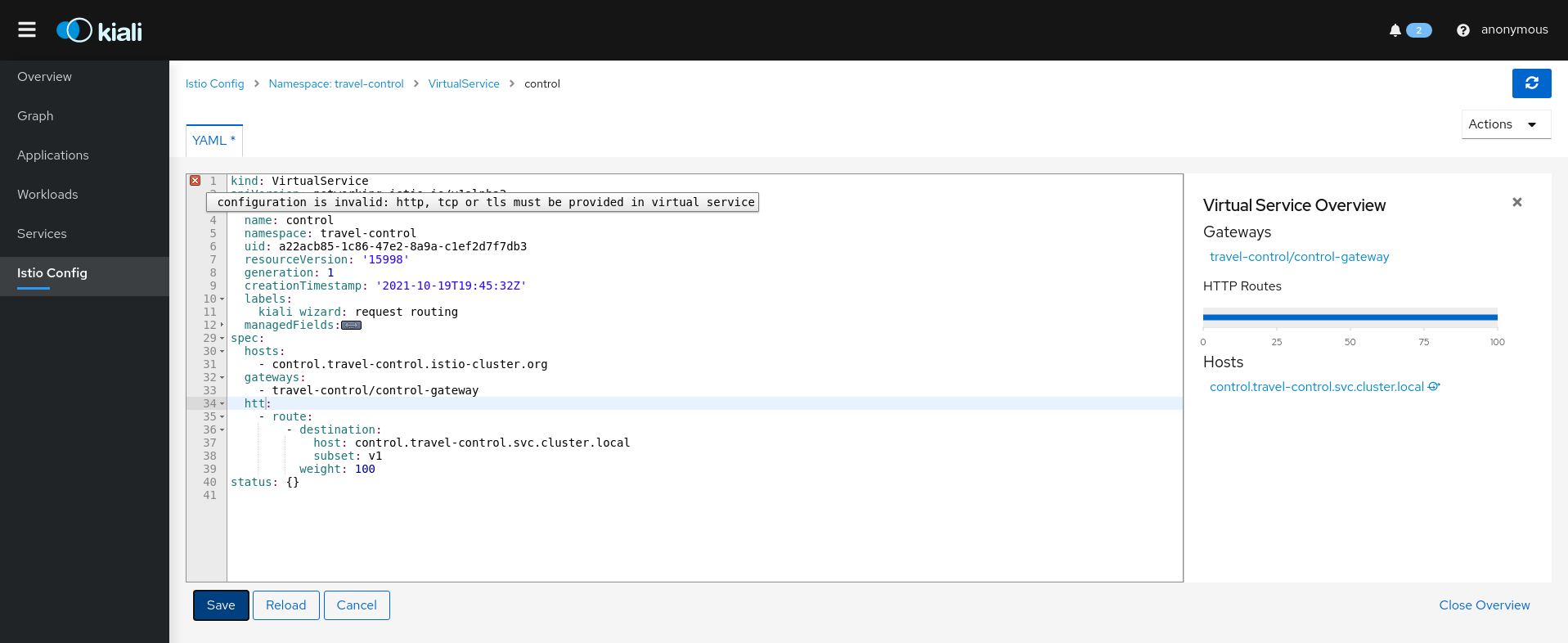

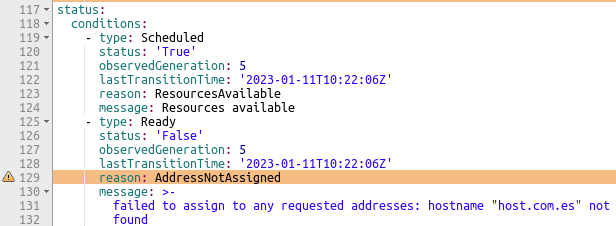

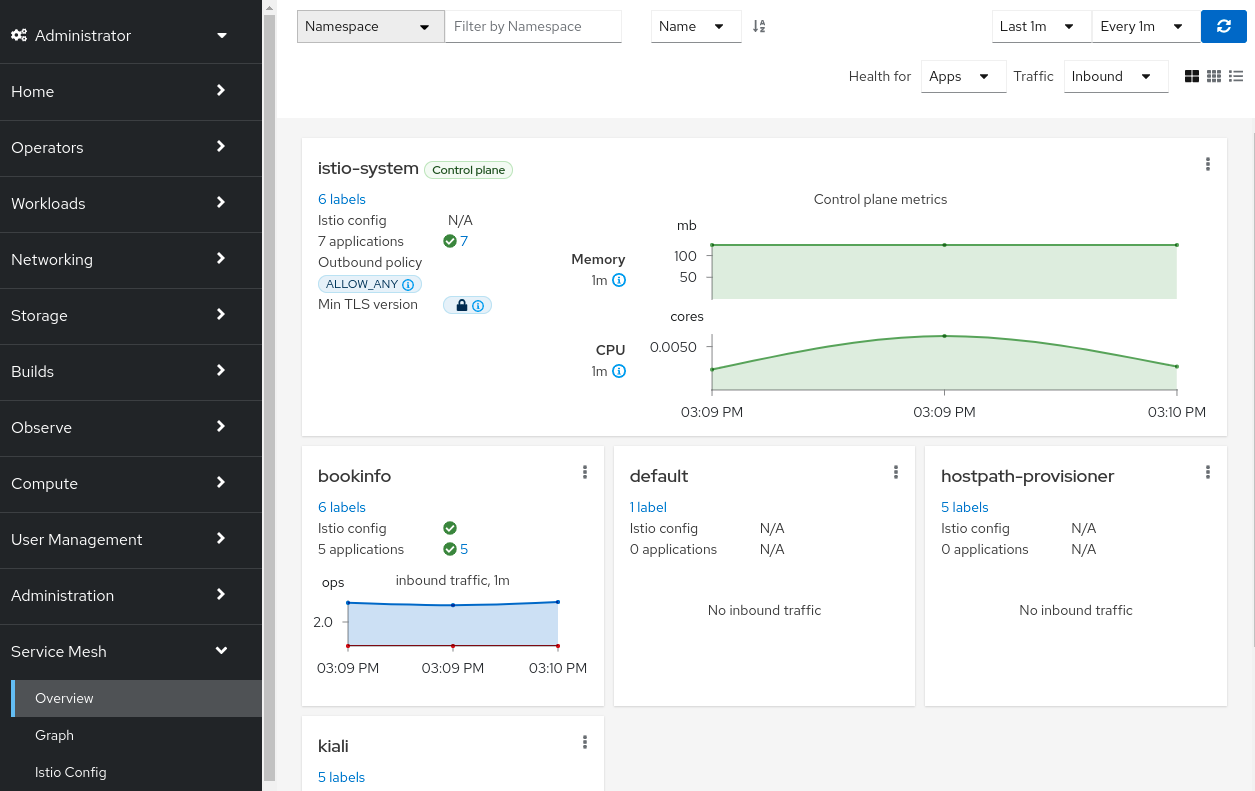

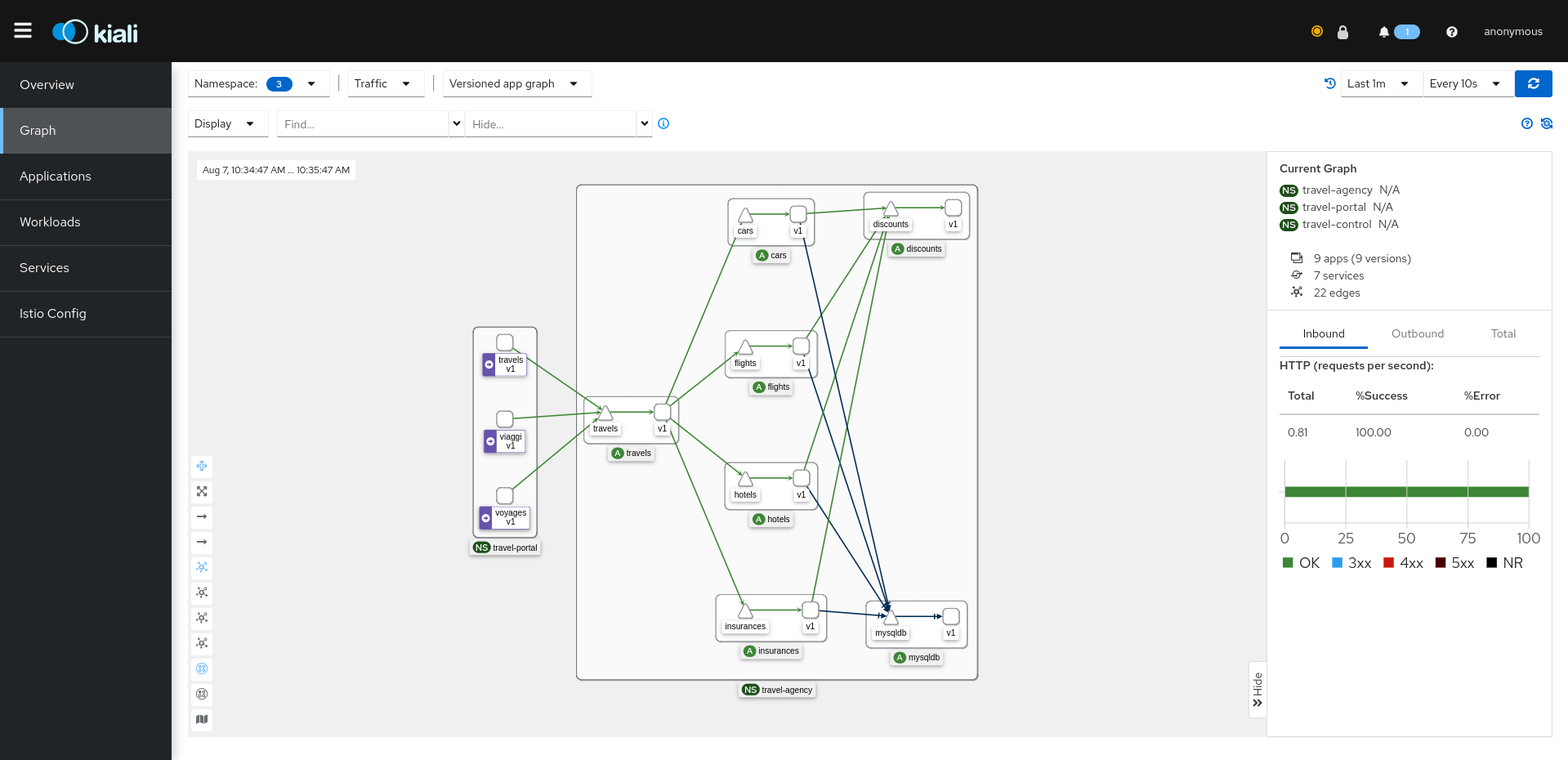

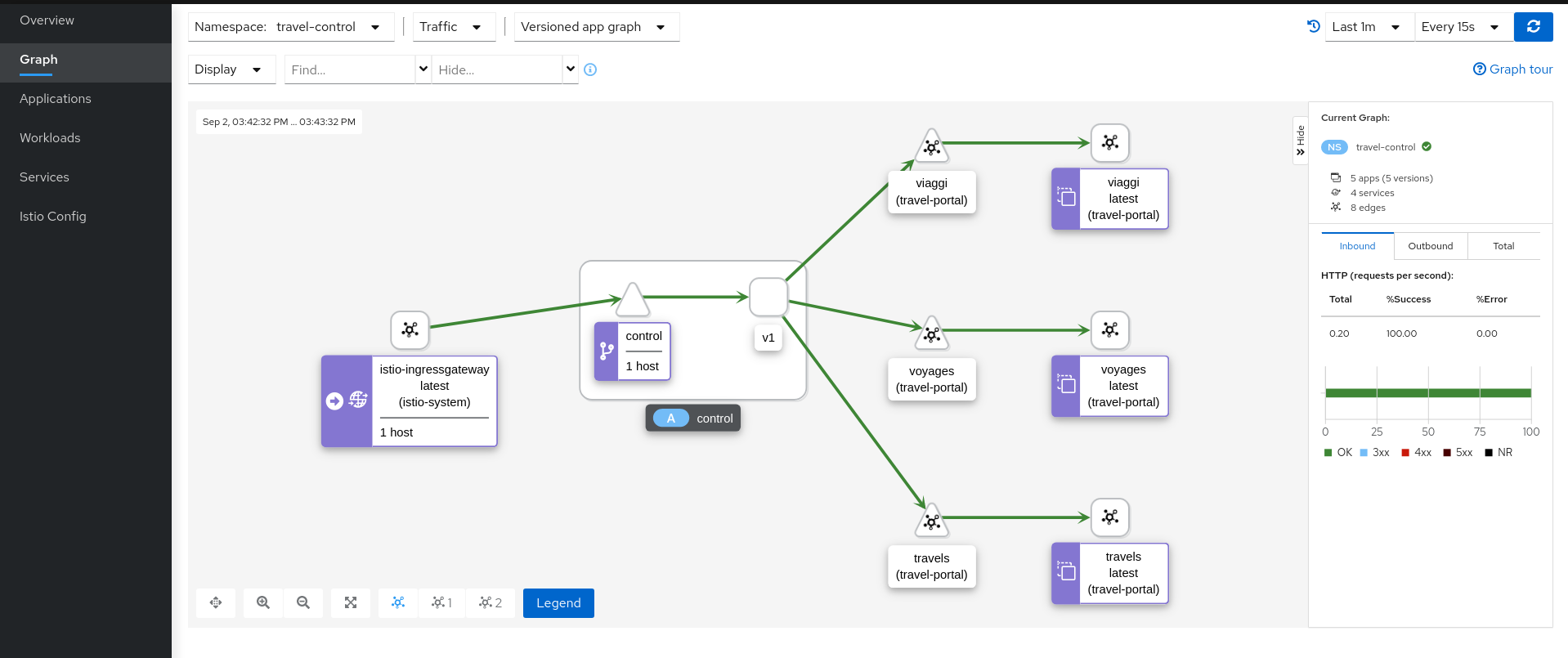

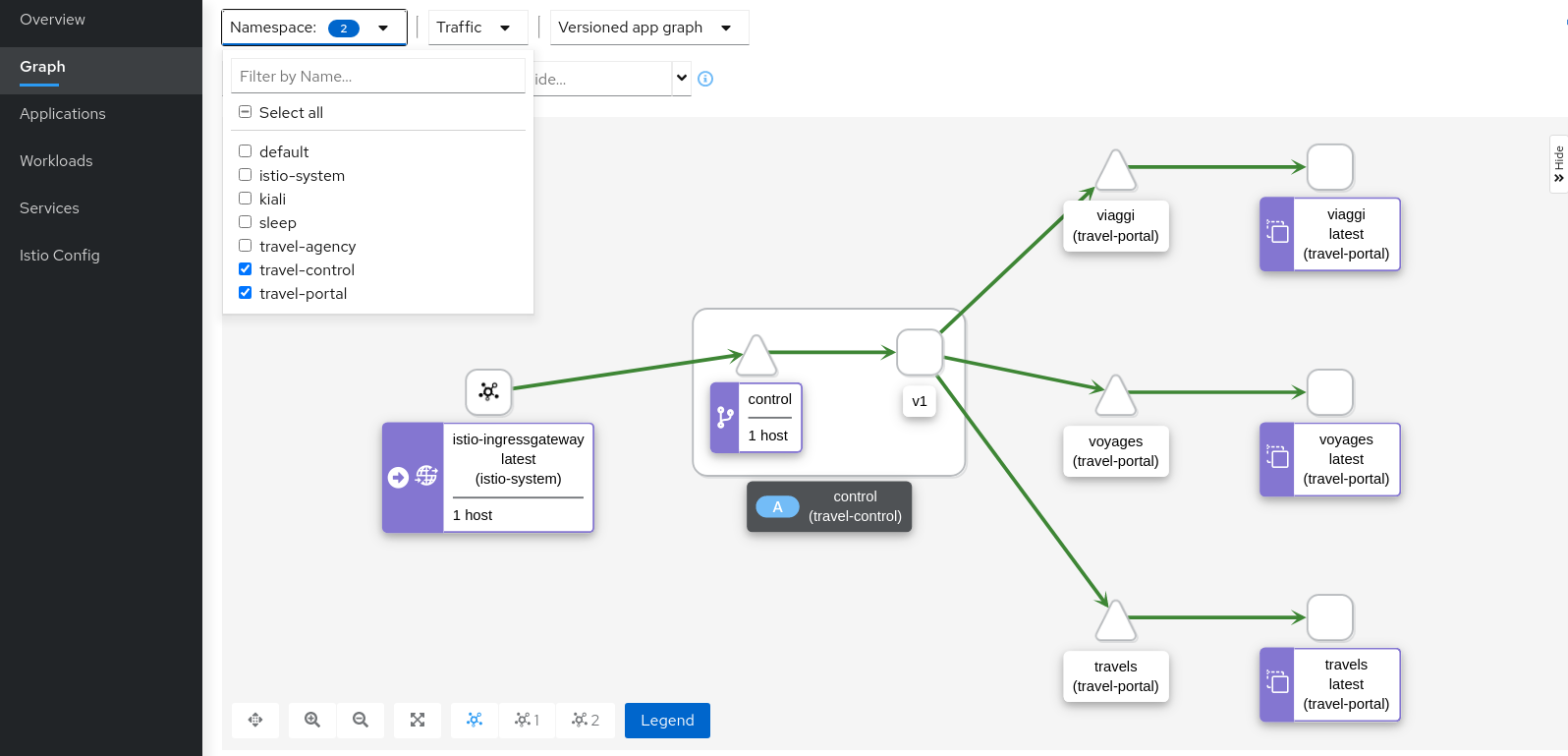

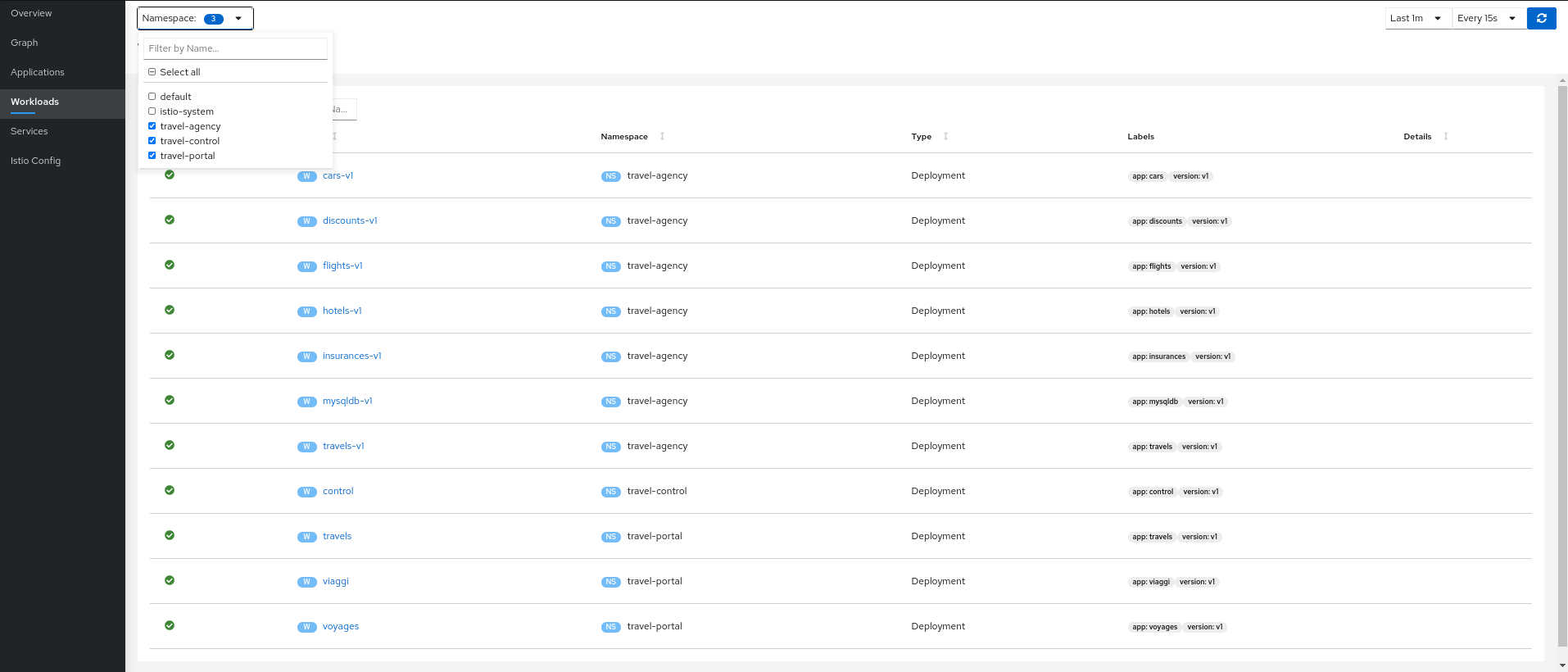

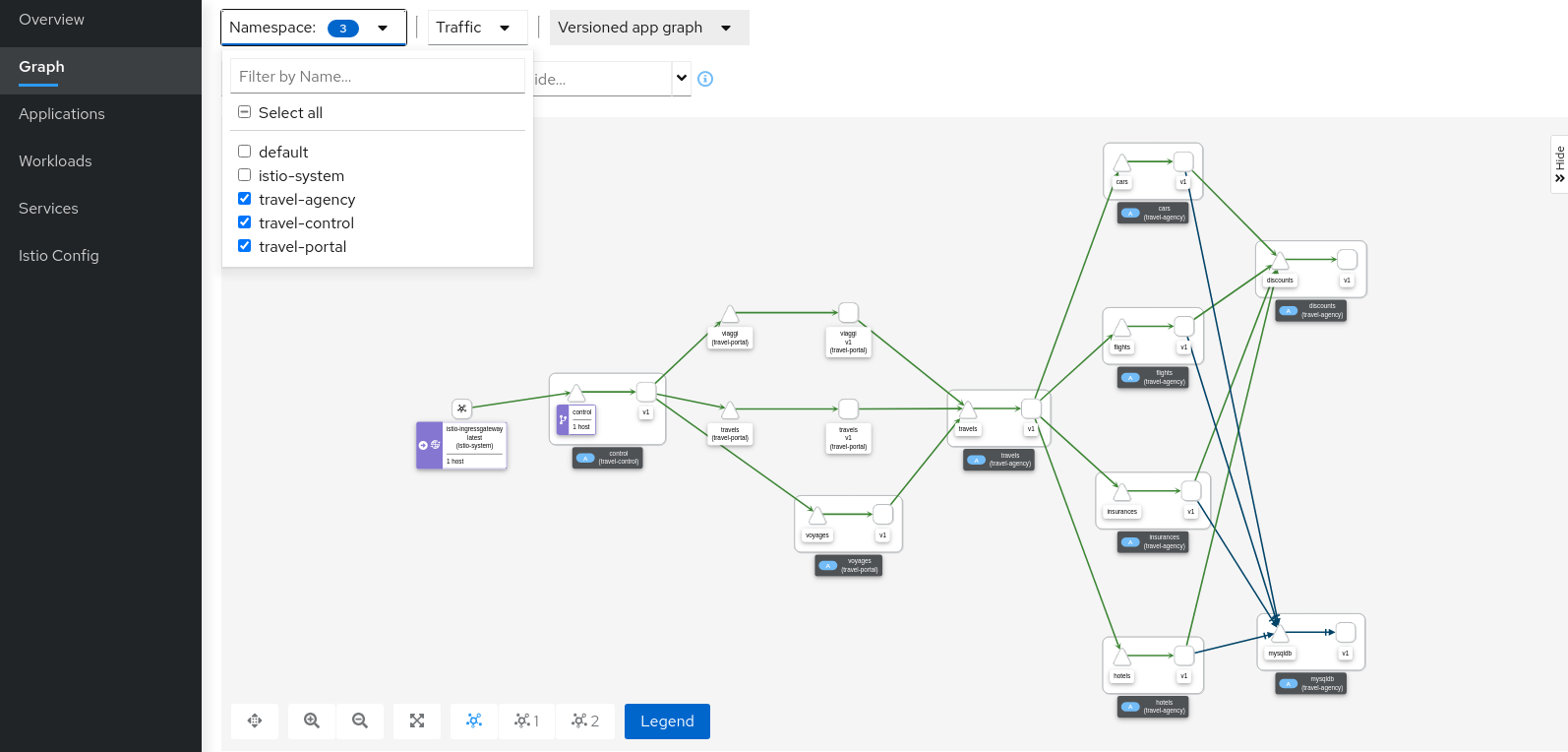

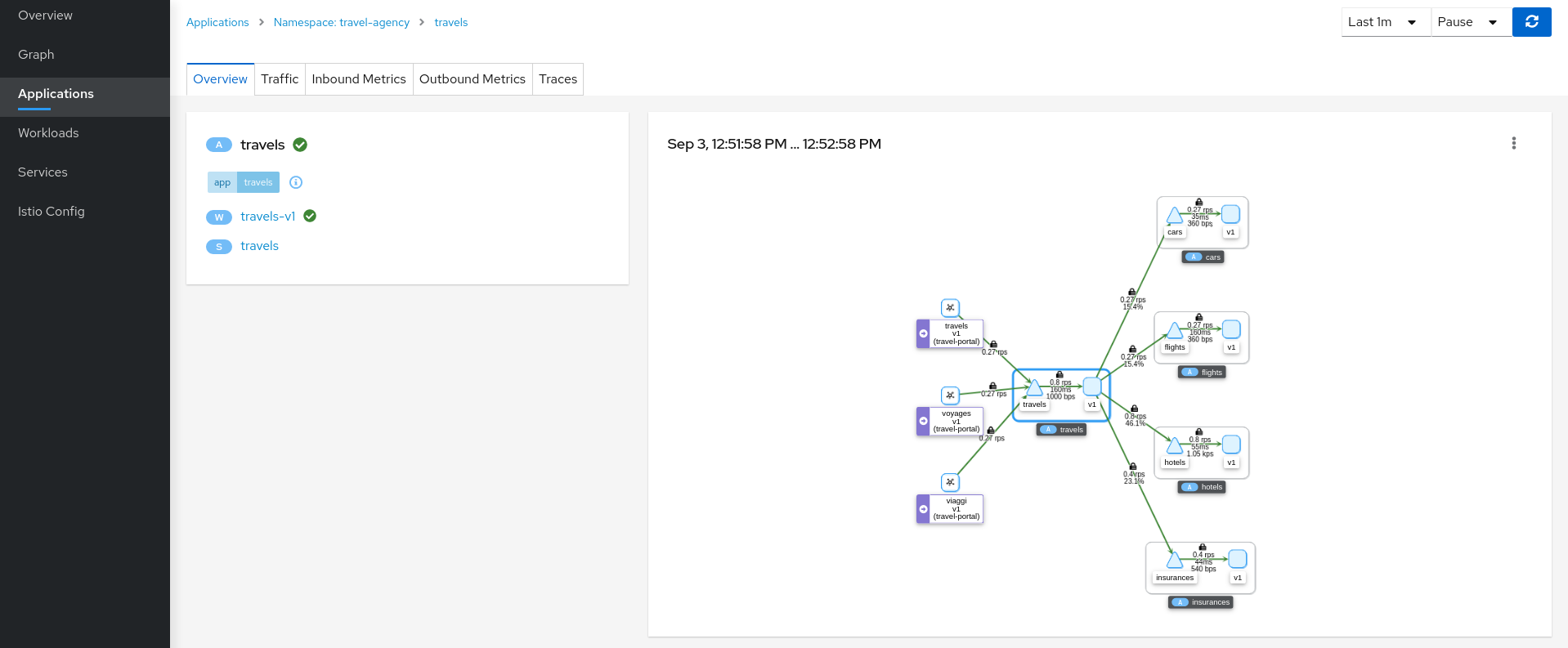

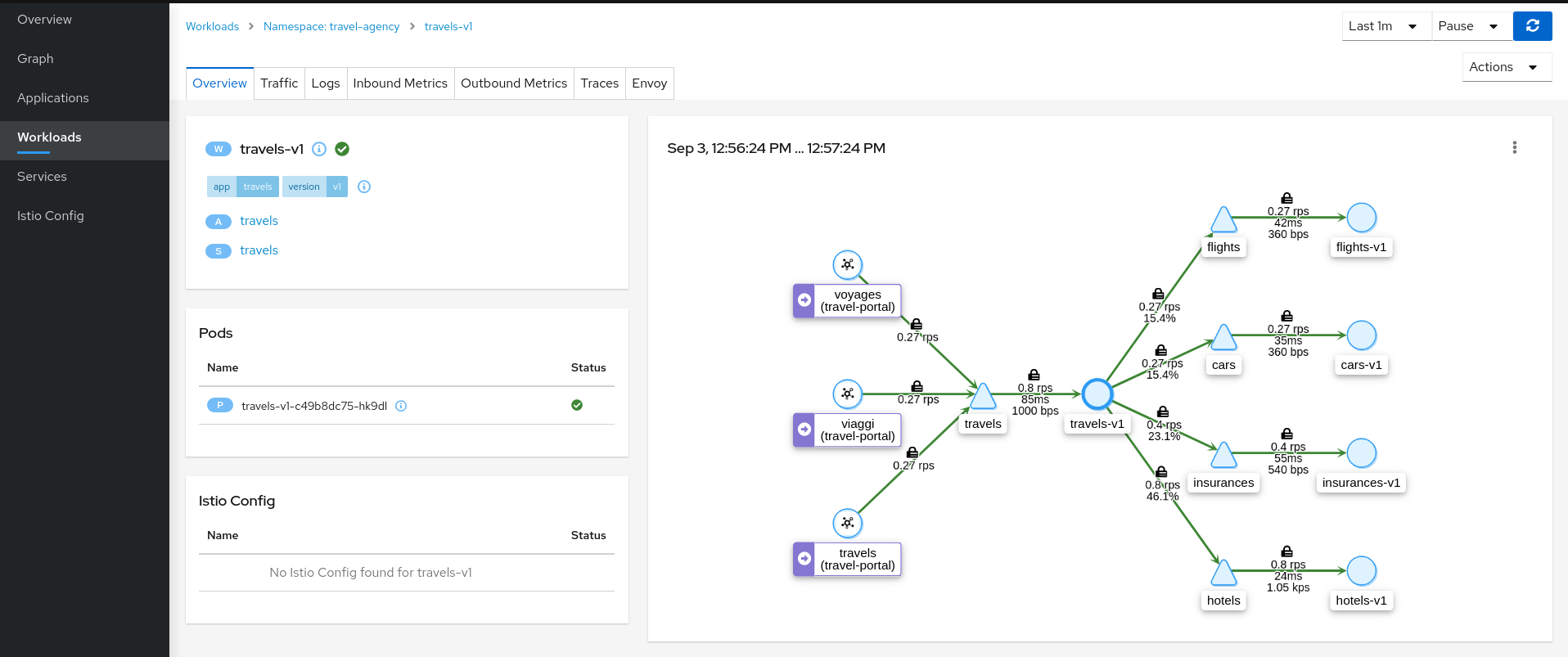

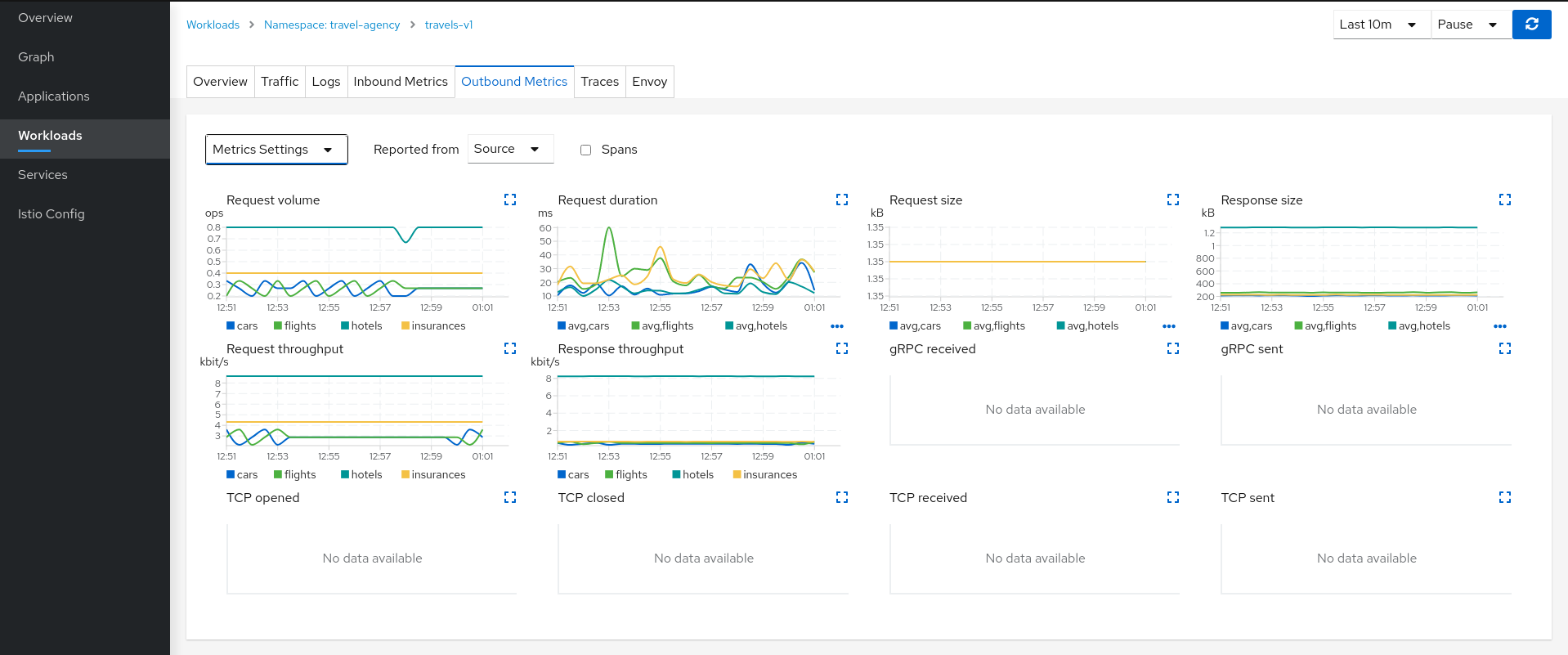

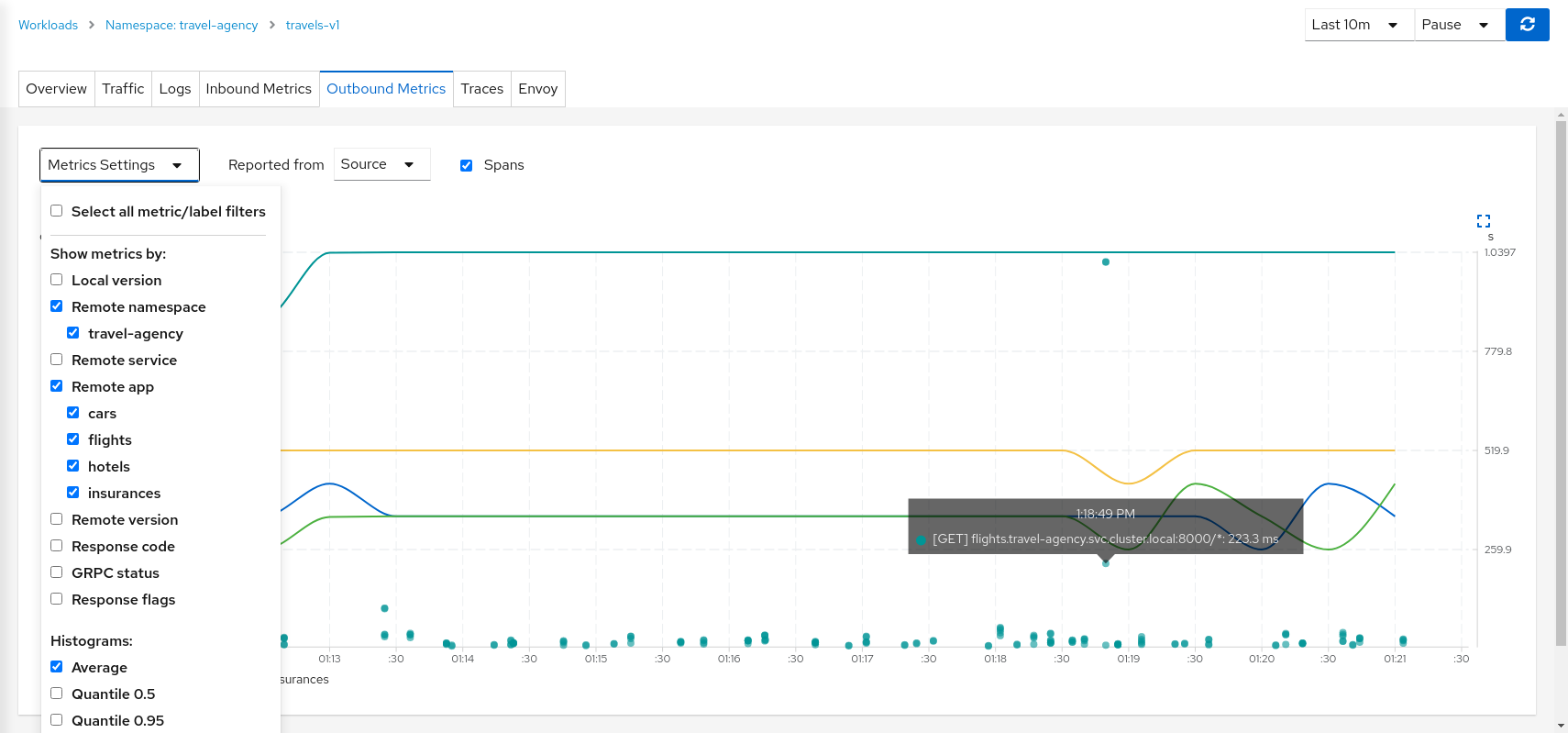

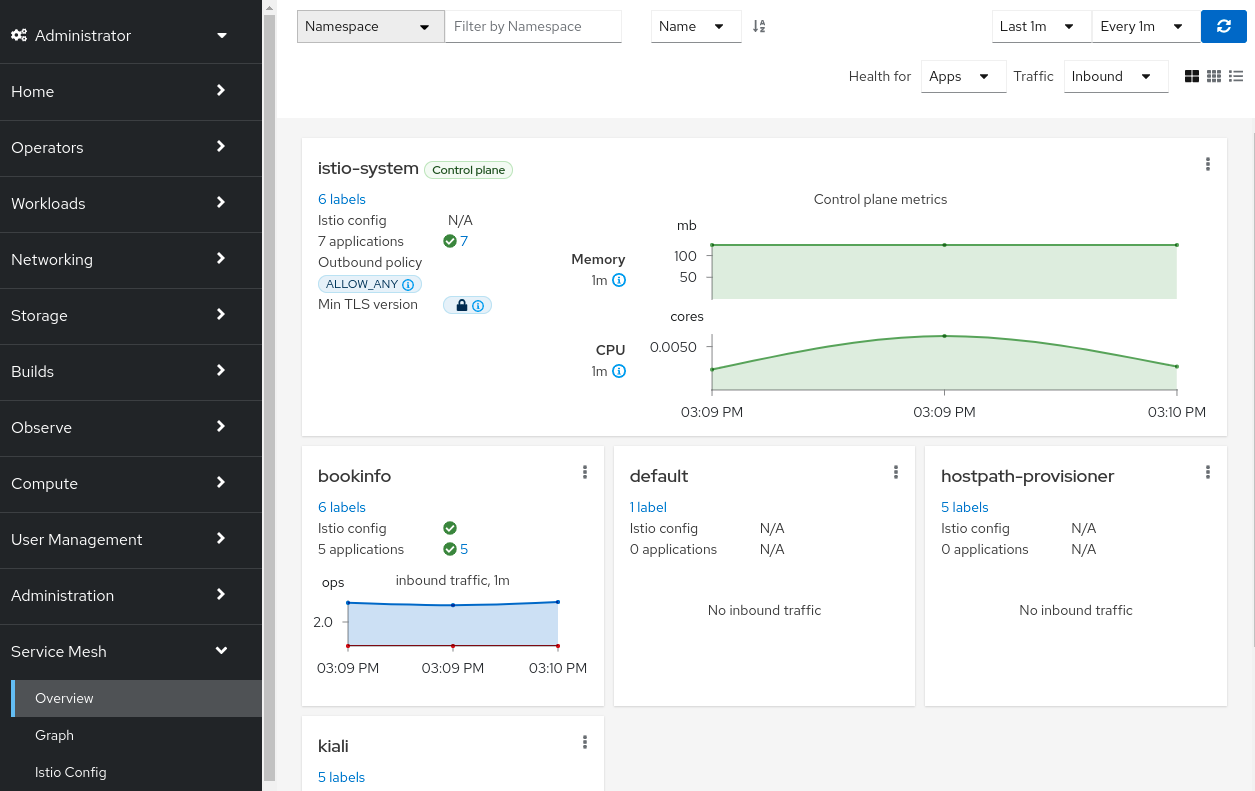

- 5.3.4: Observe

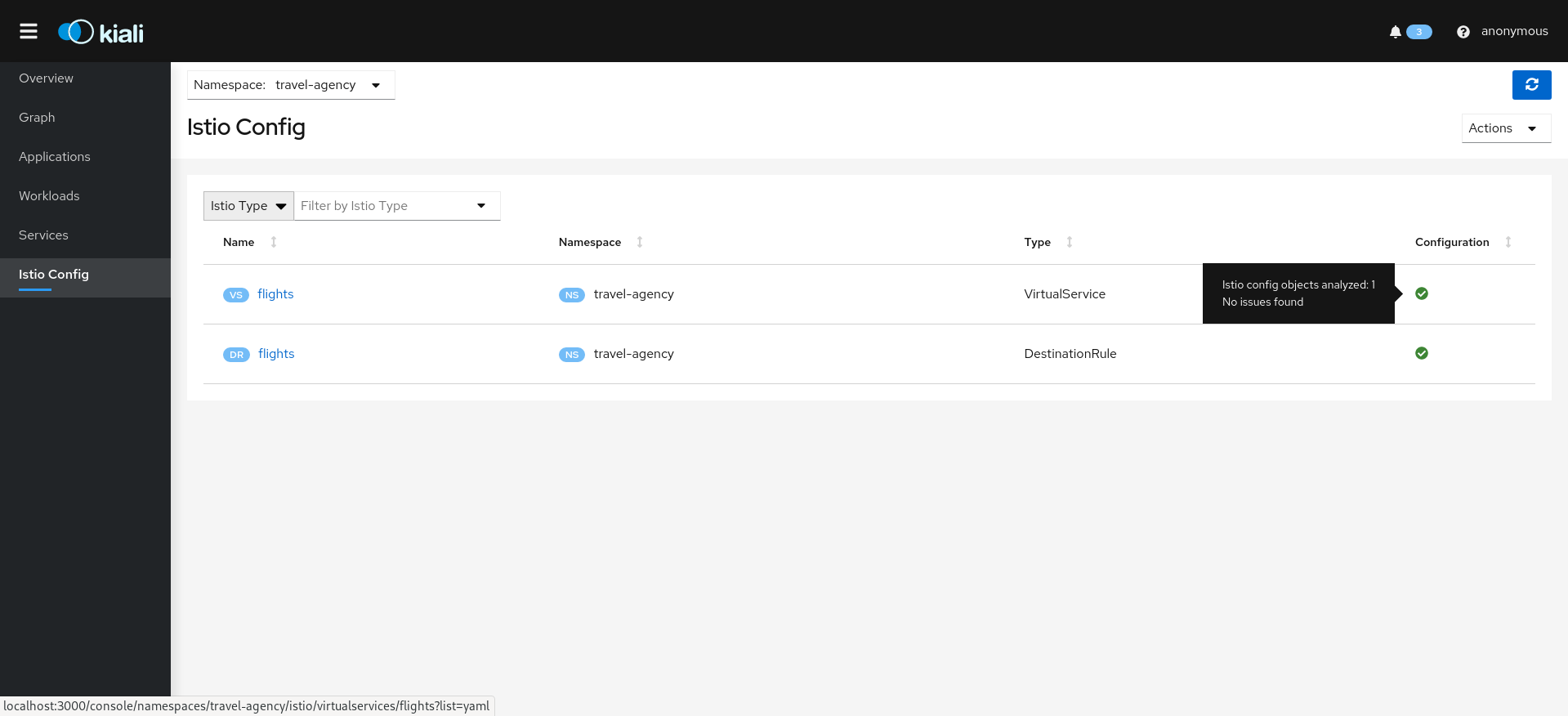

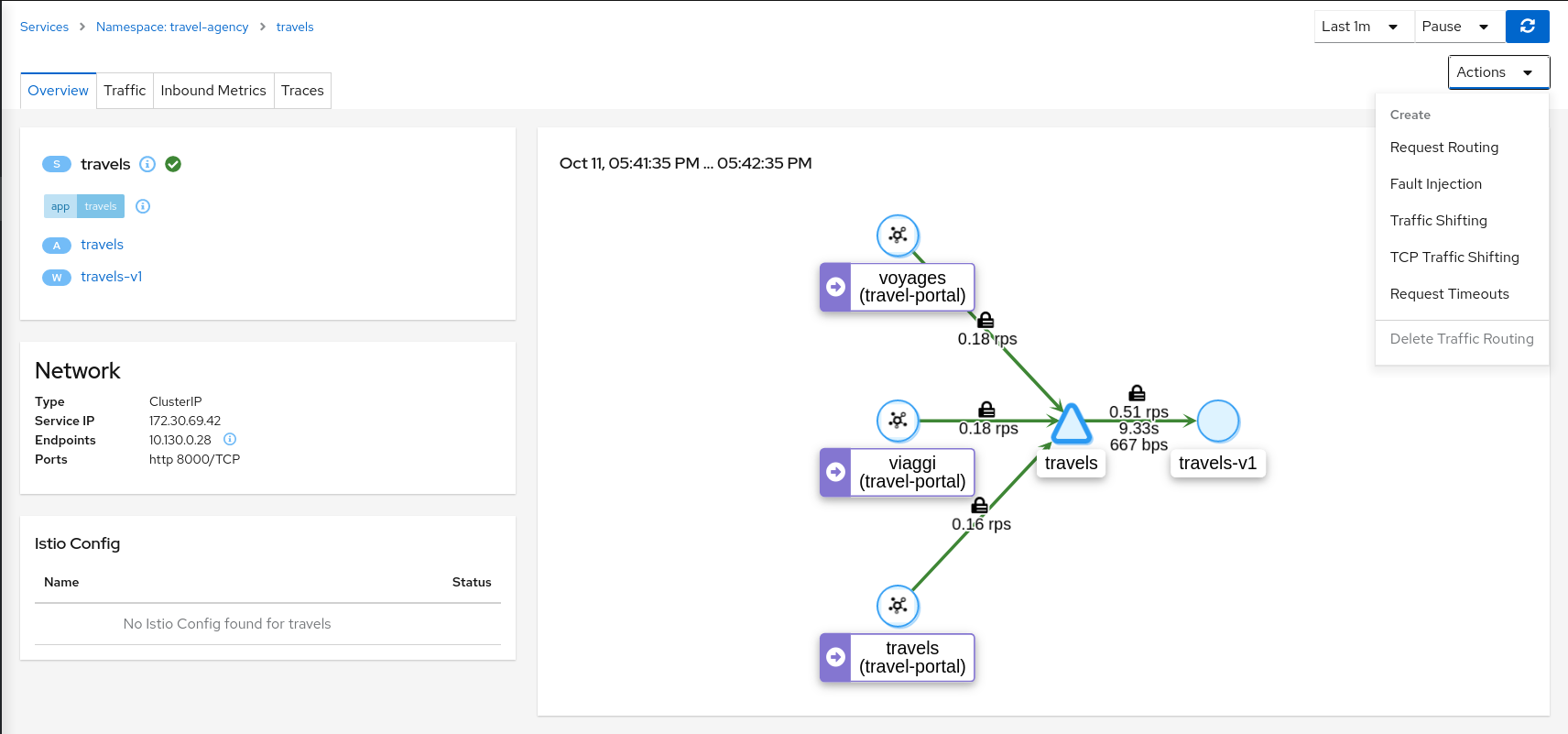

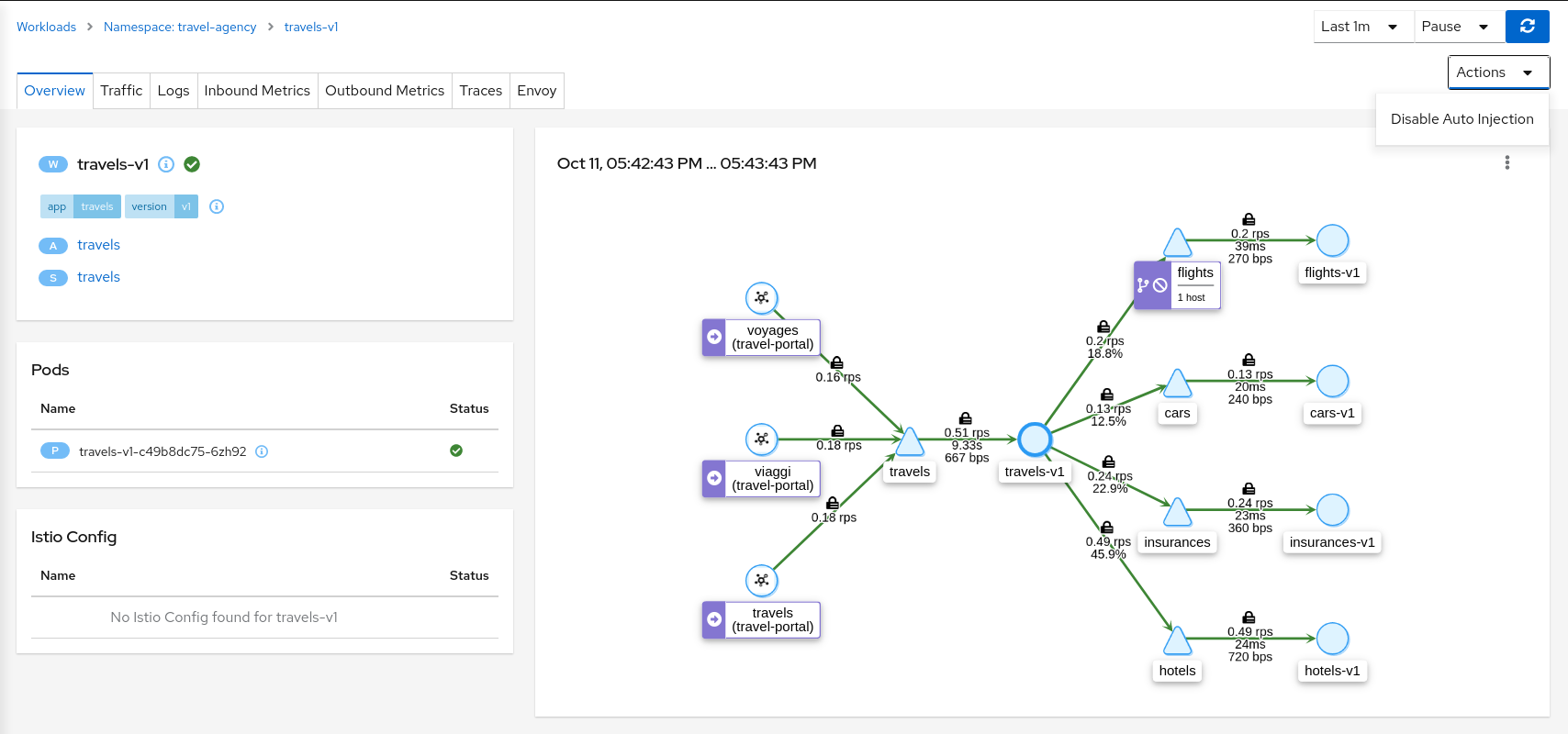

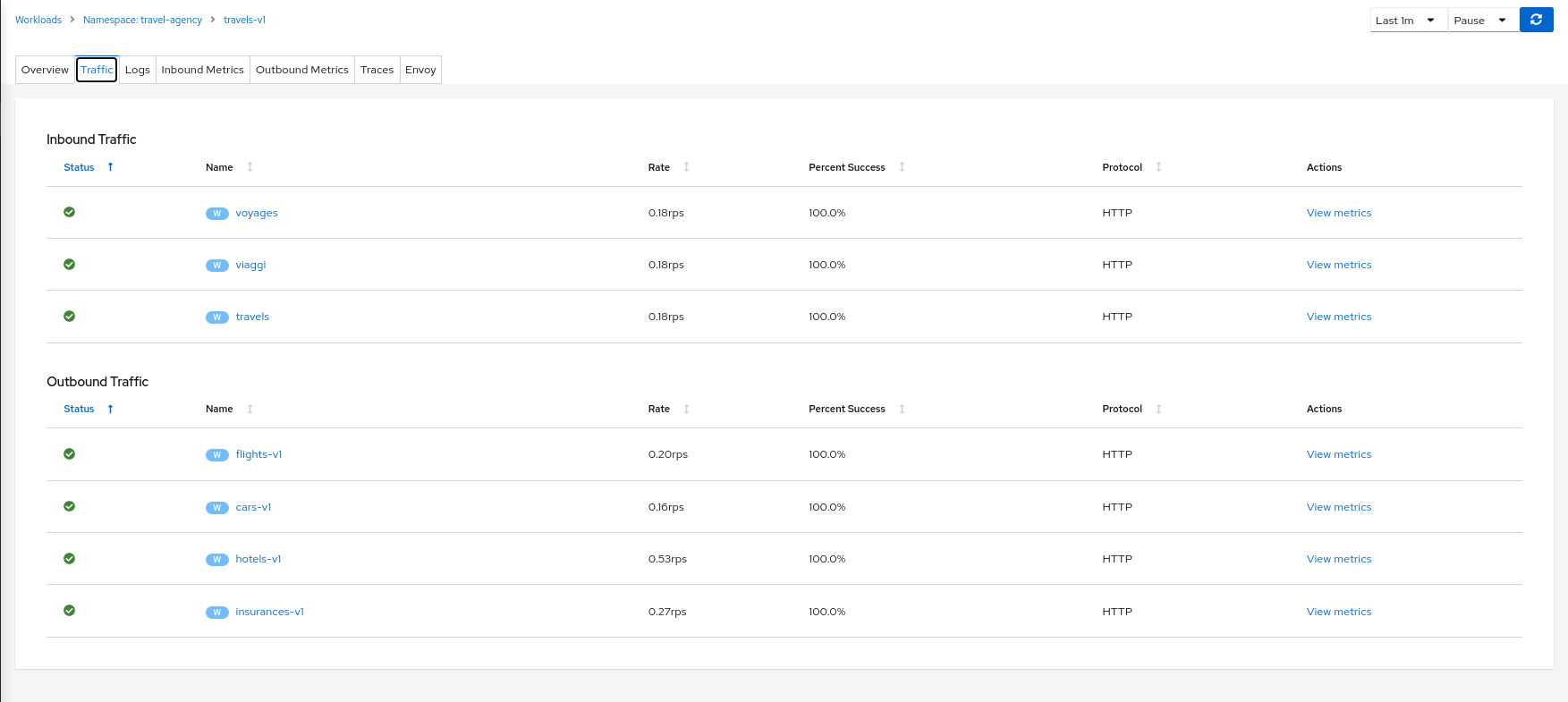

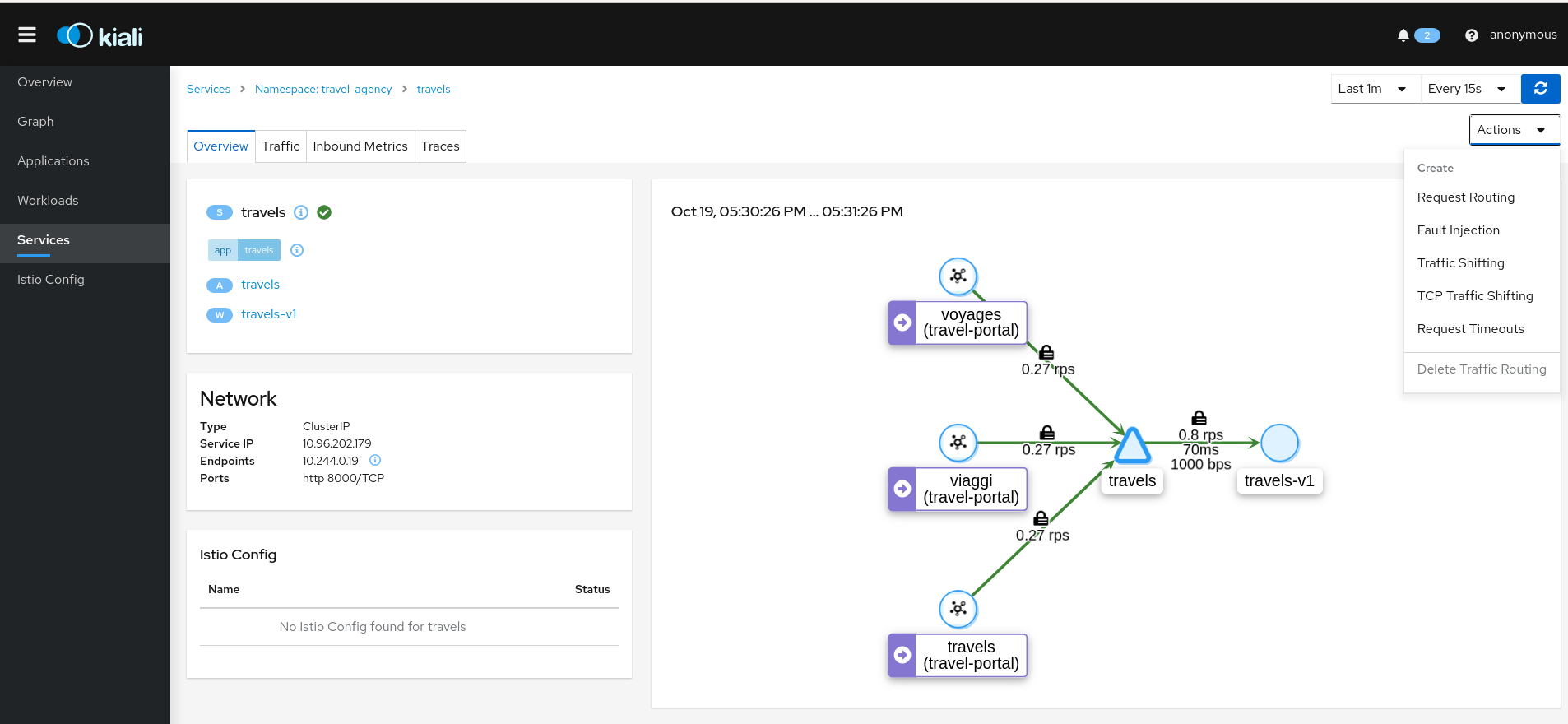

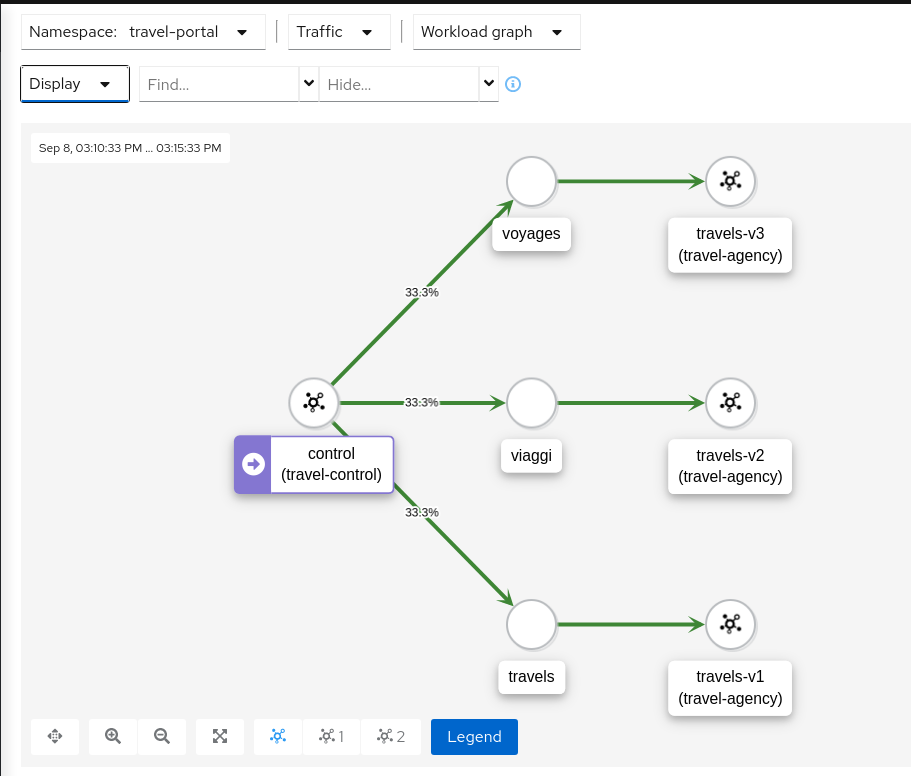

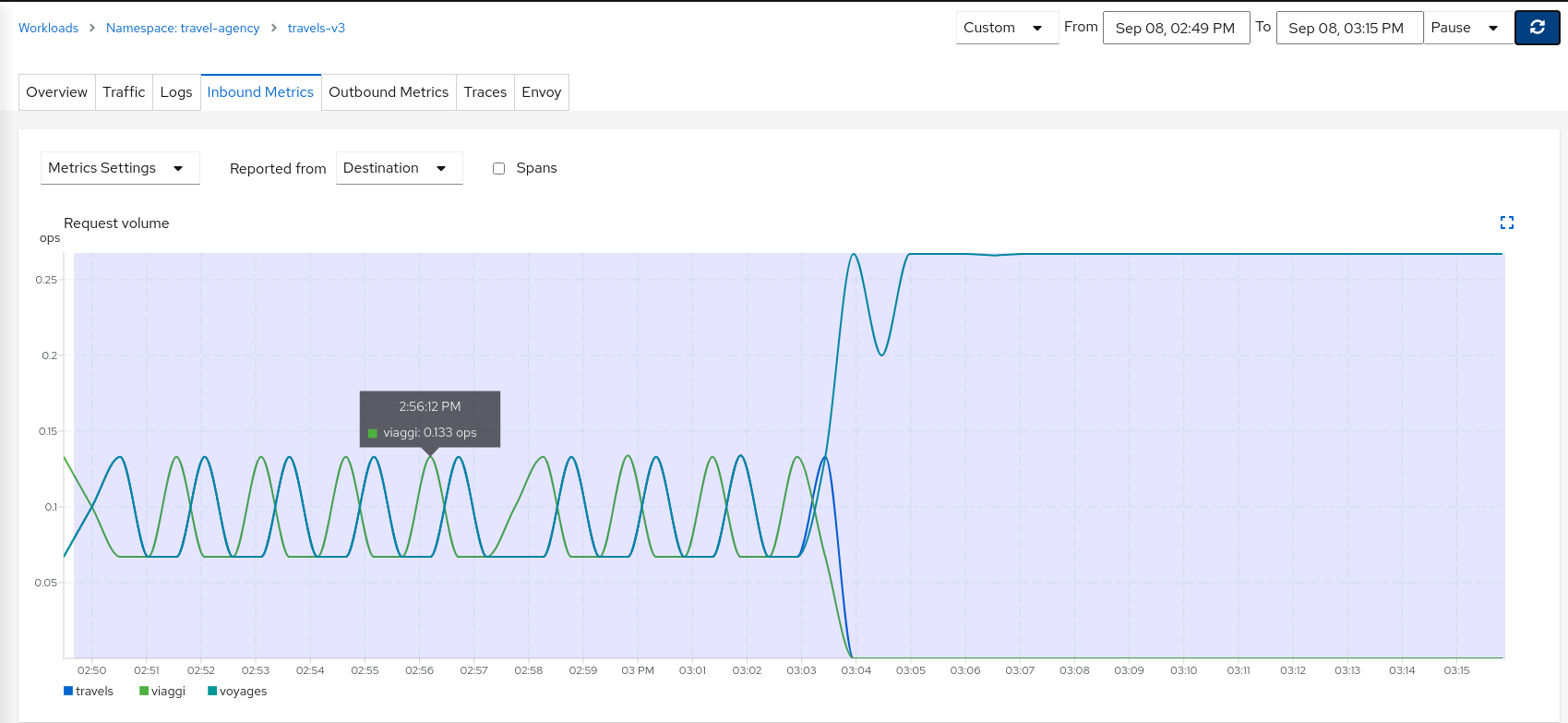

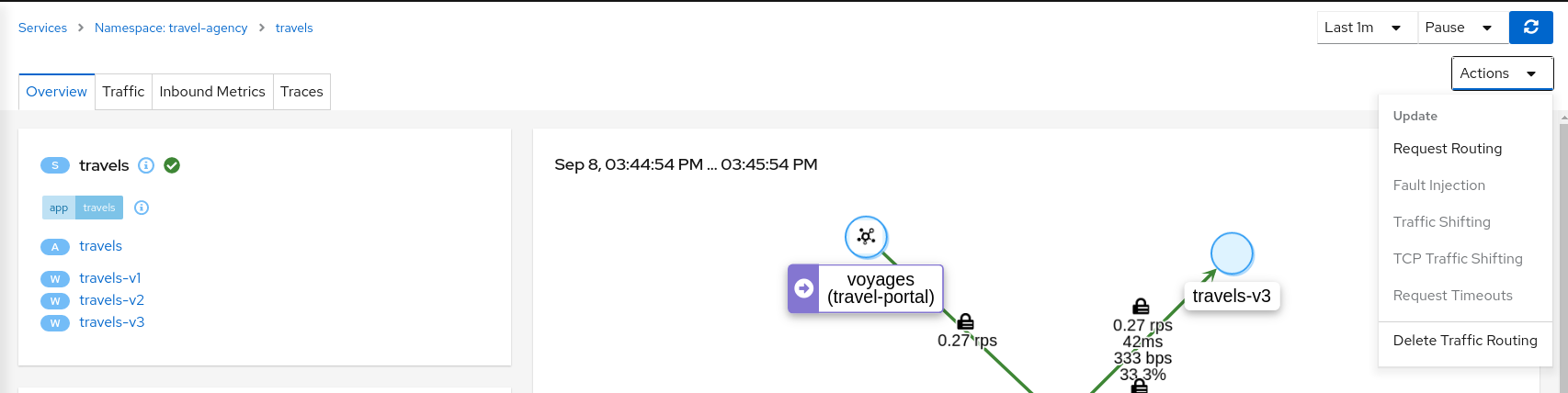

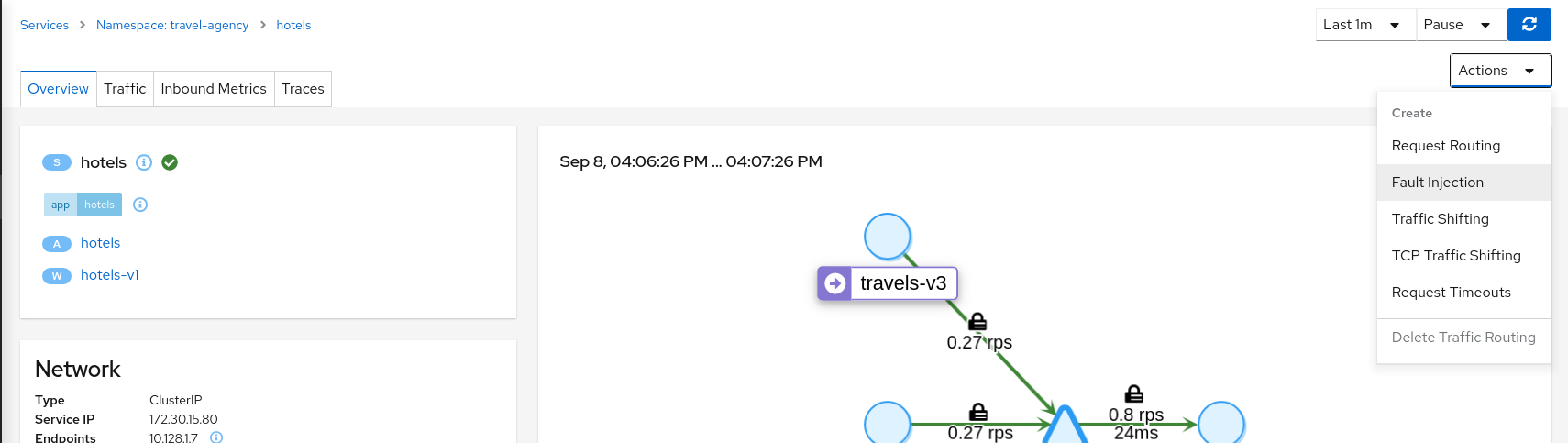

- 5.3.5: Connect

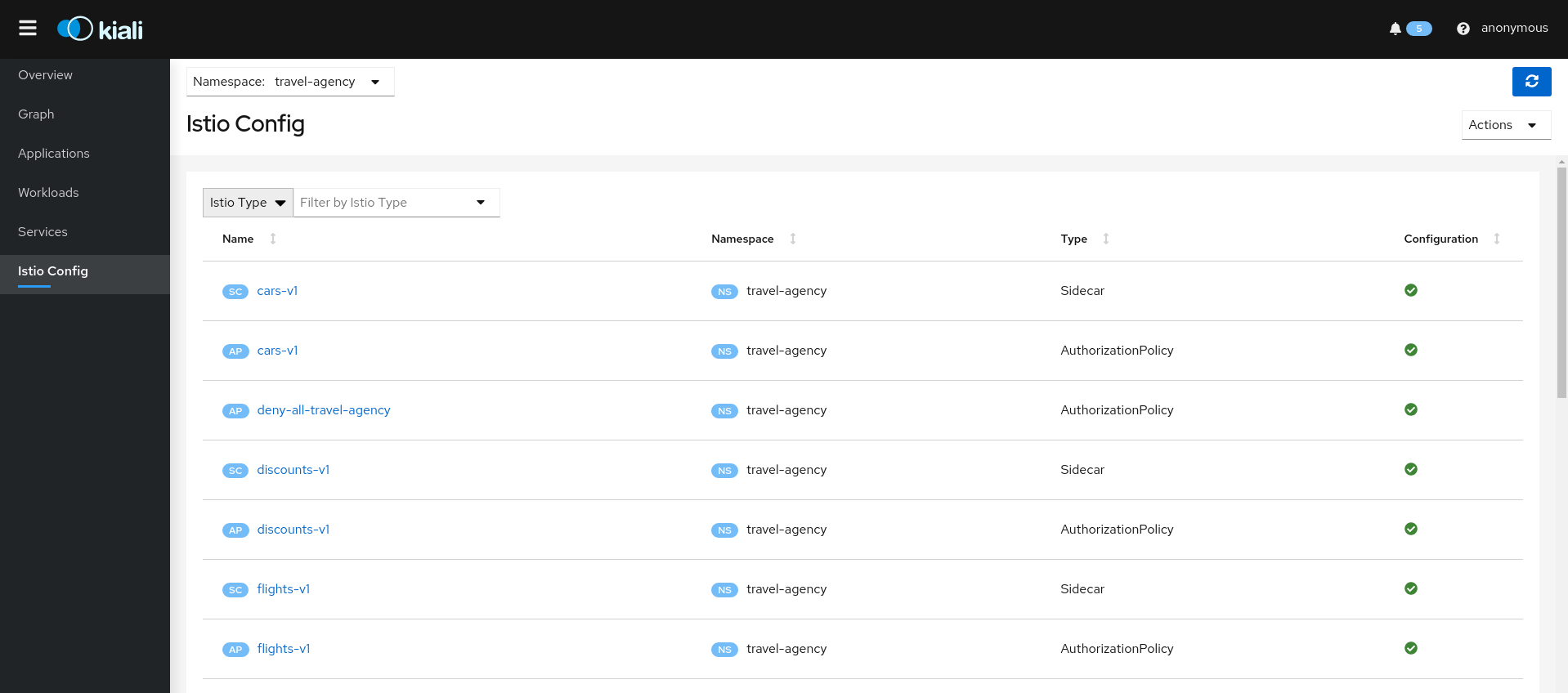

- 5.3.6: Secure

- 5.3.7: Uninstall Travel Demo

- 6: Architecture and Terms

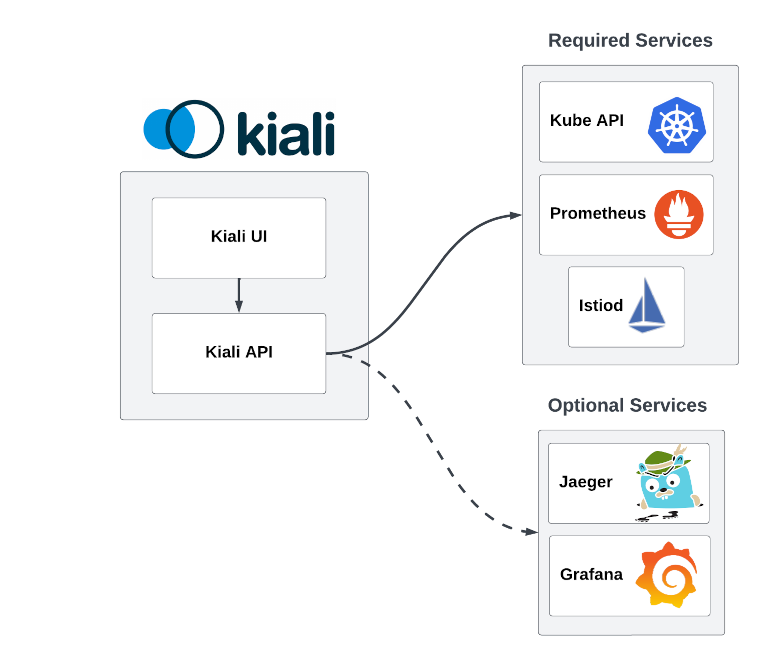

- 6.1: Architecture

- 6.2: Terminology

- 6.2.1: Concepts

- 6.2.2: Networking

- 7: FAQ

- 7.1: Authentication

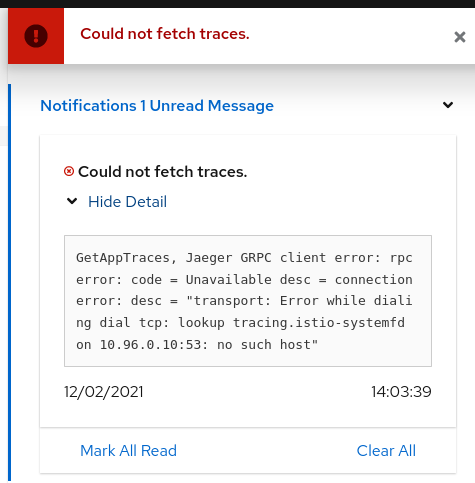

- 7.2: Distributed Tracing

- 7.3: General

- 7.4: Graph

- 7.5: Installation

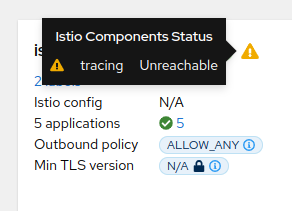

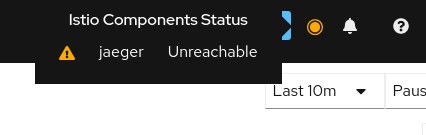

- 7.6: Istio Component Status

- 7.7: Validations

- 8: Contribution Guidelines

- 8.1: How to Contribute

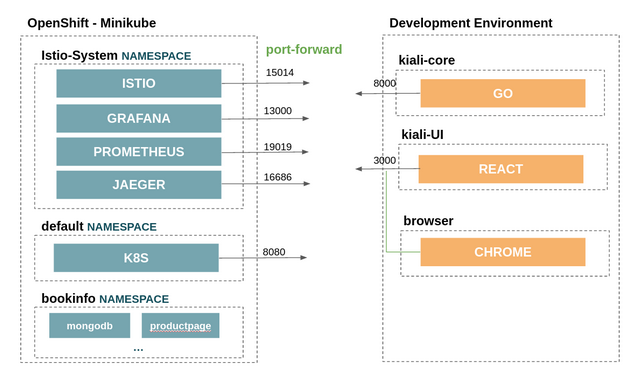

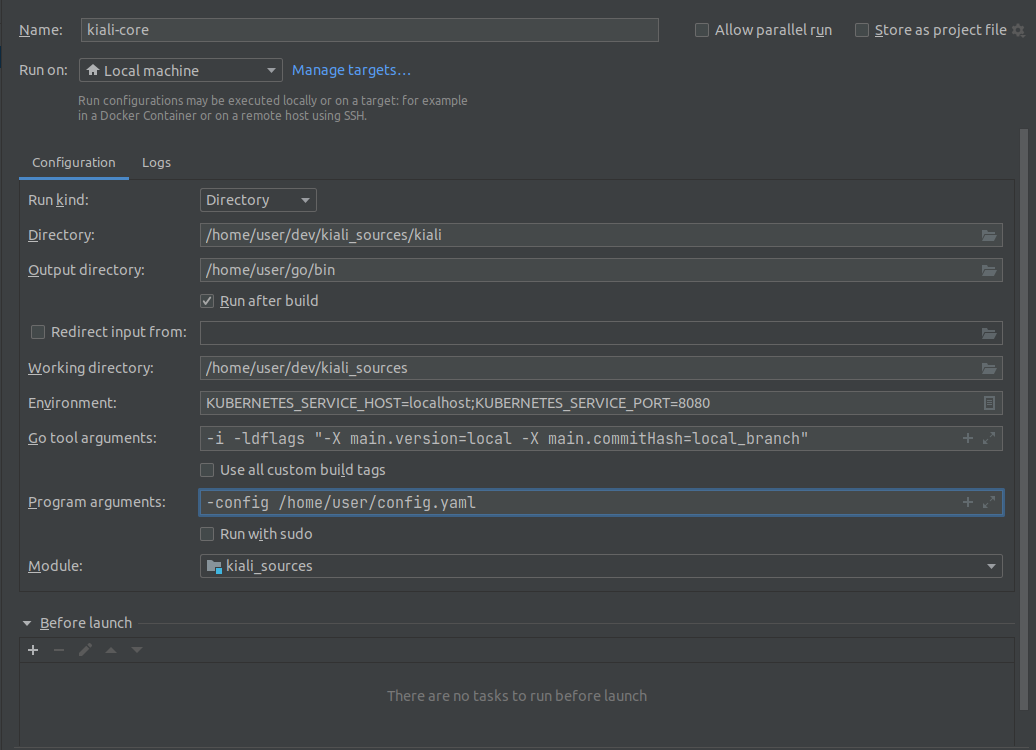

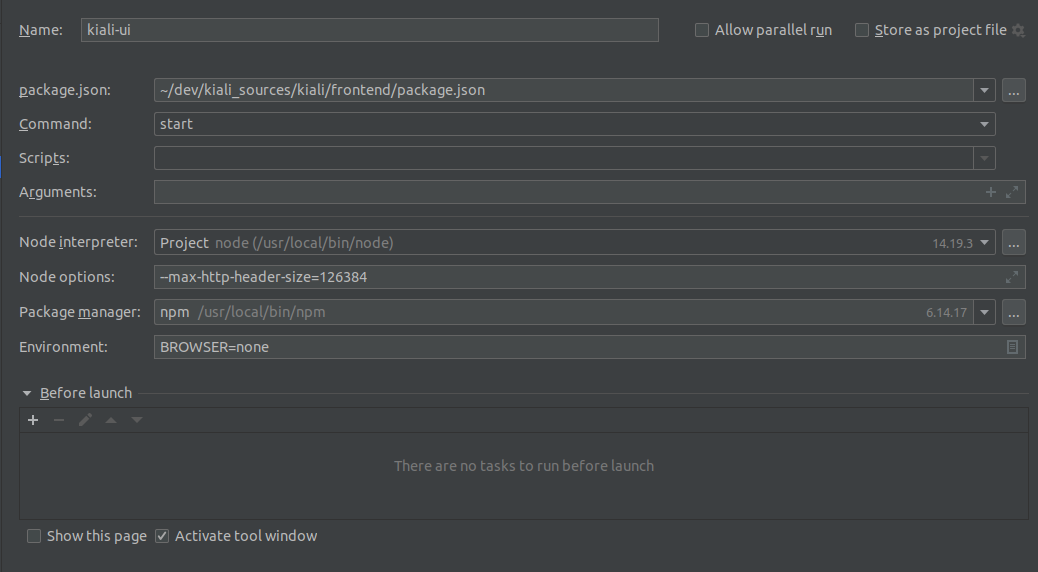

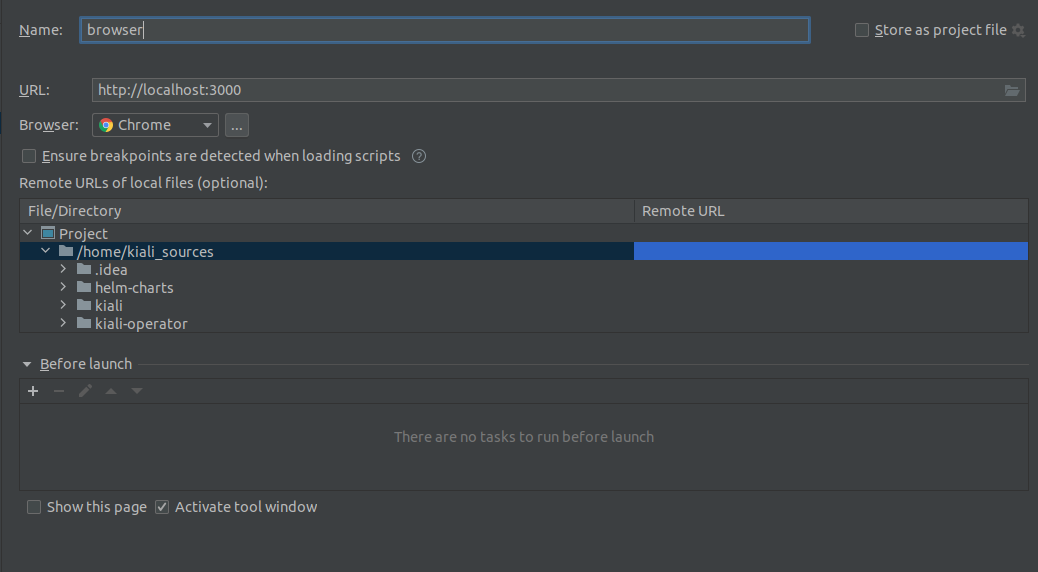

- 8.2: Development Environment

- 9: Kiali Integrations

- 9.1: OSSM Console

1 - Installation

1.1 - Quick Start

You can quickly install and try Kiali via one of the following two methods.

Install via Istio Addons

If you downloaded Istio, the easiest way to install and try Kiali is by running:

kubectl apply -f ${ISTIO_HOME}/samples/addons/kiali.yaml

To uninstall:

kubectl delete -f ${ISTIO_HOME}/samples/addons/kiali.yaml --ignore-not-found

Install via Helm

To install the latest version of Kiali Server using Helm, run the following command:

helm install \

--namespace istio-system \

--set auth.strategy="anonymous" \

--repo https://kiali.org/helm-charts \

kiali-server \

kiali-server

If you get validation errors, pass the option --disable-openapi-validation to the helm install command (this is needed on some versions of OpenShift, for example).

To uninstall:

helm uninstall --namespace istio-system kiali-server

Access to the UI

Run the following command:

kubectl port-forward svc/kiali 20001:20001 -n istio-system

Then, access Kiali by visiting https://localhost:20001/ in your preferred web browser.

1.2 - Installation Guide

This section describes the production installation methods available for Kiali.

The recommended way to deploy Kiali is via the Kiali Operator, either using Helm Charts or OperatorHub.

The Kiali Operator is a Kubernetes Operator and manages your Kiali installation. It watches the Kiali Custom Resource (Kiali CR), a YAML file that holds the deployment configuration.

1.2.1 - Prerequisites

Istio

Before you install Kiali you must have already installed Istio along with its telemetry storage addon (i.e. Prometheus). You might also consider installing Istio’s optional tracing addon (i.e. Jaeger) and optional Grafana addon but those are not required by Kiali. Refer to the Istio documentation for details.

Enable the Debug Interface

Like istioctl, Kiali makes use of Istio’s port 8080 “Debug Interface”. Despite the naming, this is required for accessing the status of the proxies and the Istio registry.

The ENABLE_DEBUG_ON_HTTP setting controls the relevant access. Istio suggests disabling this for security, but Kiali requires ENABLE_DEBUG_ON_HTTP=true, which is the default.

For more information, see the Istio documentation.

Version Compatibility

Each Kiali release is tested against the most recent Istio release. Kiali tries to maintain compatibility with older Istio releases, and Kiali versions later than those listed in the table below may work, but such combinations are not tested and are not supported. Known incompatibilities are noted in the table.

| Istio | Kiali Min | Kiali Max | Notes |
|---|---|---|---|
| 1.21 | 1.79.0 | | |
| 1.20 | 1.76.0 | 1.78.0 | |
| 1.19 | 1.72.0 | 1.75.0 | |
| 1.18 | 1.67.0 | 1.73.0 | |
| 1.17 | 1.63.2 | 1.66.1 | Avoid 1.63.0, 1.63.1 due to a regression. |
| 1.16 | 1.59.1 | 1.63.2 | Istio 1.16 is out of support. Avoid 1.62.0, 1.63.0, 1.63.1 due to a regression. |
| 1.15 | 1.55.1 | 1.59.0 | Istio 1.15 is out of support. |
| 1.14 | 1.50.0 | 1.54 | Istio 1.14 is out of support. |
| 1.13 | 1.45.1 | 1.49 | Istio 1.13 is out of support. |
| 1.12 | 1.42.0 | 1.44 | Istio 1.12 is out of support. |
| 1.11 | 1.38.1 | 1.41 | Istio 1.11 is out of support. |
| 1.10 | 1.34.1 | 1.37 | Istio 1.10 is out of support. |
| 1.9 | 1.29.1 | 1.33 | Istio 1.9 is out of support. |
| 1.8 | 1.26.0 | 1.28 | Istio 1.8 removes all support for mixer/telemetry V1, as does Kiali 1.26.0. Use earlier versions of Kiali for mixer support. |
| 1.7 | 1.22.1 | 1.25 | Istio 1.7 istioctl no longer installs Kiali. Use the Istio samples/addons for quick demo installs. Istio 1.7 is out of support. |
| 1.6 | 1.18.1 | 1.21 | Istio 1.6 introduces CRD and Config changes, Kiali 1.17 is recommended for Istio < 1.6. |
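A range check against the Min and Max columns can be scripted. The following is a minimal sketch, not part of Kiali: the function name `version_in_range` is hypothetical, it assumes a `sort` that supports version ordering via `-V` (GNU coreutils, recent BSD), and it expects full `X.Y.Z` versions, so a table entry such as `1.54` would need a patch level added by the caller.

```shell
# Hypothetical helper (an illustration only, not shipped with Kiali).
# Succeeds if version $1 lies within [$2, $3], bounds included.
# Pass an empty string as $3 for a row with no maximum.
version_in_range() {
  local ver="$1" min="$2" max="${3:-}"
  local lowest
  # ver >= min holds when min sorts first (or both are equal)
  lowest="$(printf '%s\n%s\n' "$min" "$ver" | sort -V | head -n 1)"
  [ "$lowest" = "$min" ] || return 1
  # open-ended range: nothing more to check
  if [ -z "$max" ]; then
    return 0
  fi
  # ver <= max holds when ver sorts first (or both are equal)
  lowest="$(printf '%s\n%s\n' "$ver" "$max" | sort -V | head -n 1)"
  [ "$lowest" = "$ver" ]
}
```

For example, using the Istio 1.18 row, `version_in_range 1.73.0 1.67.0 1.73.0` succeeds, while `version_in_range 1.74.0 1.67.0 1.73.0` fails.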

Maistra Version Compatibility

| Maistra | SMCP CR | Kiali | Notes |
|---|---|---|---|
| 2.5 | 2.5 | 1.73 | Using Maistra 2.5 to install service mesh control plane 2.5 requires Kiali Operator v1.73. Other versions are not compatible. |
| 2.5 | 2.4 | 1.65 | Using Maistra 2.5 to install service mesh control plane 2.4 requires Kiali Operator v1.73. Other versions are not compatible. |
| 2.5 | 2.3 | 1.57 | Using Maistra 2.5 to install service mesh control plane 2.3 requires Kiali Operator v1.73. Other versions are not compatible. |
| 2.4 | 2.4 | 1.65 | Using Maistra 2.4 to install service mesh control plane 2.4 requires Kiali Operator v1.65. Other versions are not compatible. |
| 2.4 | 2.3 | 1.57 | Using Maistra 2.4 to install service mesh control plane 2.3 requires Kiali Operator v1.65. Other versions are not compatible. |
| 2.3 | 2.3 | 1.57 | Using Maistra 2.3 to install service mesh control plane 2.3 requires Kiali Operator v1.57. Other versions are not compatible. |
| n/a | 2.2 | n/a | Service mesh control plane 2.2 is out of support. |
| n/a | 2.1 | n/a | Service mesh control plane 2.1 is out of support. |
| n/a | 2.0 | n/a | Service mesh control plane 2.0 is out of support. |
| n/a | 1.1 | n/a | Service mesh control plane 1.1 is out of support. |
| n/a | 1.0 | n/a | Service mesh control plane 1.0 is out of support. |

OpenShift Console Plugin (OSSMC) Version Compatibility

Currently, the OSSMC plugin only works with Kiali Server 1.73.

| OpenShift | Min OSSMC | Max OSSMC | Kiali | Notes |
|---|---|---|---|---|
| 4.12+ | 1.73 | 1.73 | 1.73 | This is the most tested version. Newer community versions may also work. |

OpenShift Service Mesh Version Compatibility

OpenShift

If you are running Red Hat OpenShift Service Mesh (OSSM), use only the bundled version of Kiali.

Browser Compatibility

Kiali requires a modern web browser and supports the last two versions of Chrome, Firefox, Safari or Edge.

Hardware Requirements

Any machine capable of running a Kubernetes based cluster should also be able to run Kiali.

However, Kiali tends to grow in resource usage as your cluster grows. Usually the more namespaces and workloads you have in your cluster, the more memory you will need to allocate to Kiali.

Platform-specific requirements

OpenShift

If you are installing on OpenShift, you must grant the cluster-admin role to the user that is installing Kiali. If OpenShift is installed locally on the machine you are using, the following command should log you in as user system:admin which has this cluster-admin role:

$ oc login -u system:admin

kubectl is used to interact with the cluster environment. On OpenShift you can simply replace kubectl with oc, unless otherwise noted.

Google Cloud Private Cluster

Private clusters on Google Cloud have network restrictions. Kiali needs your cluster’s firewall to allow access from the Kubernetes API to the Istio Control Plane namespace, for both the 8080 and 15000 ports.

To review the master access firewall rule:

gcloud compute firewall-rules list --filter="name~gke-${CLUSTER_NAME}-[0-9a-z]*-master"

To replace the existing rule and allow master access:

gcloud compute firewall-rules update <firewall-rule-name> --allow <previous-ports>,tcp:8080,tcp:15000
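Because the update replaces the rule's allow list, the previously allowed ports must be passed again together with the two new ones. The following sketch shows one way to build that list; the helper name `kiali_allow_list` is hypothetical, and the existing list is assumed to be a comma-separated string such as `tcp:10250,tcp:443`.

```shell
# Hypothetical helper (an illustration only): append the ports Kiali needs
# to an existing comma-separated allow list, skipping entries that are
# already present.
kiali_allow_list() {
  local allow="$1" port
  for port in tcp:8080 tcp:15000; do
    case ",$allow," in
      *",$port,"*) ;;                       # already allowed, nothing to do
      *) allow="${allow:+$allow,}$port" ;;  # append, adding a comma if needed
    esac
  done
  printf '%s\n' "$allow"
}
```

The result can then be passed as the value of the `--allow` flag, for example `--allow "$(kiali_allow_list tcp:10250,tcp:443)"`.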

1.2.2 - Install via Helm

Introduction

Helm is a popular tool that lets you manage Kubernetes applications. Applications are defined in a package called a Helm chart, which contains all of the resources needed to run an application.

Kiali has a Helm Charts Repository at https://kiali.org/helm-charts. Two Helm Charts are provided:

- The kiali-operator Helm Chart installs the Kiali operator, which in turn installs Kiali when you create a Kiali CR.
- The kiali-server Helm Chart installs a standalone Kiali without the need for the Operator or a Kiali CR.

The kiali-server Helm Chart does not provide all the functionality that the Operator provides. Some features you read about in the documentation may only be available if you install Kiali using the Operator. Therefore, although the kiali-server Helm Chart is actively maintained, it is not recommended and is only provided for convenience. If using Helm, the recommended method is to install the kiali-operator Helm Chart and then create a Kiali CR to let the Operator deploy Kiali.

Make sure you have the helm command available by following the Helm installation docs.

Adding the Kiali Helm Charts repository

Add the Kiali Helm Charts repository with the following command:

$ helm repo add kiali https://kiali.org/helm-charts

helm commands in this page assume that you added the Kiali Helm Charts repository as shown.

If you already added the repository, you may want to update your local cache to fetch latest definitions by running:

$ helm repo update

Installing Kiali using the Kiali operator

Once you’ve added the Kiali Helm Charts repository, you can install the latest Kiali Operator along with the latest Kiali server by running the following command:

$ helm install \

--set cr.create=true \

--set cr.namespace=istio-system \

--set cr.spec.auth.strategy="anonymous" \

--namespace kiali-operator \

--create-namespace \

kiali-operator \

kiali/kiali-operator

The --namespace kiali-operator and --create-namespace flags instruct Helm to create the kiali-operator namespace (if needed) and deploy the Kiali operator in it. The --set cr.create=true and --set cr.namespace=istio-system flags instruct Helm to create a Kiali CR in the istio-system namespace. Since the Kiali CR is created in advance, the Kiali operator will process it and deploy Kiali as soon as the operator starts.

After Kiali has started, you can access the Kiali UI at http://localhost:20001 by executing the following command. No login is required because of --set cr.spec.auth.strategy="anonymous":

kubectl port-forward service/kiali -n istio-system 20001:20001

Be aware that anonymous mode allows anyone to see and use Kiali. If you wish to require users to authenticate themselves by logging into Kiali, use one of the other auth strategies.

The Kiali Operator Helm Chart is configurable. Check available options and default values by running:

$ helm show values kiali/kiali-operator

You can pass the --version X.Y.Z flag to the helm install and helm show values commands to work with a specific version of Kiali.

The kiali-operator Helm Chart mirrors all settings of the Kiali CR as chart values that you can configure using regular --set flags. For example, the Kiali CR has a spec.server.web_root setting which you can configure in the kiali-operator Helm Chart by passing --set cr.spec.server.web_root=/your-path to the helm install command.

For more information about the Kiali CR, see the Creating and updating the Kiali CR page.

Operator-Only Install

To install only the Kiali Operator, omit the --set cr.create and --set cr.namespace flags of the helm command previously shown. For example:

$ helm install \

--namespace kiali-operator \

--create-namespace \

kiali-operator \

kiali/kiali-operator

This will omit creation of the Kiali CR, which you will need to create later to install Kiali Server. This option is a good choice if you plan to customize the installation extensively.

Installing Multiple Instances of Kiali

By installing a single Kiali operator in your cluster, you can install multiple instances of Kiali by simply creating multiple Kiali CRs. For example, if you have two Istio control planes in namespaces istio-system and istio-system2, you can create a Kiali CR in each of those namespaces to install a Kiali instance in each control plane.

If you wish to install multiple Kiali instances in the same namespace, or if you need the Kiali instance to have different resource names than the default of kiali, you can specify spec.deployment.instance_name in your Kiali CR. The value for that setting will be used to create a unique instance of Kiali using that instance name rather than the default kiali. One use-case for this is to be able to have unique Kiali service names across multiple Kiali instances in order to be able to use certain routers/load balancers that require unique service names.

Since the spec.deployment.instance_name field is used for the Kiali resource names, including the Service name, you must ensure the value you assign to this setting follows the Kubernetes DNS Label Name rules. If it does not, the operator will abort the installation. Note also that because Kiali uses this value as a prefix (it may append additional characters for some resource names), its length is limited to 40 characters.
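The naming constraints can be checked before the Kiali CR is created. This is a minimal sketch, not part of Kiali or its operator: the function name `valid_instance_name` is hypothetical, and the pattern reflects the general Kubernetes DNS label rules (lowercase alphanumerics or `-`, alphanumeric at both ends) combined with the 40-character limit stated above.

```shell
# Hypothetical pre-flight check (an illustration only, not part of Kiali).
# Succeeds if $1 looks like a usable spec.deployment.instance_name value.
valid_instance_name() {
  local name="$1"
  # Kiali-specific limit: between 1 and 40 characters
  [ "${#name}" -ge 1 ] && [ "${#name}" -le 40 ] || return 1
  # DNS label: lowercase alphanumerics or '-', alphanumeric first and last
  printf '%s' "$name" | grep -Eq '^[a-z0-9]([a-z0-9-]*[a-z0-9])?$'
}
```

For example, `valid_instance_name kiali-east` succeeds, while `valid_instance_name Kiali` and `valid_instance_name -kiali` fail.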

Standalone Kiali installation

To install the Kiali Server without the operator, use the kiali-server Helm Chart:

$ helm install \

--namespace istio-system \

kiali-server \

kiali/kiali-server

The kiali-server Helm Chart mirrors all settings of the Kiali CR as chart values that you can configure using regular --set flags. For example, the Kiali CR has a spec.server.web_fqdn setting which you can configure in the kiali-server Helm Chart by passing the --set server.web_fqdn flag as follows:

$ helm install \

--namespace istio-system \

--set server.web_fqdn=example.com \

kiali-server \

kiali/kiali-server
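The two charts name their values differently: in the examples on this page, the kiali-operator chart prefixes the Kiali CR path with `cr.`, while the kiali-server chart drops the leading `spec.`. The sketch below only restates that pattern as code; the function name `cr_path_to_chart_value` is hypothetical and the rule is inferred from the two examples shown, so check `helm show values` for the authoritative names.

```shell
# Hypothetical helper (an illustration only): translate a Kiali CR setting
# path into the matching chart value name.
#   $1 = chart name (kiali-operator or kiali-server)
#   $2 = Kiali CR path, for example spec.server.web_root
cr_path_to_chart_value() {
  local chart="$1" path="$2"
  case "$chart" in
    kiali-operator) printf 'cr.%s\n' "$path" ;;       # operator chart: add "cr." prefix
    kiali-server)   printf '%s\n' "${path#spec.}" ;;  # server chart: drop leading "spec."
    *) echo "unknown chart: $chart" >&2; return 1 ;;
  esac
}
```

For example, `cr_path_to_chart_value kiali-operator spec.server.web_root` prints `cr.spec.server.web_root`, and `cr_path_to_chart_value kiali-server spec.server.web_fqdn` prints `server.web_fqdn`.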

Upgrading Helm installations

If you want to upgrade to a newer Kiali version (or downgrade to older versions), you can use the regular helm upgrade commands. For example, the following command should upgrade the Kiali Operator to the latest version:

$ helm upgrade \

--namespace kiali-operator \

--reuse-values \

kiali-operator \

kiali/kiali-operator

WARNING: No migration paths are provided. However, Kiali is a stateless application, so if the helm upgrade command fails, you can uninstall the previous version and then install the new desired version.

Managing configuration of Helm installations

After installing either the kiali-operator or the kiali-server Helm Charts, you may be tempted to manually edit the created resources to change the installation. However, we recommend using helm upgrade to update your installation.

For example, assuming you have the following installation:

$ helm list -n kiali-operator

NAME NAMESPACE REVISION UPDATED STATUS CHART APP VERSION

kiali-operator kiali-operator 1 2021-09-14 18:00:45.320351026 -0500 CDT deployed kiali-operator-1.40.0 v1.40.0

Notice that the current installation is version 1.40.0 of the kiali-operator. Let’s assume you want to use your own mirrors of the Kiali Operator container images. You can update your installation with the following command:

$ helm upgrade \

--namespace kiali-operator \

--reuse-values \

--set image.repo=your_mirror_registry_url/owner/kiali-operator-repo \

--set image.tag=your_mirror_tag \

--version 1.40.0 \

kiali-operator \

kiali/kiali-operator

Make sure that you specify the --reuse-values flag to take the configuration of your current installation. Then, you only need to specify the new settings you want to change using --set flags.

Make sure that you specify the --version X.Y.Z flag with the version of your current installation. Otherwise, you may end up upgrading to a new version.
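The version to pin can be read from the CHART column of the helm list output shown earlier. The following is a minimal sketch; the function name `chart_version` is hypothetical, and it assumes a plain `X.Y.Z` chart version (a version containing a hyphen, such as a pre-release suffix, would not be handled).

```shell
# Hypothetical helper (an illustration only): derive the chart version from
# the CHART column of `helm list`, for example
# "kiali-operator-1.40.0" -> "1.40.0".
chart_version() {
  local chart_col="$1"
  # remove everything up to and including the last '-'
  printf '%s\n' "${chart_col##*-}"
}
```

With the installation listed above, `--version "$(chart_version kiali-operator-1.40.0)"` expands to `--version 1.40.0`.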

Uninstalling

Removing the Kiali operator and managed Kialis

If you used the kiali-operator Helm chart, you must first ensure that all Kiali CRs are deleted. For example, the following command will aggressively delete all Kiali CRs in your cluster:

$ kubectl delete kiali --all --all-namespaces

The previous command may take some time to finish while the Kiali operator removes all Kiali installations.

Then, remove the Kiali operator using a standard helm uninstall command. For example:

$ helm uninstall --namespace kiali-operator kiali-operator

$ kubectl delete crd kialis.kiali.io

You have to manually delete the kialis.kiali.io CRD because Helm won’t delete it.

Known problem: uninstall hangs (unable to delete the Kiali CR)

Typically this happens if not all Kiali CRs are deleted prior to uninstalling the operator. To force deletion of a Kiali CR, you need to clear its finalizer. For example:

$ kubectl patch kiali kiali -n istio-system -p '{"metadata":{"finalizers": []}}' --type=merge

Removing standalone Kiali

If you installed a standalone Kiali by using the kiali-server Helm chart, use the standard helm uninstall commands. For example:

$ helm uninstall --namespace istio-system kiali-server

1.2.3 - Install via OperatorHub

Introduction

The OperatorHub is a website that contains a catalog of Kubernetes Operators. Its aim is to be the central location to find Operators.

The OperatorHub relies on the Operator Lifecycle Manager (OLM) to install, manage and update Operators on any Kubernetes cluster.

The Kiali Operator is published to the OperatorHub, so you can use the OLM to install and manage the Kiali Operator installation.

Installing the Kiali Operator using the OLM

Go to the Kiali Operator page in the OperatorHub: https://operatorhub.io/operator/kiali.

You will see an Install button at the right of the page. Press it and you will be presented with the installation instructions. Follow these instructions to install and manage the Kiali Operator installation using OLM.

Afterwards, you can create the Kiali CR to install Kiali.

Installing the Kiali Operator in OpenShift

The OperatorHub is bundled in the OpenShift console. To install the Kiali Operator, simply go to the OperatorHub in the OpenShift console and search for the Kiali Operator. Then, click on the Install button and follow the instructions on the screen.

Afterwards, you can create the Kiali CR to install Kiali.

1.2.4 - The Kiali CR

The Kiali Operator watches the Kiali Custom Resource (Kiali CR), a custom resource that contains the Kiali Server deployment configuration. Creating, updating, or removing a Kiali CR will trigger the Kiali Operator to install, update, or remove Kiali.

If you want the operator to re-process the Kiali CR (called “reconciliation”) without having to change the Kiali CR’s spec fields, you can modify any annotation on the Kiali CR itself. This will trigger the operator to reconcile the current state of the cluster with the desired state defined in the Kiali CR, modifying cluster resources if necessary to get them into their desired state. Here is an example illustrating how you can modify an annotation on a Kiali CR:

$ kubectl annotate kiali my-kiali -n istio-system --overwrite kiali.io/reconcile="$(date)"

The Operator provides comprehensive defaults for all properties of the Kiali CR. Hence, the minimal Kiali CR does not have a spec:

apiVersion: kiali.io/v1alpha1
kind: Kiali
metadata:
  name: kiali

Assuming you saved the previous YAML to a file named my-kiali-cr.yaml, and that you are installing Kiali in the same default namespace as Istio, create the resource with the following command:

$ kubectl apply -f my-kiali-cr.yaml -n istio-system

Once created, the Kiali Operator should shortly be notified and will process the resource, performing the Kiali installation. You can wait for the Kiali Operator to finish the reconciliation by using the standard kubectl wait command, asking it to wait for the Kiali CR to achieve the condition of Successful. For example:

kubectl wait --for=condition=Successful kiali kiali -n istio-system

You can check the installation progress by inspecting the status attribute of the created Kiali CR:

$ kubectl describe kiali kiali -n istio-system
Name:         kiali
Namespace:    istio-system
Labels:       <none>
Annotations:  <none>
API Version:  kiali.io/v1alpha1
Kind:         Kiali

  (...some output is removed...)

Status:
  Conditions:
    Last Transition Time:  2021-09-15T17:17:40Z
    Message:               Running reconciliation
    Reason:                Running
    Status:                True
    Type:                  Running
  Deployment:
    Instance Name:  kiali
    Namespace:      istio-system
  Environment:
    Is Kubernetes:       true
    Kubernetes Version:  1.21.2
    Operator Version:    v1.40.0
  Progress:
    Duration:  0:00:16
    Message:   5. Creating core resources
Events:        <none>

You may want to check the example install page to see some examples where the Kiali CR has a spec and to better understand its structure. Most available attributes of the Kiali CR are described in the pages of the Installation and Configuration sections of the documentation. For a complete list, see the Kiali CR Reference.

It is important to understand the spec.deployment.accessible_namespaces setting in the CR. See the Namespace Management page for more information.

Once you have created a Kiali CR, you can manage your Kiali installation by editing the resource using the usual Kubernetes tools:

$ kubectl edit kiali kiali -n istio-system

To confirm your Kiali CR is valid, you can utilize the Kiali CR validation tool.

1.2.5 - The OSSMConsole CR

OpenShift Service Mesh Console (aka OSSMC) provides a Kiali integration with the OpenShift Console; in other words, it provides Kiali functionality within the context of the OpenShift Console. OSSMC is applicable only within OpenShift environments.

The main component of OSSMC is a plugin that gets installed inside the OpenShift Console. Prior to installing this plugin, you are required to have already installed the Kiali Operator and Kiali Server in your OpenShift environment. Please see the Installation Guide for details.

The Kiali Operator watches the OSSMConsole Custom Resource (OSSMConsole CR), a custom resource that contains the OSSMC deployment configuration. Creating, updating, or removing an OSSMConsole CR will trigger the Kiali Operator to install, update, or remove OSSMC.

Creating the OSSMConsole CR to Install the OSSMC Plugin

With the Kiali Operator and Kiali Server installed and running, you can install the OSSMC plugin in one of two ways: via the OpenShift Console or via the “oc” CLI. Both methods are described below; choose whichever you prefer.

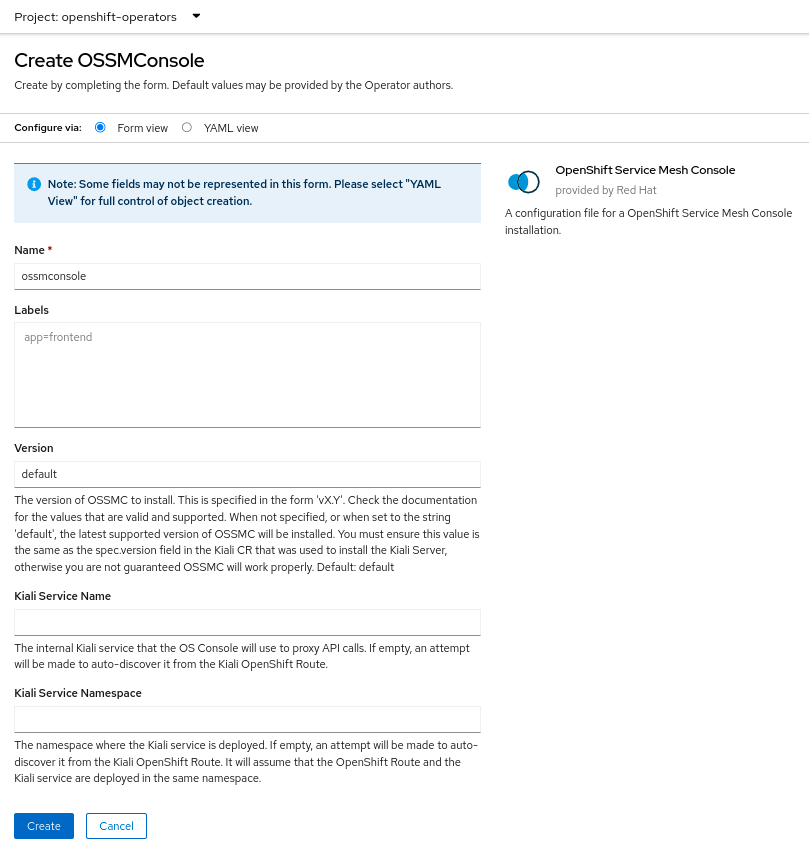

Installing via OpenShift Console

From the Kiali Operator details page in the OpenShift Console, create an instance of the “OpenShift Service Mesh Console” resource. Accept the defaults on the installation form and press “Create”.

Installing via “oc” CLI

To instruct the Kiali Operator to install the plugin, simply create a small OSSMConsole CR. A minimal CR can be created like this:

cat <<EOM | oc apply -f -
apiVersion: kiali.io/v1alpha1
kind: OSSMConsole
metadata:
  namespace: openshift-operators
  name: ossmconsole
EOM

Note that the operator will deploy the plugin resources in the same namespace where you create this OSSMConsole CR. In this case that is openshift-operators, but you can create the CR in any namespace.

For a complete list of configuration options available within the OSSMConsole CR, see the OSSMConsole CR Reference.

To confirm your OSSMConsole CR is valid, you can utilize the OSSMConsole CR validation tool.

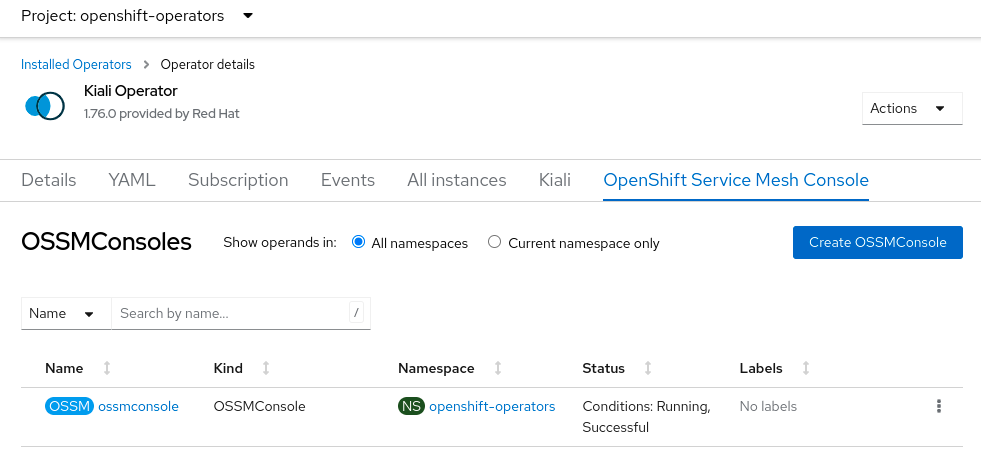

Installation Status

After the plugin is installed, you can see the “OSSMConsole” resource that was created in the OpenShift Console UI. Within the operator details page in the OpenShift Console UI, select the OpenShift Service Mesh Console tab to view the resource that was created and its status. The CR status field will provide you with any error messages should the deployment of OSSMC fail.

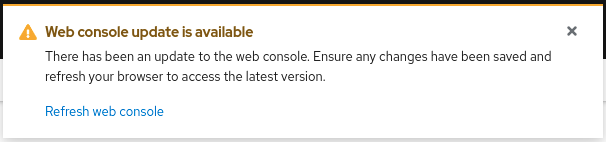

Once the operator has finished processing the OSSMConsole CR, you must then wait for the OpenShift Console to load and initialize the plugin. This may take a minute or two. You will know when the plugin is ready when the OpenShift Console pops up this message - when you see this message, refresh the browser window to reload the OpenShift Console:

Uninstalling OSSMC

This section will describe how to uninstall the OpenShift Service Mesh Console plugin. You can uninstall the plugin in one of two ways - either via the OpenShift Console or via the “oc” CLI. Both methods are described in the sections below. You choose the method you want to use.

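If deletion of an OSSMConsole CR hangs (as with the Kiali CR, this typically happens when the operator is uninstalled before the CR is deleted), you can force the deletion by clearing the CR's finalizer:

oc patch ossmconsoles <CR name> -n <CR namespace> -p '{"metadata":{"finalizers": []}}' --type=merge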

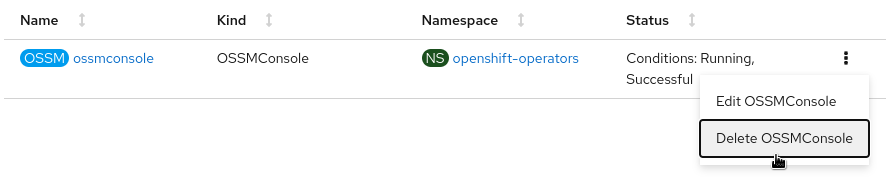

Uninstalling via OpenShift Console

Remove the OSSMConsole CR by navigating to the operator details page in the OpenShift Console UI. From the operator details page, select the OpenShift Service Mesh Console tab and then select the Delete option in the kebab menu.

Uninstalling via “oc” CLI

Remove the OSSMConsole CR via oc delete ossmconsoles <CR name> -n <CR namespace>. To make sure any and all CRs are deleted from any and all namespaces, you can run this command:

for r in $(oc get ossmconsoles --ignore-not-found=true --all-namespaces -o custom-columns=NS:.metadata.namespace,N:.metadata.name --no-headers | sed -E 's/ +/:/g'); do oc delete ossmconsoles -n $(echo $r|cut -d: -f1) $(echo $r|cut -d: -f2); done
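The same cleanup can be written without sed and cut by letting the shell split each "namespace name" line. The following is a minimal sketch under that assumption; the function name `ossmc_delete_commands` is hypothetical, and it prints the delete commands instead of running them so they can be reviewed first.

```shell
# Hypothetical helper (an illustration only): read "namespace name" lines on
# stdin, as printed by the oc get command above, and print one
# `oc delete` command per OSSMConsole CR.
ossmc_delete_commands() {
  while read -r ns name; do
    # skip blank or incomplete lines
    [ -n "$ns" ] && [ -n "$name" ] || continue
    printf 'oc delete ossmconsoles -n %s %s\n' "$ns" "$name"
  done
}
```

To use it, pipe the output of `oc get ossmconsoles --ignore-not-found=true --all-namespaces -o custom-columns=NS:.metadata.namespace,N:.metadata.name --no-headers` into `ossmc_delete_commands`, review the printed commands, and then pipe them to `sh` to execute them.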

1.2.6 - Accessing Kiali

Introduction

After Kiali is successfully installed, you will need to make Kiali accessible to users. This page describes some popular methods of exposing Kiali for use.

If exposing Kiali in a custom way, you may need to set some configurations to make Kiali aware of how users will access Kiali.

The examples on this page assume that Kiali is installed in the istio-system namespace.

Accessing Kiali using port forwarding

You can use port-forwarding to access Kiali by running any of these commands:

# If you have oc command line tool

oc port-forward svc/kiali 20001:20001 -n istio-system

# If you have kubectl command line tool

kubectl port-forward svc/kiali 20001:20001 -n istio-system

These commands will block. Access Kiali by visiting https://localhost:20001/ in your preferred web browser.

Accessing Kiali through an Ingress

You can configure Kiali to be installed with an Ingress resource defined, allowing you to access the Kiali UI through the Ingress. By default, an Ingress will not be created. You can enable a simple Ingress by setting spec.deployment.ingress.enabled to true in the Kiali CR (a similar setting for the server Helm chart is available if you elect to install Kiali via Helm as opposed to the Kiali Operator).

The spec.deployment.ingress mechanism is a convenient way of exposing Kiali externally, but it will not necessarily work or be the best approach, because the right way to expose Kiali depends heavily on your specific cluster environment and on how services are generally exposed in that environment.

On OpenShift, a Route is created by default unless you explicitly set spec.deployment.ingress.enabled: false. Note that the Route is required if you configure Kiali to use the auth strategy of openshift (which is the default auth strategy Kiali will use when installed on OpenShift).

The default Ingress that is created will be configured for a typical NGINX implementation. If you want to use your own Ingress implementation, you can override the default configuration through the settings spec.deployment.ingress.override_yaml and spec.deployment.ingress.class_name. More details on customizing the Ingress can be found below.

The Ingress IP or domain name should then be used to access the Kiali UI. To find your Ingress IP or domain name, as per the Kubernetes documentation, try the following command (though this may not work if using Minikube without the ingress addon):

kubectl get ingress kiali -n istio-system -o jsonpath='{.status.loadBalancer.ingress[0].ip}'

If that command returns nothing, the right approach depends on how and where you set up your cluster. There are several Ingress controllers available, and some cloud providers have their own controller or preferred exposure method. Check the documentation of your cloud provider. You may need to customize the pre-installed Ingress rule or expose Kiali using a different method.
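The jsonpath query above reads only the ip field, while some load balancers report a hostname instead. The following is a minimal Python sketch, not part of Kiali, that reads either field from the JSON form of the Ingress (as printed by kubectl get ingress kiali -n istio-system -o json). The helper name ingress_address and the sample values are made up for illustration.

```python
import json
from typing import Optional


def ingress_address(ingress: dict) -> Optional[str]:
    """Return the first load-balancer IP or hostname of an Ingress, if any."""
    entries = ingress.get("status", {}).get("loadBalancer", {}).get("ingress") or []
    for entry in entries:
        # Some providers publish "ip", others publish "hostname".
        address = entry.get("ip") or entry.get("hostname")
        if address:
            return address
    return None


# Hypothetical sample shaped like the status of an Ingress resource.
sample = json.loads(
    '{"status": {"loadBalancer": {"ingress": [{"hostname": "lb.example.com"}]}}}'
)
print(ingress_address(sample))
```

A result of None means that no address has been published yet, which is the case the paragraph above describes.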

Customizing the Ingress resource

The created Ingress resource will route traffic to Kiali regardless of the domain in the URL. You may need a more specific Ingress resource that routes traffic to Kiali only on a specific domain or path. To do this, you can specify route settings.

Alternatively, and for more advanced Ingress configurations, you can provide your own Ingress declaration in the Kiali CR. On OpenShift, deployment.ingress.override_yaml will be applied to the created Route, and deployment.ingress.class_name is ignored. For example:

spec:
  deployment:
    ingress:
      class_name: "nginx"
      enabled: true
      override_yaml:
        metadata:
          annotations:
            nginx.ingress.kubernetes.io/secure-backends: "true"
            nginx.ingress.kubernetes.io/backend-protocol: "HTTPS"
        spec:
          rules:
          - http:
              paths:
              - path: /kiali
                backend:
                  serviceName: kiali
                  servicePort: 20001

Accessing Kiali in Minikube

If you enabled the Ingress controller, the default Ingress resource created by the installation (mentioned in the previous section) should be enough to access Kiali. The following command should open Kiali in your default web browser:

xdg-open https://$(minikube ip)/kiali

Accessing Kiali through a LoadBalancer or a NodePort

By default, the Kiali service is created with the ClusterIP type. To use a

LoadBalancer or a NodePort, you can change the service type in the Kiali CR as

follows:

spec:
  deployment:
    service_type: LoadBalancer

Once the Kiali operator updates the installation, you should be able to use

the kubectl get svc -n istio-system kiali command to retrieve the external

address (or port) to access Kiali. For example, in the following output Kiali

is assigned the IP 192.168.49.201, which means that you can access Kiali by

visiting http://192.168.49.201:20001 in a browser:

NAME    TYPE           CLUSTER-IP       EXTERNAL-IP      PORT(S)                          AGE
kiali   LoadBalancer   10.105.236.127   192.168.49.201   20001:31966/TCP,9090:30128/TCP   34d

If you are using the LoadBalancer service type to directly expose the Kiali service, you may want to check the available options for the HTTP Server and Metrics server.

Accessing Kiali through an Istio Ingress Gateway

If you want to take advantage of Istio’s infrastructure, you can expose Kiali using an Istio Ingress Gateway. The Istio documentation provides a good guide explaining how to expose the sample add-ons. Even if the Istio guide is focused on the sample add-ons, the steps are the same to expose a Kiali installed using this Installation guide.

Accessing Kiali in OpenShift

By default, Kiali is exposed through a Route if installed on OpenShift. The following command should open Kiali in your default web browser:

xdg-open https://$(oc get routes -n istio-system kiali -o jsonpath='{.spec.host}')/console

Specifying route settings

If you are using your own exposure method or if you are using one of the methods mentioned in this page, you may need to configure the route that is being used to access Kiali.

In the Kiali CR, route settings are split into several attributes. For example, to specify that Kiali is being accessed under the https://apps.example.com:8080/dashboards/kiali URI, you would need to set the following:

spec:
  server:
    web_fqdn: apps.example.com
    web_port: 8080
    web_root: /dashboards/kiali
    web_schema: https

If you are letting the installation create an Ingress resource for you, the Ingress will be adjusted to match these route settings. If you are using your own exposure method, these spec.server settings only make Kiali aware of what its public endpoint is.

It is possible to omit these settings and Kiali may be able to discover some of

these configurations, depending on your exposure method. For example, if you

are exposing Kiali via LoadBalancer or NodePort service types, Kiali can

discover most of these settings. If you are using some kind of Ingress, Kiali

will honor X-Forwarded-Proto, X-Forwarded-Host and X-Forwarded-Port HTTP

headers if they are properly injected in the request.
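As an illustration of how forwarded headers can describe the public endpoint, here is a minimal Python sketch. It is not Kiali's actual implementation: the function name public_endpoint and the fallback values are assumptions chosen for the example, and only the three header names come from the text above.

```python
def public_endpoint(headers, default_scheme="http", default_host="localhost", default_port=20001):
    """Derive (scheme, host, port) from X-Forwarded-* headers, with fallbacks."""
    # HTTP header names are case-insensitive, so normalize them first.
    lowered = {name.lower(): value for name, value in headers.items()}
    scheme = lowered.get("x-forwarded-proto", default_scheme)
    host = lowered.get("x-forwarded-host", default_host)
    port = int(lowered.get("x-forwarded-port", default_port))
    return scheme, host, port


# Hypothetical headers injected by an Ingress in front of Kiali.
print(public_endpoint({
    "X-Forwarded-Proto": "https",
    "X-Forwarded-Host": "apps.example.com",
    "X-Forwarded-Port": "8080",
}))
```

If the proxy does not inject these headers, the sketch falls back to its defaults, which is why explicit spec.server settings are the safer choice in that situation.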

The web_root receives special treatment, because this is the path where Kiali will serve itself (both the user interface and its API). This is useful if you are serving multiple applications under the same domain. It must begin with a slash and must not end with a trailing slash. The default value is /kiali for Kubernetes and / for OpenShift.
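The web_root rules and the way the four route settings combine into a public URL can be sketched in Python as follows. This is an illustrative check only, not code from Kiali; the function names are hypothetical, and the bare / root is accepted because it is the OpenShift default.

```python
def validate_web_root(web_root: str) -> None:
    """Raise ValueError if web_root breaks the documented rules."""
    if not web_root.startswith("/"):
        raise ValueError("web_root must begin with a slash")
    # A bare "/" is valid; any other value must not end with a slash.
    if web_root != "/" and web_root.endswith("/"):
        raise ValueError("web_root must not end with a slash")


def public_url(web_schema: str, web_fqdn: str, web_port: int, web_root: str) -> str:
    """Combine the spec.server route settings into the public URL."""
    validate_web_root(web_root)
    return f"{web_schema}://{web_fqdn}:{web_port}{web_root}"


print(public_url("https", "apps.example.com", 8080, "/dashboards/kiali"))
```

With the values from the example above, the sketch yields the same https://apps.example.com:8080/dashboards/kiali URI.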

1.2.7 - Advanced Install

Multiple Istio control planes in the same cluster

Currently, Kiali can manage only one Istio control plane. However, there are certain cases where you may have more than one Istio control plane in your cluster. One such case is when performing a Canary upgrade of Istio.

In these cases, you will need to tell Kiali which control plane you want to manage. This is done by configuring the names of the components of the control plane in the Kiali CR. The default values are the following:

spec:
  external_services:
    istio:
      config_map_name: "istio"
      istiod_deployment_name: "istiod"
      istio_sidecar_injector_config_map_name: "istio-sidecar-injector"

If you want to manage both Istio control planes, simply install two Kiali instances and point each one to a different Istio control plane.

Installing a Kiali Server of a different version than the Operator

When you install the Kiali Operator, it will be configured to install a Kiali Server that is the same version as the operator itself. For example, if you have Kiali Operator v1.34.0 installed, that operator will install Kiali Server v1.34.0. If you upgrade (or downgrade) the Kiali Operator, the operator will in turn upgrade (or downgrade) the Kiali Server.

There are certain use cases in which you want the Kiali Operator to install a Kiali Server whose version is different from the operator version. Read the following «Using a custom image registry» section to learn how to configure this setup.

Using a custom image registry

Kiali is released and published to the Quay.io container image registry. There is a repository hosting the Kiali operator images and another one for the Kiali server images.

If you need to mirror the Kiali container images to some other registry, you still can use Helm to install the Kiali operator as follows:

$ helm install \
    --namespace kiali-operator \
    --create-namespace \
    --set image.repo=your.custom.registry/owner/kiali-operator-repo \
    --set image.tag=your_custom_tag \
    --set allowAdHocKialiImage=true \
    kiali-operator \
    kiali/kiali-operator

Notice the --set allowAdHocKialiImage=true option, which allows specifying a custom image in the Kiali CR. For security reasons, this is disabled by default.

Then, when creating the Kiali CR, use the following attributes:

spec:
  deployment:
    image_name: your.custom.registry/owner/kiali-server-repo
    image_version: your_custom_tag

Change the default image

As explained earlier, when you install the Kiali Operator, it will be

configured to install a Kiali Server whose image will be pulled from quay.io

and whose version will be the same as the operator. You can ask the operator to

use a different image by setting spec.deployment.image_name and

spec.deployment.image_version within the Kiali CR (as explained above).

However, you may wish to alter this default behavior exhibited by the operator.

In other words, you may want the operator to install a different Kiali Server

image by default. For example, if you have an air-gapped environment with its

own image registry that contains its own copy of the Kiali Server image, you

will want the operator to install a Kiali Server that uses that image by

default, as opposed to quay.io/kiali/kiali. By configuring the operator to do

this, you will not force the authors of Kiali CRs to have to explicitly define

the spec.deployment.image_name setting and you will not need to enable the

allowAdHocKialiImage setting in the operator.

To change the default Kiali Server image installed by the operator, set the

environment variable RELATED_IMAGE_kiali_default in the Kiali Operator

deployment. The value of that environment variable must be the full image tag

in the form repoName/orgName/imageName:versionString (e.g.

my.internal.registry.io/mykiali/mykialiserver:v1.50.0). You can do this when

you install the operator via helm:

$ helm install \
    --namespace kiali-operator \
    --create-namespace \
    --set "env[0].name=RELATED_IMAGE_kiali_default" \
    --set "env[0].value=my.internal.registry.io/mykiali/mykialiserver:v1.50.0" \
    kiali-operator \
    kiali/kiali-operator
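The repoName/orgName/imageName:versionString form corresponds to the two separate Kiali CR settings, spec.deployment.image_name and spec.deployment.image_version. The following Python sketch shows one way to check a value and split it into those two parts before using it. The helper split_image_reference is hypothetical and is not part of Kiali or Helm.

```python
def split_image_reference(reference: str):
    """Split 'registry/org/image:version' into (image name, version)."""
    repository, separator, version = reference.rpartition(":")
    # A "/" after the last colon means the colon belonged to a registry port,
    # so the reference carries no version tag.
    if not separator or not repository or not version or "/" in version:
        raise ValueError(f"missing version tag in {reference!r}")
    return repository, version


name, version = split_image_reference(
    "my.internal.registry.io/mykiali/mykialiserver:v1.50.0"
)
print(name)
print(version)
```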

Development Install

This option installs the latest Kiali Operator and Kiali Server images which are built from the master branches of Kiali GitHub repositories. This option is good for demo and development installations.

helm install \
    --set cr.create=true \
    --set cr.namespace=istio-system \
    --set cr.spec.deployment.image_version=latest \
    --set image.tag=latest \
    --namespace kiali-operator \
    --create-namespace \
    kiali-operator \
    kiali/kiali-operator

1.2.8 - Example Install

This is a quick example of installing Kiali. This example will install the operator and two Kiali Servers - one server will require the user to enter credentials at a login screen in order to obtain read-write access and the second server will allow anonymous read-only access.

For this example, assume there is a Minikube Kubernetes cluster running with an

Istio control plane installed in the namespace istio-system and

the Istio Bookinfo Demo installed in the namespace bookinfo:

$ kubectl get deployments.apps -n istio-system
NAME                   READY   UP-TO-DATE   AVAILABLE   AGE
grafana                1/1     1            1           8h
istio-egressgateway    1/1     1            1           8h
istio-ingressgateway   1/1     1            1           8h
istiod                 1/1     1            1           8h
jaeger                 1/1     1            1           8h
prometheus             1/1     1            1           8h

$ kubectl get deployments.apps -n bookinfo
NAME             READY   UP-TO-DATE   AVAILABLE   AGE
details-v1       1/1     1            1           21m
productpage-v1   1/1     1            1           21m
ratings-v1       1/1     1            1           21m
reviews-v1       1/1     1            1           21m
reviews-v2       1/1     1            1           21m
reviews-v3       1/1     1            1           21m

Install Kiali Operator via Helm Chart

First, the Kiali Operator will be installed in the kiali-operator namespace using the operator helm chart:

$ helm repo add kiali https://kiali.org/helm-charts

$ helm repo update kiali

$ helm install \
    --namespace kiali-operator \
    --create-namespace \
    kiali-operator \
    kiali/kiali-operator

Install Kiali Server via Operator

Next, the first Kiali Server will be installed. This server will require the user to enter a Kubernetes token in order to log into the Kiali dashboard and will provide the user with read-write access. To do this, a Kiali CR will be created that looks like this (file: kiali-cr-token.yaml):

apiVersion: kiali.io/v1alpha1
kind: Kiali
metadata:
  name: kiali
  namespace: istio-system
spec:
  auth:
    strategy: "token"
  deployment:
    accessible_namespaces: ["bookinfo"]
    view_only_mode: false
  server:
    web_root: "/kiali"

This Kiali CR will command the operator to deploy the Kiali Server in the same namespace where the Kiali CR is (istio-system). The operator will configure the server to: respond to requests to the web root path of /kiali, enable read-write access, use the authentication strategy of token, and be given access to the bookinfo namespace:

$ kubectl apply -f kiali-cr-token.yaml

Get the Status of the Installation

The status of a particular Kiali Server installation can be found by examining the status field of its corresponding Kiali CR. For example:

$ kubectl get kiali kiali -n istio-system -o jsonpath='{.status}'

When the installation has successfully completed, the status field will look something like this (when formatted):

$ kubectl get kiali kiali -n istio-system -o jsonpath='{.status}' | jq

{
  "conditions": [
    {
      "ansibleResult": {
        "changed": 21,
        "completion": "2021-10-20T19:17:35.519131",
        "failures": 0,
        "ok": 102,
        "skipped": 90
      },
      "lastTransitionTime": "2021-10-20T19:17:12Z",
      "message": "Awaiting next reconciliation",
      "reason": "Successful",
      "status": "True",
      "type": "Running"
    }
  ],
  "deployment": {
    "accessibleNamespaces": "bookinfo,istio-system",
    "instanceName": "kiali",
    "namespace": "istio-system"
  },
  "environment": {
    "isKubernetes": true,
    "kubernetesVersion": "1.20.2",
    "operatorVersion": "v1.41.0"
  },
  "progress": {
    "duration": "0:00:20",
    "message": "7. Finished all resource creation"
  }
}
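If you want to check this status from a script, the following Python sketch inspects the same fields shown in the sample output. Treating a Running condition with status True, reason Successful and zero Ansible failures as success is an assumption drawn from that sample, not a documented contract. The function name installation_succeeded is hypothetical.

```python
import json


def installation_succeeded(status: dict) -> bool:
    """Return True if the 'Running' condition looks like the successful sample."""
    for condition in status.get("conditions", []):
        if condition.get("type") == "Running":
            failures = condition.get("ansibleResult", {}).get("failures", 0)
            return (
                condition.get("status") == "True"
                and condition.get("reason") == "Successful"
                and failures == 0
            )
    return False


# Trimmed copy of the status shown above.
status = json.loads("""
{
  "conditions": [
    {
      "ansibleResult": {"failures": 0},
      "reason": "Successful",
      "status": "True",
      "type": "Running"
    }
  ]
}
""")
print(installation_succeeded(status))
```

In practice, the status dictionary would come from parsing the output of the kubectl command shown earlier.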

Access the Kiali Server UI

The Kiali Server UI is accessed by pointing a browser to the Kiali Server endpoint and requesting the web root /kiali:

xdg-open http://$(minikube ip)/kiali

Because the auth.strategy was set to token, that URL will display the Kiali login screen that will require a Kubernetes token in order to authenticate with the server. For this example, you can use the token that belongs to the Kiali service account itself:

$ kubectl get secret -n istio-system $(kubectl get sa kiali-service-account -n istio-system -o jsonpath='{.secrets[0].name}') -o jsonpath='{.data.token}' | base64 -d

The output of that command above can be used to log into the Kiali login screen.

Install a Second Kiali Server

The second Kiali Server will next be installed. This server will not require the user to enter any login credentials but will only provide a view-only look at the service mesh. To do this, a Kiali CR will be created that looks like this (file: kiali-cr-anon.yaml):

apiVersion: kiali.io/v1alpha1
kind: Kiali
metadata:
  name: kiali
  namespace: kialianon
spec:
  installation_tag: "Kiali - View Only"
  istio_namespace: "istio-system"
  auth:
    strategy: "anonymous"
  deployment:
    accessible_namespaces: ["bookinfo"]
    view_only_mode: true
    instance_name: "kialianon"
  server:
    web_root: "/kialianon"

This Kiali CR will command the operator to deploy the Kiali Server in the same namespace where the Kiali CR is (kialianon). The operator will configure the server to: respond to requests to the web root path of /kialianon, disable read-write access, not require the user to authenticate, have a unique instance name of kialianon and be given access to the bookinfo namespace. The Kiali UI will also show a custom title in the browser tab so the user is aware they are looking at a “view only” Kiali dashboard. The unique deployment.instance_name is needed in order for this Kiali Server to be able to share access to the Bookinfo application with the first Kiali Server.

$ kubectl create namespace kialianon

$ kubectl apply -f kiali-cr-anon.yaml

The UI for this second Kiali Server is accessed by pointing a browser to the Kiali Server endpoint and requesting the web root /kialianon. Note that no credentials are required to gain access to this Kiali Server UI because auth.strategy was set to anonymous; however, the user will not be able to modify anything via the Kiali UI - it is strictly “view only”:

xdg-open http://$(minikube ip)/kialianon

Reconfigure Kiali Server

A Kiali Server can be reconfigured by simply editing its Kiali CR. The Kiali Operator will perform all the necessary tasks to complete the reconfiguration and restart the Kiali Server pod when necessary. For example, to change the web root for the Kiali Server:

$ kubectl patch kiali kiali -n istio-system --type merge --patch '{"spec":{"server":{"web_root":"/specialkiali"}}}'

The Kiali Operator will update the necessary resources (such as the Kiali ConfigMap) and will restart the Kiali Server pod to pick up the new configuration.
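The --type merge option of kubectl patch applies a JSON Merge Patch (RFC 7386): keys in the patch overwrite matching keys in the resource, and everything else is left untouched. The following Python sketch of that merge rule shows why only web_root changes. It illustrates the algorithm and is not what kubectl runs internally; the spec dictionary is a trimmed, hypothetical copy of the example CR.

```python
def merge_patch(target, patch):
    """Apply a JSON Merge Patch (RFC 7386) and return the merged result."""
    if not isinstance(patch, dict):
        return patch  # scalars and lists replace the target wholesale
    result = dict(target) if isinstance(target, dict) else {}
    for key, value in patch.items():
        if value is None:
            result.pop(key, None)  # null removes the key
        else:
            result[key] = merge_patch(result.get(key), value)
    return result


spec = {"auth": {"strategy": "token"}, "server": {"web_root": "/kiali"}}
patch = {"server": {"web_root": "/specialkiali"}}
print(merge_patch(spec, patch))
```

The auth settings survive the patch because the merge only descends into the keys that the patch mentions.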

Uninstall Kiali Server

To uninstall a Kiali Server installation, simply delete the Kiali CR. The Kiali Operator will then perform all the necessary tasks to remove all remnants of the associated Kiali Server.

kubectl delete kiali kiali -n istio-system

Uninstall Kiali Operator

To uninstall the Kiali Operator, first delete all Kiali CRs so that the operator can remove the associated Kiali Servers, then use helm uninstall, and finally remove the Kiali CRD manually.

$ kubectl delete kiali --all --all-namespaces

$ helm uninstall --namespace kiali-operator kiali-operator

$ kubectl delete crd kialis.kiali.io

1.3 - Deployment Options

There are other, more complex deployment settings, described in dedicated pages:

- Authentication

- Customization of the Ingress resource

- Customization of the service type (LoadBalancer or NodePort)

- Namespace management (configuring namespaces accessible and visible to Kiali)

Kiali and Istio installation namespaces

By default, the Kiali operator installs Kiali in the same namespace where the Kiali CR is created. However, it is possible to specify a different namespace for installation:

spec:
  deployment:
    namespace: "custom-kiali-namespace"

It is assumed that Kiali is installed in the same namespace as Istio. Kiali reads some Istio resources and may not work properly if those resources are not found. Thus, if you are installing Kiali and Istio in different namespaces, you must specify the Istio namespace:

spec:
  istio_namespace: "istio-system"

Log level and format

By default, Kiali will print up to INFO-level messages in simple text format.

You can change the log level, output format, and time format as in the

following example:

spec:
  deployment:
    logger:
      # Supported values are "trace", "debug", "info", "warn", "error" and "fatal"
      log_level: error
      # Supported values are "text" and "json".
      log_format: json
      time_field_format: "2006-01-02T15:04:05Z07:00"

The syntax for the time_field_format is the same as the Time.Format

function of the Go language.

The json format is useful if you are parsing logs of your applications for

further processing.
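As a rough illustration of such processing, the Python sketch below reads log lines and keeps only entries at or above a chosen level. The field names level and message, and the sample log lines, are assumptions made for this example; check the actual output of your Kiali pod before relying on them.

```python
import json

# Levels in increasing severity, as listed for log_level above.
LEVELS = ["trace", "debug", "info", "warn", "error", "fatal"]


def filter_logs(lines, min_level="warn"):
    """Return parsed JSON log entries whose level is at least min_level."""
    threshold = LEVELS.index(min_level)
    selected = []
    for line in lines:
        try:
            entry = json.loads(line)
        except json.JSONDecodeError:
            continue  # skip lines that are not JSON
        if not isinstance(entry, dict):
            continue
        level = entry.get("level", "info")  # assumed field name
        if level in LEVELS and LEVELS.index(level) >= threshold:
            selected.append(entry)
    return selected


# Made-up sample lines, for illustration only.
sample = [
    '{"level": "info", "message": "server started"}',
    '{"level": "error", "message": "cannot reach Prometheus"}',
    "plain text line",
]
for entry in filter_logs(sample):
    print(entry["level"], entry["message"])
```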

In Kiali, there are some special logs called audit logs that are emitted each time a user creates, updates or deletes a resource through Kiali. Audit logs are INFO-level messages and are enabled by default. If audit logs are too verbose, you can disable them without reducing the log level as follows:

spec:
  server:
    audit_log: false

Kiali instance name

If you plan to install more than one Kiali instance on the same cluster, you may need to configure an instance name to avoid conflicts on created resources:

spec:
  deployment:
    instance_name: "secondary"

The instance_name will be used as a prefix for all created Kiali resources.

The exception is the kiali-signing-key secret which will always have

the same name and will be shared on all deployments of the same namespace,

unless you specify a custom secret name.

Because instance_name will be used as a name prefix in resources, it must follow Kubernetes naming constraints.

You cannot change the instance_name of an existing Kiali installation. The workaround is to uninstall Kiali and re-install with the desired instance_name.
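Since the instance name cannot be changed after installation, it can be worth checking it up front. The Python sketch below tests a name against the Kubernetes DNS label rule (lowercase alphanumerics and hyphens, starting and ending with an alphanumeric, at most 63 characters). Using that rule here is an assumption: because the name is only a prefix, the effective limit may be stricter. The helper is_valid_instance_name is hypothetical.

```python
import re

# Kubernetes DNS label rule (RFC 1123), used here as an approximation.
_DNS_LABEL = re.compile(r"[a-z0-9]([-a-z0-9]*[a-z0-9])?")


def is_valid_instance_name(name: str) -> bool:
    """Return True if name is a valid DNS label of at most 63 characters."""
    return len(name) <= 63 and _DNS_LABEL.fullmatch(name) is not None


for candidate in ("secondary", "kialianon", "Kiali_West", "-kiali"):
    print(candidate, is_valid_instance_name(candidate))
```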

Resource requests and limits

You can set the amount of resources available to Kiali using the

spec.deployment.resources attribute, like in the following example:

spec:
  deployment:
    resources:
      requests:
        memory: "128Mi"
        cpu: "100m"
      limits:
        memory: "1Gi"
        cpu: "500m"

Read the Managing Resources for Containers section in the Kubernetes documentation for more details about possible configurations.

Custom labels and annotations on the Kiali pod and service

Although some labels and annotations are set on the Kiali pod and on its

service (depending on configurations), you can add additional ones. For the

pod, use the spec.deployment.pod_labels and spec.deployment.pod_annotations

attributes. For the service, you can only add annotations using the

spec.deployment.service_annotations attribute. For example:

spec:
  deployment:
    pod_annotations:
      a8r.io/repository: "https://github.com/kiali/kiali"
    pod_labels:
      sidecar.istio.io/inject: "true"
    service_annotations:
      a8r.io/documentation: "https://kiali.io/docs/installation/deployment-configuration"

Kiali page title (browser title bar)

If you have several Kiali installations and use them at the same time, you will likely want to identify each Kiali by simply looking at the browser’s title bar. You can set custom text in the title bar with the following configuration:

spec:
  installation_tag: "Kiali West"

The installation_tag can be any human-readable text you choose.

Kubernetes scheduler settings

Replicas and automatic scaling

By default, only one replica of Kiali is deployed. If needed, you can change the replica count like in the following example:

spec:
  deployment:
    replicas: 2

If you prefer automatic scaling, creation of a HorizontalPodAutoscaler resource is supported. For example, the following configuration automatically scales Kiali based on CPU utilization:

spec:
  deployment:
    hpa:
      api_version: "autoscaling/v1"
      spec:
        minReplicas: 1
        maxReplicas: 2
        targetCPUUtilizationPercentage: 80

Do not set the scaleTargetRef field of the HPA spec, because this field will be populated by the Kiali operator (or by Helm) depending on other configuration.

Read the Kubernetes Horizontal Pod Autoscaler documentation to learn more about the HPA.
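To get a feel for how the example HPA settings behave, the Python sketch below applies the basic scaling rule from the Kubernetes documentation: the desired replica count is the current count multiplied by the ratio of observed to target utilization, rounded up, then clamped to the configured bounds. It deliberately ignores the tolerance and stabilization behavior of the real controller, and the function name desired_replicas is hypothetical.

```python
import math


def desired_replicas(current_replicas, current_cpu_percent,
                     target_cpu_percent=80, min_replicas=1, max_replicas=2):
    """Simplified HPA rule: ceil(current * observed / target), clamped."""
    desired = math.ceil(current_replicas * current_cpu_percent / target_cpu_percent)
    return max(min_replicas, min(max_replicas, desired))


# One replica at 120% of its CPU request scales up to two.
print(desired_replicas(1, 120))
# Two replicas at 30% scale back down to one.
print(desired_replicas(2, 30))
```

The defaults mirror the minReplicas, maxReplicas and targetCPUUtilizationPercentage values from the example configuration.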

Allocating the Kiali pod to specific nodes of the cluster

You can constrain the Kiali pod to run on a specific set of nodes by using some of the standard Kubernetes scheduler configurations.

The simplest option is to use the nodeSelector configuration, which you can configure like in the following example:

spec:
  deployment:
    node_selector:
      worker-type: infra

You can also use the affinity/anti-affinity native Kubernetes feature if you prefer its more expressive syntax, or if you need more complex matching rules. The following is an example for configuring node affinity:

spec:
  deployment:
    affinity:
      node:
        requiredDuringSchedulingIgnoredDuringExecution:
          nodeSelectorTerms:
          - matchExpressions:
            - key: worker-type
              operator: In
              values:
              - infra

Similarly, you can also configure pod affinity and pod anti-affinity using the

spec.deployment.affinity.pod and spec.deployment.affinity.pod_anti

attributes.

Finally, if you want to run Kiali in a node with taints, the following is an example to configure tolerations:

spec:
  deployment:
    tolerations: # Allow Kiali to run on a tainted master node
    - key: "node-role.kubernetes.io/master"
      operator: "Exists"
      effect: "NoSchedule"

Read the Kubernetes documentation on node selectors, affinity and anti-affinity, and taints and tolerations to learn more about these configurations.

Priority class of the Kiali pod

If you are using priority classes in your cluster, you can specify the priority class that will be set on the Kiali pod. For example:

spec:
  deployment:
    priority_class_name: high-priority

For more information about priority classes, read Pod Priority and Preemption in the Kubernetes documentation.

Adding host aliases to the Kiali pod

If you need to provide some static hostname resolution in the Kiali pod, you can use HostAliases to add entries to the /etc/hosts file of the Kiali pod, like in the following example:

spec:
  deployment:
    host_aliases:
    - ip: 192.168.1.100
      hostnames:
      - "foo.local"
      - "bar.local"

HTTP server

Kiali is served over HTTP. You can configure a few options of the HTTP server. The following are the defaults, but you can change them to suit your needs.

spec:
  server:
    # Listen/bind address of the HTTP server. By default it is empty, which means to
    # listen on all interfaces.
    address: ""
    # Listening port of the HTTP server. If you change it, Kiali's Kubernetes
    # Service is also updated to use this port.
    port: 20001
    # Use GZip compression for responses larger than 1400 bytes. You may want to disable
    # compression if you are exposing Kiali via a reverse proxy that is already
    # doing compression.
    gzip_enabled: true
    # For development purposes only. Controls if "Cross-Origin Resource Sharing" is
    # enabled.
    cors_allow_all: false

There is one additional spec.server.web_root option that affects the HTTP server, but that one is described in the Specifying route settings section of the Installation guide.

Metrics server

Kiali emits metrics that can be collected by Prometheus. Most of these metrics are performance measurements.

The metrics server is enabled by default and listens on port 9090:

spec:
  server:
    metrics_enabled: true
    metrics_port: 9090

The bind address is the same as the HTTP server. Thus, make sure that the HTTP Server and the metrics server are not configured to the same port.
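A simple pre-flight check for this port constraint could look like the following Python sketch. It works on a plain dictionary mirroring the spec.server section and uses the defaults documented above. The function check_ports is hypothetical and not part of Kiali or its operator.

```python
def check_ports(server: dict) -> None:
    """Raise ValueError if the HTTP and metrics servers would share a port."""
    port = server.get("port", 20001)
    metrics_enabled = server.get("metrics_enabled", True)
    metrics_port = server.get("metrics_port", 9090)
    # The conflict only matters when the metrics server is actually enabled.
    if metrics_enabled and port == metrics_port:
        raise ValueError(f"port {port} is used by both the HTTP and metrics servers")


check_ports({"port": 20001, "metrics_port": 9090})  # passes silently
try:
    check_ports({"port": 9090})
except ValueError as error:
    print(error)
```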

2 - Configuration

The pages in this Configuration section describe most available options for managing and customizing your Kiali installation.

Unless noted, it is assumed that you are using the Kiali operator and that you are managing the Kiali installation through a Kiali CR. The provided YAML snippets for configuring Kiali should be placed in your Kiali CR. For example, the provided configuration snippet for setting up the Anonymous authentication strategy is the following:

spec:
  auth:
    strategy: anonymous

You will need to take this YAML snippet and apply it to your Kiali CR. As an example, an almost minimal Kiali CR using the previous configuration snippet would be the following:

apiVersion: kiali.io/v1alpha1
kind: Kiali
metadata:
  namespace: kiali-namespace
  name: kiali
spec:
  istio_namespace: istio-system
  deployment:
    namespace: kiali-namespace
  auth:
    strategy: anonymous

Then, you can save the finished YAML file and apply it with kubectl apply -f.

It is recommended that you read The Kiali CR and the Example Install pages of the Installation Guide for more information about using the Kiali CR.

Also, for reference, see Kiali CR Reference which documents all available options.

2.1 - Authentication Strategies

Kiali supports five authentication mechanisms.

- The default authentication strategy for OpenShift clusters is openshift.

- The default authentication strategy for all other Kubernetes clusters is token.

All mechanisms other than anonymous support per-user namespace access control.

For multi-cluster, only anonymous and openid are currently supported.

Read the dedicated page of each authentication strategy to learn more.

2.1.1 - Anonymous strategy

Introduction

The anonymous strategy removes any authentication requirement. Users will

have access to Kiali without providing any credentials.

Although the anonymous strategy doesn’t provide any access protection, it’s

valid for some use-cases. Some examples known from the community:

- Exposing Kiali through a reverse proxy, where the reverse proxy is providing a custom authentication mechanism.

- Exposing Kiali on an already limited network of trusted users.

- When Kiali is accessed through kubectl port-forward or similar commands that allow usage of the cluster’s RBAC capabilities to limit access.

- When developing Kiali, where a developer has a private instance on their own machine.

The anonymous strategy will leave Kiali unsecured. If you are using this option, make sure that Kiali is available only to trusted users, or that access is protected by other means.

Set-up

To use the anonymous strategy, use the following configuration in the Kiali CR:

spec:
  auth:
    strategy: anonymous

The anonymous strategy doesn’t have any additional configuration.

Access control

When using the anonymous strategy, the content displayed in Kiali is based on

the permissions of the Kiali service account. By default, the Kiali service

account has cluster wide access and will be able to display everything in the

cluster.

OpenShift

If you are running Kiali in OpenShift, access can be customized by changing privileges to the Kiali ServiceAccount. For example, to reduce permissions to individual namespaces, first, remove the cluster-wide permissions granted by default:

oc delete clusterrolebindings kiali

Then grant the kiali role only in needed namespaces. For example:

oc adm policy add-role-to-user kiali system:serviceaccount:istio-system:kiali-service-account -n ${NAMESPACE}

View only

You can tell the Kiali Operator to install Kiali in “view only” mode (this works on both OpenShift and Kubernetes). To do so, set view_only_mode to true in the Kiali CR. This allows Kiali to read service mesh resources found in the cluster, but not to change them:

spec:
  deployment:
    view_only_mode: true

2.1.2 - Header strategy

Introduction

The header strategy assumes a reverse proxy is in front of Kiali, such as

OpenUnison or OAuth2 Proxy, injecting the user’s identity into each request to

Kiali as an Authorization header. This token can be an OpenID Connect

token or any other token the cluster recognizes.

In addition to a user token, the header strategy supports impersonation

headers. If the impersonation headers are present in the request, then Kiali

will act on behalf of the user specified by the impersonation (assuming the

token supplied in the Authorization header is authorized to do so).

The header strategy allows for namespace access control.

The header strategy is only supported for single-cluster deployments.

Set-up

The header strategy will work with any Kubernetes cluster. The token provided must be supported by that cluster. For instance, most “on-prem” clusters support OpenID Connect, but cloud hosted clusters do not. For clusters that don’t support a token, the reverse proxy can inject the impersonation headers instead.

To use the header strategy, use the following configuration in the Kiali CR:

spec:
  auth:
    strategy: header

The header strategy doesn’t have any additional configuration.

HTTP Header

The header strategy looks for a token in the Authorization HTTP header with the

Bearer prefix. The HTTP header should look like:

Authorization: Bearer TOKEN

Where TOKEN is the appropriate token for your cluster. This TOKEN will be

submitted to the API server via a TokenReview to validate the token ONLY

on the first access to Kiali. On subsequent calls the TOKEN is passed through

directly to the API server.
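To make the expected header shape concrete, the following Python sketch extracts the token from a set of request headers. It only illustrates the Authorization: Bearer TOKEN format described above. It is not Kiali's code, the function name extract_bearer_token is hypothetical, and token validation (the TokenReview step) is out of scope.

```python
from typing import Optional


def extract_bearer_token(headers: dict) -> Optional[str]:
    """Return the token from an 'Authorization: Bearer TOKEN' header, if present."""
    for name, value in headers.items():
        if name.lower() == "authorization":  # header names are case-insensitive
            scheme, _, token = value.partition(" ")
            if scheme == "Bearer" and token.strip():
                return token.strip()
    return None


print(extract_bearer_token({"Authorization": "Bearer TOKEN"}))
print(extract_bearer_token({"Authorization": "Basic dXNlcjpwYXNz"}))
```

The second call yields None because the header does not use the Bearer prefix.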

Security Considerations

Network Policies

A policy should be put in place to make sure that the only “client” for Kiali is the authenticating reverse proxy. This helps limit potential abuse and ensures that the authenticating reverse proxy is the source of truth for who accessed Kiali.

Short Lived Tokens

The authenticating reverse proxy should inject a short lived token in the Authorization header. A shorter lived token is less likely to be abused if leaked. Kiali will take whatever token is passed in the request, so as tokens are regenerated Kiali will use the new token.

Impersonation

TokenRequest API

The authenticating reverse proxy should use the TokenRequest API instead of static ServiceAccount tokens when possible while using impersonation. The ServiceAccount that can impersonate users and groups is privileged, so keeping its token short lived reduces the window in which a token leaked while being passed between different parts of the infrastructure can be abused.

Drop Incoming Impersonation Headers

The authenticating proxy MUST drop any headers it receives from a remote client that match the impersonation headers. Otherwise, a remote client could override not only which user the proxy authenticates, but also which groups that user is a member of.
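The filtering step can be sketched in Python as follows. The sketch relies on the fact that the Kubernetes impersonation headers (Impersonate-User, Impersonate-Group, Impersonate-Uid and Impersonate-Extra-*) all share the Impersonate- prefix. How a particular reverse proxy exposes request headers will differ, so the dictionary-based helper drop_impersonation_headers is purely illustrative.

```python
def drop_impersonation_headers(headers: dict) -> dict:
    """Return a copy of headers without any client-supplied Impersonate-* entries."""
    return {
        name: value
        for name, value in headers.items()
        if not name.lower().startswith("impersonate-")
    }


# Hypothetical incoming request that tries to smuggle in an identity.
incoming = {
    "Accept": "application/json",
    "Impersonate-User": "admin",
    "impersonate-group": "system:masters",
}
print(drop_impersonation_headers(incoming))
```

Only after this cleanup should the proxy add its own impersonation headers for the authenticated user.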

2.1.3 - OpenID Connect strategy

Introduction

The openid authentication strategy lets you integrate Kiali with an external identity provider that implements OpenID Connect, and allows users to log in to Kiali using their existing accounts of a third-party system.

If your Kubernetes cluster is also integrated with your OpenID provider, then Kiali’s openid strategy can offer namespace access control.

Kiali only supports the authorization code flow of the OpenID Connect spec.

Requirements

Kiali’s signing key must be 16, 24 or 32 bytes long. If you install Kiali via the operator and don’t set a custom signing key, the operator should create a 16-byte signing key.
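If you do set a custom signing key, a quick length check avoids a misconfiguration. The Python sketch below validates the byte length and generates a random alphanumeric key of a valid size. Both helper names are hypothetical, and the choice of an alphanumeric alphabet is an assumption made so that one character equals one byte.

```python
import secrets
import string

VALID_KEY_LENGTHS = (16, 24, 32)


def is_valid_signing_key(key: str) -> bool:
    """Return True if the key is 16, 24 or 32 bytes long when UTF-8 encoded."""
    return len(key.encode("utf-8")) in VALID_KEY_LENGTHS


def generate_signing_key(length: int = 16) -> str:
    """Generate a random ASCII key so that characters and bytes match one to one."""
    if length not in VALID_KEY_LENGTHS:
        raise ValueError("length must be 16, 24 or 32")
    alphabet = string.ascii_letters + string.digits
    return "".join(secrets.choice(alphabet) for _ in range(length))


key = generate_signing_key(32)
print(len(key), is_valid_signing_key(key))
```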

If you don’t need namespace access control support, you can use any working OpenID server where Kiali can be configured as a client application.

If you do need namespace access control support, you need either:

- A Kubernetes cluster configured with OpenID Connect integration, which results in the API server accepting tokens issued by your identity provider.

- A replacement or reverse proxy for the Kubernetes cluster API capable of handling the OIDC authentication.

The first option is preferred if you can manipulate your cluster API server startup flags, which results in your cluster also being integrated with the external OpenID provider.

The second option is provided for cases where you are using a managed Kubernetes and your cloud provider does not support configuring OpenID integration. Kiali assumes an implementation of a Kubernetes API server. For example, a community user has reported successfully configuring Kiali’s OpenID strategy by using kube-oidc-proxy, which is a reverse proxy that handles the OpenID authentication and forwards the authenticated requests to the Kubernetes API.

Set-up with namespace access control support

Assuming you already have a working Kubernetes cluster with OpenID integration (or a working alternative like kube-oidc-proxy), you should already have configured an application or a client in your OpenID server (some cloud providers configure this app/client automatically for you). You must re-use this existing application/client by adding the root path of your Kiali instance as an allowed/authorized callback URL. If the OpenID server provided you a client secret for the application/client, or if you manually set a client secret, issue the following command to create a Kubernetes secret holding the OpenID client secret:

kubectl create secret generic kiali --from-literal="oidc-secret=$CLIENT_SECRET" -n $NAMESPACE

where $NAMESPACE is the namespace where you installed Kiali and $CLIENT_SECRET is the secret you configured or that was provided by your OpenID server. If Kiali is already running, you may need to restart the Kiali pod so that the secret is mounted in Kiali.

Then, to enable the OpenID Connect strategy, the minimal configuration you need to set in the Kiali CR is like the following:

spec:
  auth:
    strategy: openid
    openid:
      client_id: "kiali-client"
      issuer_uri: "https://openid.issuer.com"

This assumes that your Kubernetes cluster is configured with OpenID Connect integration. In this case, the client_id and issuer_uri attributes must match the --oidc-client-id and --oidc-issuer-url flags used to start the cluster API server. If these values don’t match, users will fail to log in to Kiali.

If you are using a replacement or a reverse proxy for the Kubernetes API server, the minimal configuration is like the following:

spec:
  auth:
    strategy: openid
    openid:
      api_proxy: "https://proxy.domain.com:port"
      api_proxy_ca_data: "..."
      client_id: "kiali-client"
      issuer_uri: "https://openid.issuer.com"

The values of client_id and issuer_uri must match the values in the configuration of your reverse proxy or cluster API replacement. The api_proxy attribute is the URI of the reverse proxy or cluster API replacement (only HTTPS is allowed). The api_proxy_ca_data attribute is the public certificate authority file encoded as a base64 string, used to trust the secure connection.
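One way to produce the base64 string for api_proxy_ca_data is sketched below. The PEM content is a truncated placeholder and the function name is hypothetical; in practice you would read the bytes from your own CA file.

```python
import base64


def encode_ca_bundle(pem_bytes: bytes) -> str:
    """Base64-encode a PEM CA certificate into a single-line string."""
    return base64.b64encode(pem_bytes).decode("ascii")


# Placeholder PEM content; in practice read it from your CA file, e.g.
# pem = pathlib.Path("ca.crt").read_bytes()
pem = b"-----BEGIN CERTIFICATE-----\nMIIB...\n-----END CERTIFICATE-----\n"
encoded = encode_ca_bundle(pem)
print(encoded)
assert base64.b64decode(encoded) == pem  # the encoding round-trips
```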

Set-up with no namespace access control support

Register Kiali as a client application in your OpenID server. Use the root path of your Kiali instance as the callback URL. If the OpenID server provides you a client secret, or if you manually set a client secret, issue the following command to create a Kubernetes secret holding the OpenID client secret:

kubectl create secret generic kiali --from-literal="oidc-secret=$CLIENT_SECRET" -n $NAMESPACE

where $NAMESPACE is the namespace where you installed Kiali and $CLIENT_SECRET is the secret you configured or that was provided by your OpenID server. If Kiali is already running, you may need to restart the Kiali pod so that the secret is mounted in Kiali.

Then, to enable the OpenID Connect strategy, the minimal configuration you need to set in the Kiali CR is like the following:

spec:
  auth:
    strategy: openid
    openid:
      client_id: "kiali-client"
      disable_rbac: true
      issuer_uri: "https://openid.issuer.com"

Additional configurations

Configuring the displayed user name

The Kiali front-end will, by default, retrieve the string of the sub claim of the OpenID token and display it as the user name. You can customize which field to display as the user name by setting the username_claim attribute of the Kiali CR. For example:

spec:
  auth:
    openid:
      username_claim: "email"

If you enabled namespace access control, you will want the username_claim attribute to match the --oidc-username-claim flag used to start the Kubernetes API server, or the equivalent option if you are using a replacement or reverse proxy of the API server. Otherwise, any user-friendly claim will do, as it is purely informational.

Configuring requested scopes

By default, Kiali will request access to the openid, profile and email standard scopes. If you need a different set of scopes, you can set the scopes attribute in the Kiali CR. For example:

spec:
  auth:
    openid:
      scopes:
      - "openid"
      - "email"
      - "groups"

The openid scope is forced. If you don’t add it to the list of scopes to request, Kiali will still request it from the identity provider.
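The scope rules above can be summarized in a small sketch. This illustrates the documented behavior only and is not Kiali’s implementation; the function name is hypothetical and the position of openid in the resulting list is an assumption.

```python
from typing import List, Optional

DEFAULT_SCOPES = ["openid", "profile", "email"]


def effective_scopes(configured: Optional[List[str]]) -> List[str]:
    """Return the scopes that would be requested for a given `scopes` setting."""
    scopes = list(configured) if configured else list(DEFAULT_SCOPES)
    if "openid" not in scopes:
        scopes.insert(0, "openid")  # the openid scope is always requested
    return scopes


print(effective_scopes(None))                 # ['openid', 'profile', 'email']
print(effective_scopes(["email", "groups"]))  # ['openid', 'email', 'groups']
```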

Configuring authentication timeout

When the user is redirected to the external authentication system, by default Kiali will wait at most 5 minutes for the user to authenticate. After that time has elapsed, Kiali will reject authentication. You can adjust this timeout by setting the authentication_timeout attribute to the maximum number of seconds that Kiali should wait. For example:

spec:
  auth:
    openid:
      authentication_timeout: 60 # Wait only one minute.

Configuring allowed domains

Some identity providers use a shared login: even if you configure your own application under your domain (or organization account), login can succeed for a user who does not belong to your account or organization. Google is an example of this kind of provider.

To prevent foreign users from logging into your Kiali instance, you can configure a list of allowed domains:

spec:
  auth:
    openid:
      allowed_domains:
      - example.com
      - foo.com

The e-mail reported by the identity provider is used for the validation. Login will be allowed if the domain part of the e-mail is listed as an allowed domain; otherwise, the user will be rejected. Naturally, you will need to configure the email scope to be requested.

There is a special case: some identity providers include an hd claim in the id_token. If this claim is present, it is used instead of extracting the domain from the user e-mail. For example, Google Workspace (aka G Suite) includes this hd claim for hosted domains.
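The decision described above can be sketched as follows. This is a simplified illustration, not Kiali’s code: the function name is hypothetical, claims are modeled as a plain mapping, and domains are compared by exact string match as a simplifying assumption.

```python
from typing import Iterable, Mapping


def is_domain_allowed(claims: Mapping[str, str], allowed_domains: Iterable[str]) -> bool:
    """Apply the allowed-domains rule to the claims of an ID token."""
    allowed = set(allowed_domains)
    hosted_domain = claims.get("hd")
    if hosted_domain:
        # The hd claim, when present, is used instead of the e-mail domain.
        return hosted_domain in allowed
    email = claims.get("email", "")
    if "@" not in email:
        return False  # no usable e-mail claim, so the user is rejected
    domain = email.rsplit("@", 1)[1]
    return domain in allowed


allowed = ["example.com", "foo.com"]
print(is_domain_allowed({"email": "alice@example.com"}, allowed))  # True
print(is_domain_allowed({"email": "bob@gmail.com"}, allowed))      # False
```

The second call shows why the email scope matters: without an e-mail or hd claim there is nothing to validate against, and the user is rejected.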

Using an OpenID provider with a self-signed certificate

If your OpenID provider is using a self-signed certificate, you can disable certificate validation by setting insecure_skip_verify_tls to true in the Kiali CR:

spec:
  auth:
    openid:
      insecure_skip_verify_tls: true

However, if your organization or internal network has an internal trusted certificate authority (CA), and your OpenID server is using a certificate issued by this CA, you can configure Kiali to trust certificates from this CA, rather than disabling verification. For this, create a ConfigMap named kiali-cabundle containing the root CA certificate (the public component) under the openid-server-ca.crt key:

apiVersion: v1
kind: ConfigMap
metadata:
  name: kiali-cabundle
  namespace: istio-system # This is Kiali's install namespace
data:
  openid-server-ca.crt: <the public component of your CA root certificate>

After restarting the Kiali pod, Kiali will trust this root certificate for all HTTPS requests related to OpenID authentication.

Using an HTTP/HTTPS Proxy

In some network configurations, proxies are needed to connect to the outside world. OpenID requires outside world connections to get metadata and do key validation, so you can configure the proxies by setting the http_proxy and https_proxy keys in the Kiali CR. They use the same format as the HTTP_PROXY and HTTPS_PROXY environment variables.

spec:
  auth:
    openid:
      http_proxy: http://USERNAME:PASSWORD@10.0.1.1:8080/
      https_proxy: https://USERNAME:PASSWORD@10.0.0.1:8080/
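If the proxy credentials contain characters such as `@` or `:`, they generally need to be percent-encoded to form a valid URL. The sketch below assumes the value is parsed as a standard URL; the function name and the sample password are hypothetical.

```python
from urllib.parse import quote


def build_proxy_url(scheme: str, username: str, password: str, host: str, port: int) -> str:
    """Build a proxy URL, percent-encoding the credentials."""
    user = quote(username, safe="")
    secret = quote(password, safe="")
    return f"{scheme}://{user}:{secret}@{host}:{port}/"


print(build_proxy_url("http", "USERNAME", "p@ss:word", "10.0.1.1", 8080))
# http://USERNAME:p%40ss%3Aword@10.0.1.1:8080/
```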

Passing additional options to the identity provider

When users click on the Login button on Kiali, a redirection occurs to the authentication page of the external identity provider. Kiali sends a fixed set of parameters to the identity provider to enable authentication. If you need to add an additional set of parameters to your identity provider, you can use the additional_request_params setting of the Kiali CR, which accepts key-value pairs. For example:

spec:
  auth:
    openid:
      additional_request_params:
        prompt: login

The prompt parameter is a standard OpenID parameter. When the login value is passed in this parameter, the identity provider is instructed to ask for user credentials regardless of whether the user already has an active session because of a previous login in some other system.

If your OpenID provider supports other non-standard parameters, you can specify the ones you need in this additional_request_params setting.

Take into account that you should not add the client_id, response_type, redirect_uri, scope, nonce or state parameters to this list. These are already in use by Kiali and some already have a dedicated setting.
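To make the restriction concrete, the sketch below merges additional parameters into an authorization request and refuses the reserved names listed above. It is an illustration under stated assumptions, not Kiali’s implementation: the function name, the sample values and the `/authorize` endpoint path are all hypothetical.

```python
from typing import Dict
from urllib.parse import urlencode

# Parameters that Kiali already sends itself, per the list above.
RESERVED_PARAMS = {"client_id", "response_type", "redirect_uri", "scope", "nonce", "state"}


def merge_request_params(base: Dict[str, str], additional: Dict[str, str]) -> Dict[str, str]:
    """Merge extra parameters into the base set, refusing reserved names."""
    clashes = RESERVED_PARAMS.intersection(additional)
    if clashes:
        raise ValueError(f"reserved parameters not allowed: {sorted(clashes)}")
    return {**base, **additional}


# Hypothetical values, for illustration only.
base_params = {
    "client_id": "kiali-client",
    "response_type": "code",
    "redirect_uri": "https://kiali.example.com/kiali",
    "scope": "openid profile email",
    "state": "example-state",
    "nonce": "example-nonce",
}
params = merge_request_params(base_params, {"prompt": "login"})
print("https://openid.issuer.com/authorize?" + urlencode(params))
```

The additional parameters simply end up as extra query parameters on the redirect to the identity provider.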

Provider-specific instructions

Using with Keycloak

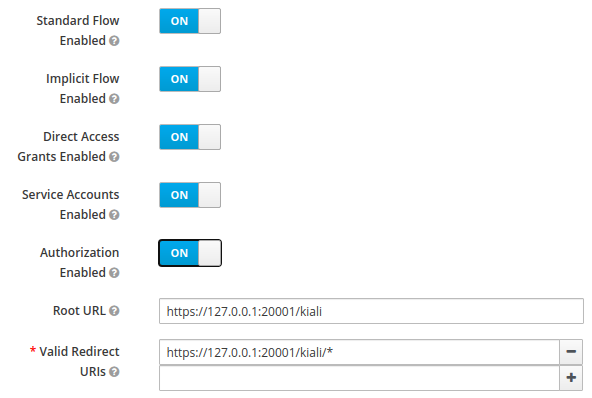

When using OpenId with Keycloak, you will need to enable the Standard Flow Enabled

option on the Client (in the Administration Console):

The Standard Flow described on the options is the same as the authorization code flow from the rest of the documentation.

Using with Google Cloud Platform / GKE OAuth2

If you are using Google Cloud Platform (GCP) and its products such as Google Kubernetes Engine (GKE), it should be straightforward to configure Kiali’s OpenID strategy to authenticate using your Google credentials.

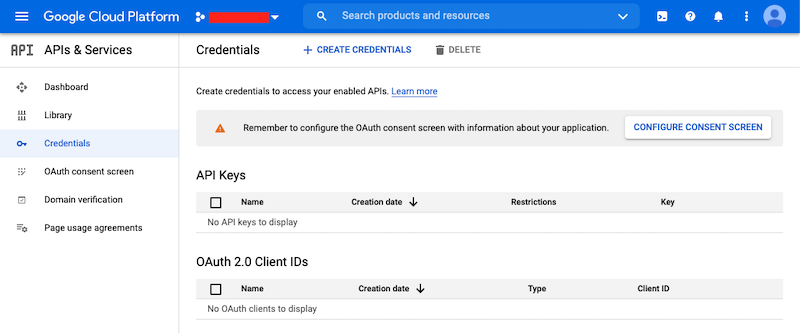

First, you’ll need to go to your GCP Project and to the Credentials screen which is available at (Menu Icon) > APIs & Services > Credentials.

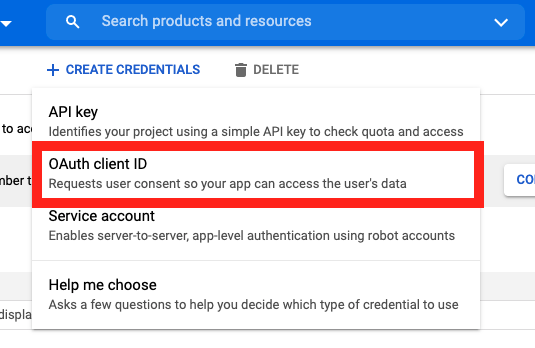

On the Credentials screen you can select to create a new OAuth client ID.

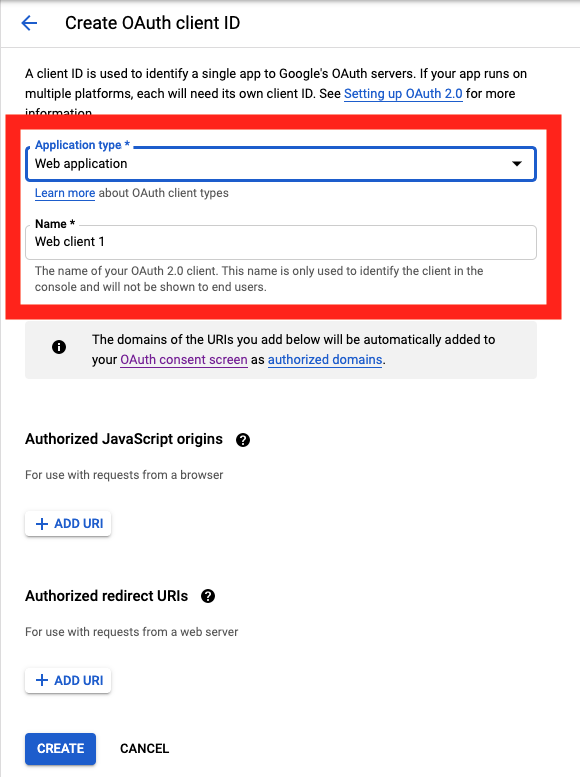

On the Create OAuth client ID screen, set the Application type to Web Application and enter a name for your key.

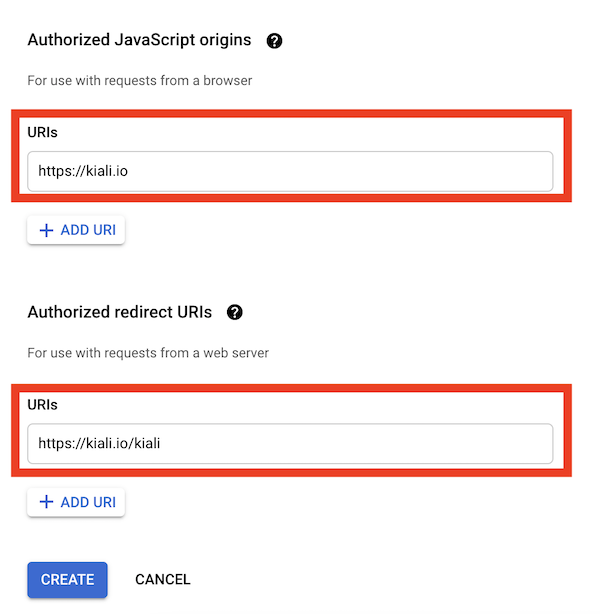

Then enter the Authorized JavaScript origins and Authorized redirect URIs for your project.

You can enter localhost as appropriate during testing. You can also enter multiple URIs as appropriate.

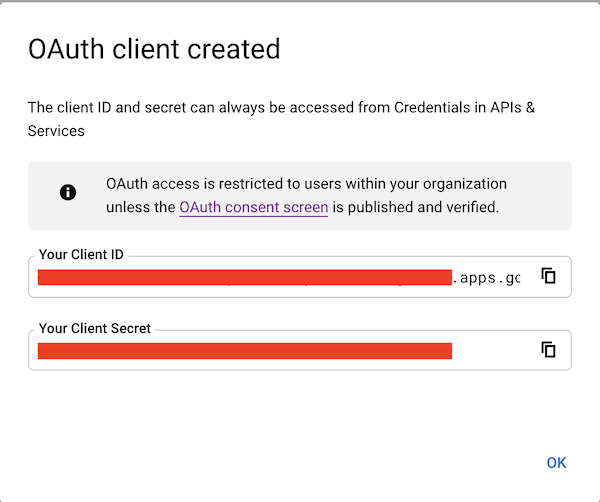

After clicking Create you’ll be shown your newly minted client ID and secret. Both are needed for your Kiali CR YAML and Kiali secrets files.

You’ll need to update your Kiali CR file to include the following auth block.

spec:
  auth:
    strategy: "openid"
    openid:
      client_id: "<your client id from GCP>"
      disable_rbac: true
      issuer_uri: "https://accounts.google.com"
      scopes: ["openid", "email"]
      username_claim: "email"

issuer_uri should be https://accounts.google.com.

Finally you will need to create a secret, if you don’t have one already, that sets the oidc-secret for the openid flow.

apiVersion: v1
kind: Secret
metadata:
  name: kiali
  namespace: istio-system
  labels:
    app: kiali
type: Opaque
data:
  oidc-secret: "<base64 encode your client secret from GCP and enter here>"

Once all these settings are complete, apply your Kiali CR and the Kiali Secret to your cluster. You may need to restart your Kiali pod to pick up the Secret if you add the Secret after the Kiali pod is created.
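The oidc-secret value under data must be base64 encoded. The following sketch shows one way to build the Secret shown above programmatically; the function name and the sample client secret are hypothetical placeholders.

```python
import base64
import json


def build_kiali_secret(client_secret: str, namespace: str = "istio-system") -> dict:
    """Build a Secret manifest with the client secret base64-encoded under oidc-secret."""
    encoded = base64.b64encode(client_secret.encode("utf-8")).decode("ascii")
    return {
        "apiVersion": "v1",
        "kind": "Secret",
        "metadata": {"name": "kiali", "namespace": namespace, "labels": {"app": "kiali"}},
        "type": "Opaque",
        "data": {"oidc-secret": encoded},
    }


# "my-client-secret" is a placeholder for the secret shown by GCP.
print(json.dumps(build_kiali_secret("my-client-secret"), indent=2))
```

The JSON output can be saved to a file and applied with kubectl apply -f, since kubectl accepts JSON manifests as well as YAML.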

Using with Azure: AKS and AAD

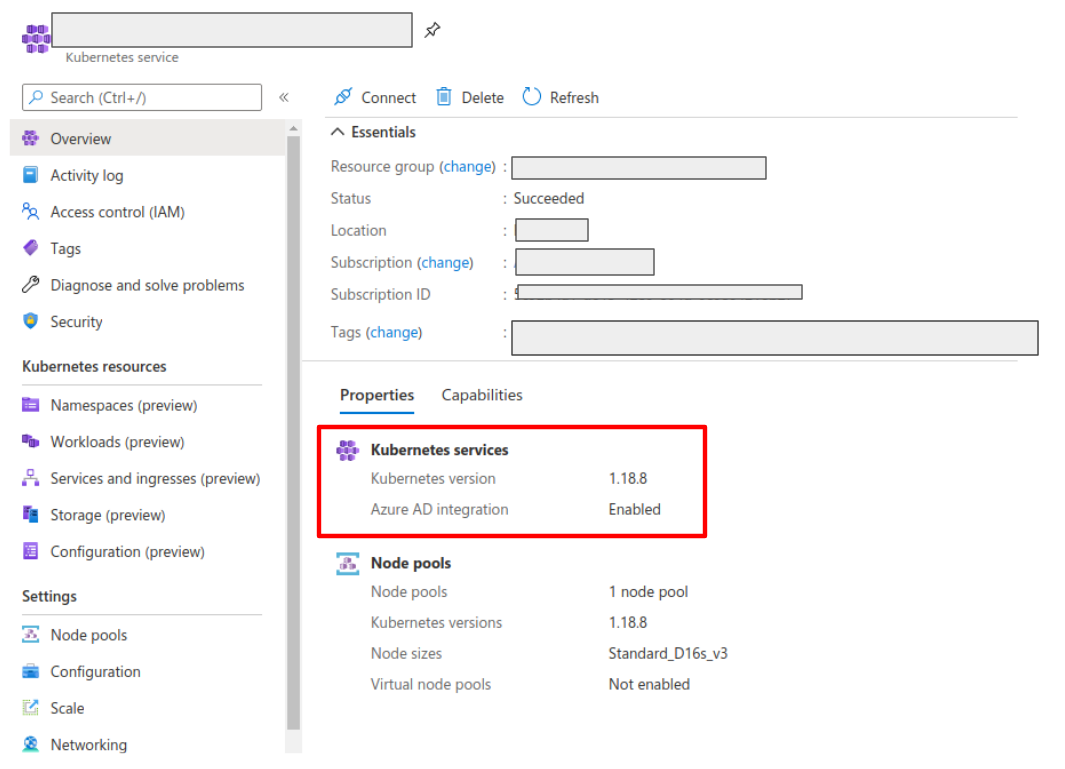

AKS has support for a feature named AKS-managed Azure Active Directory, which enables integration between AKS and AAD. This has the advantage that users can use their AAD credentials to access AKS clusters and can also use Kubernetes RBAC features to assign privileges to AAD users.

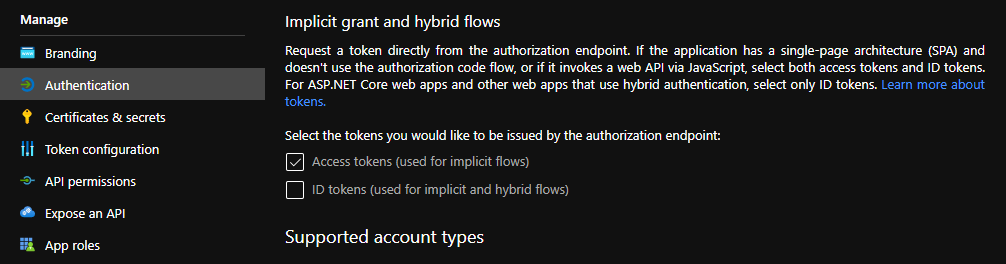

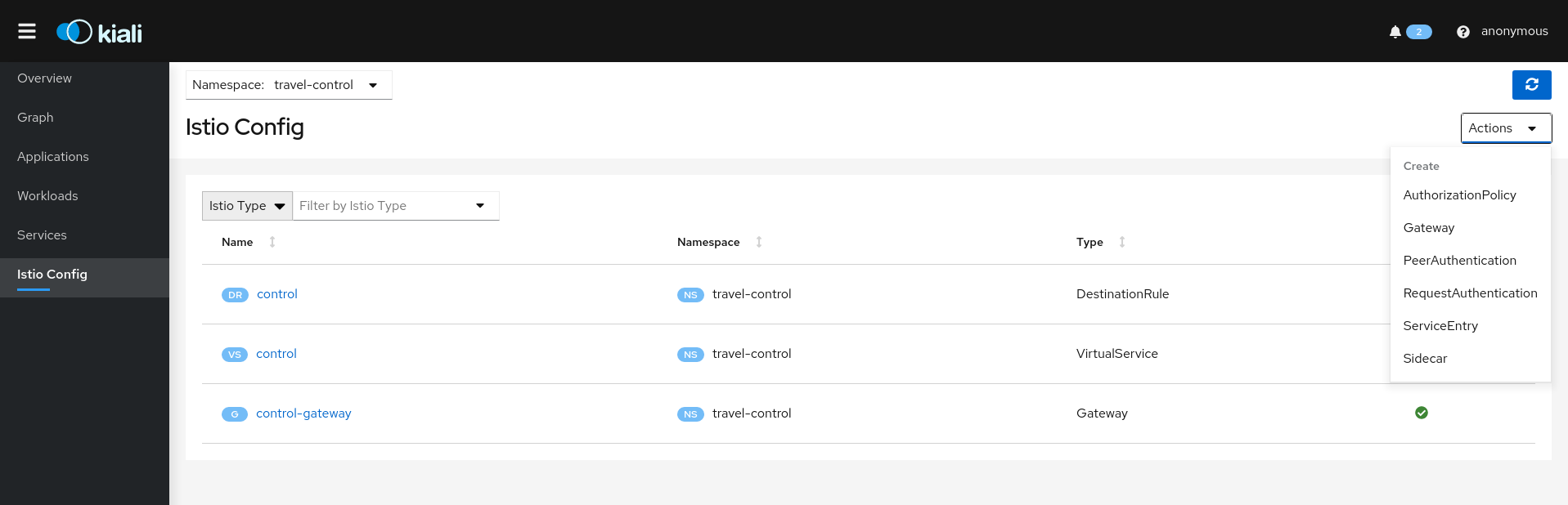

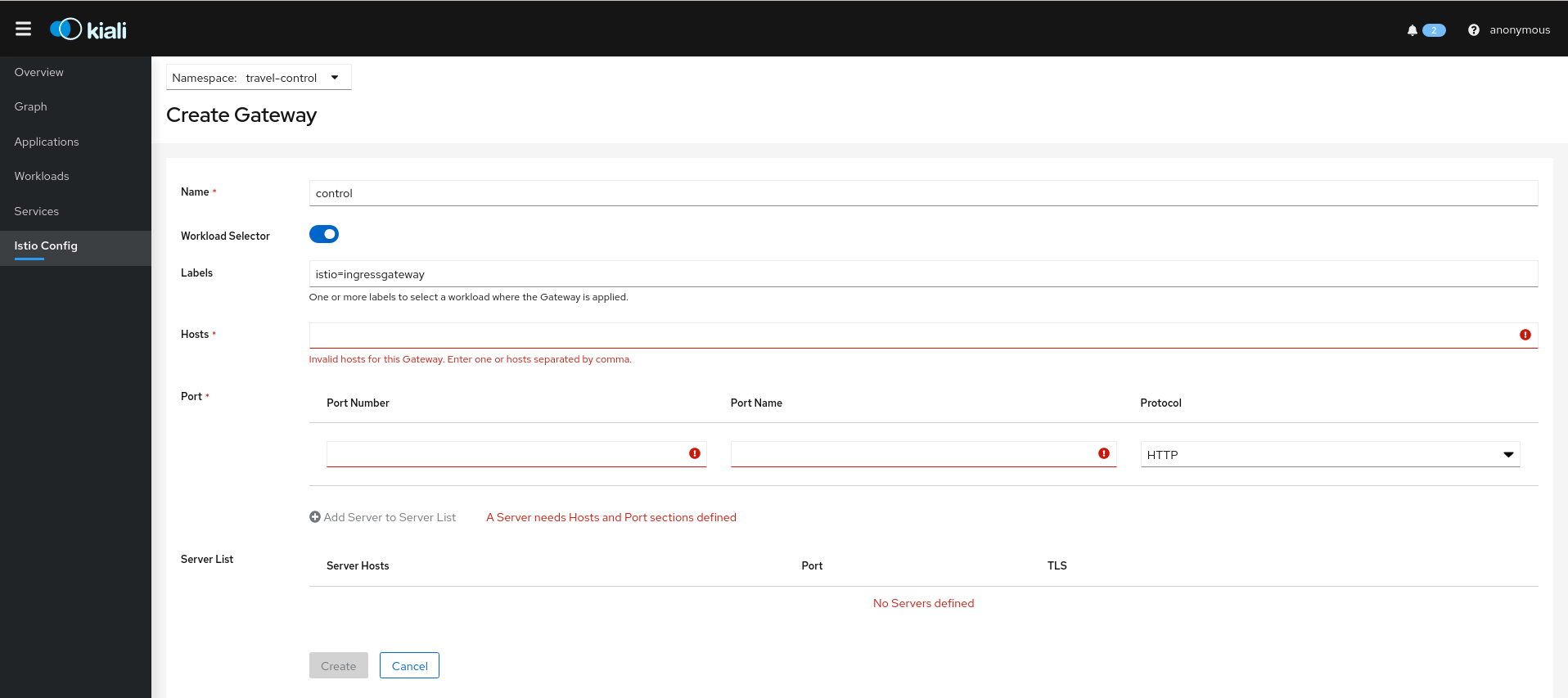

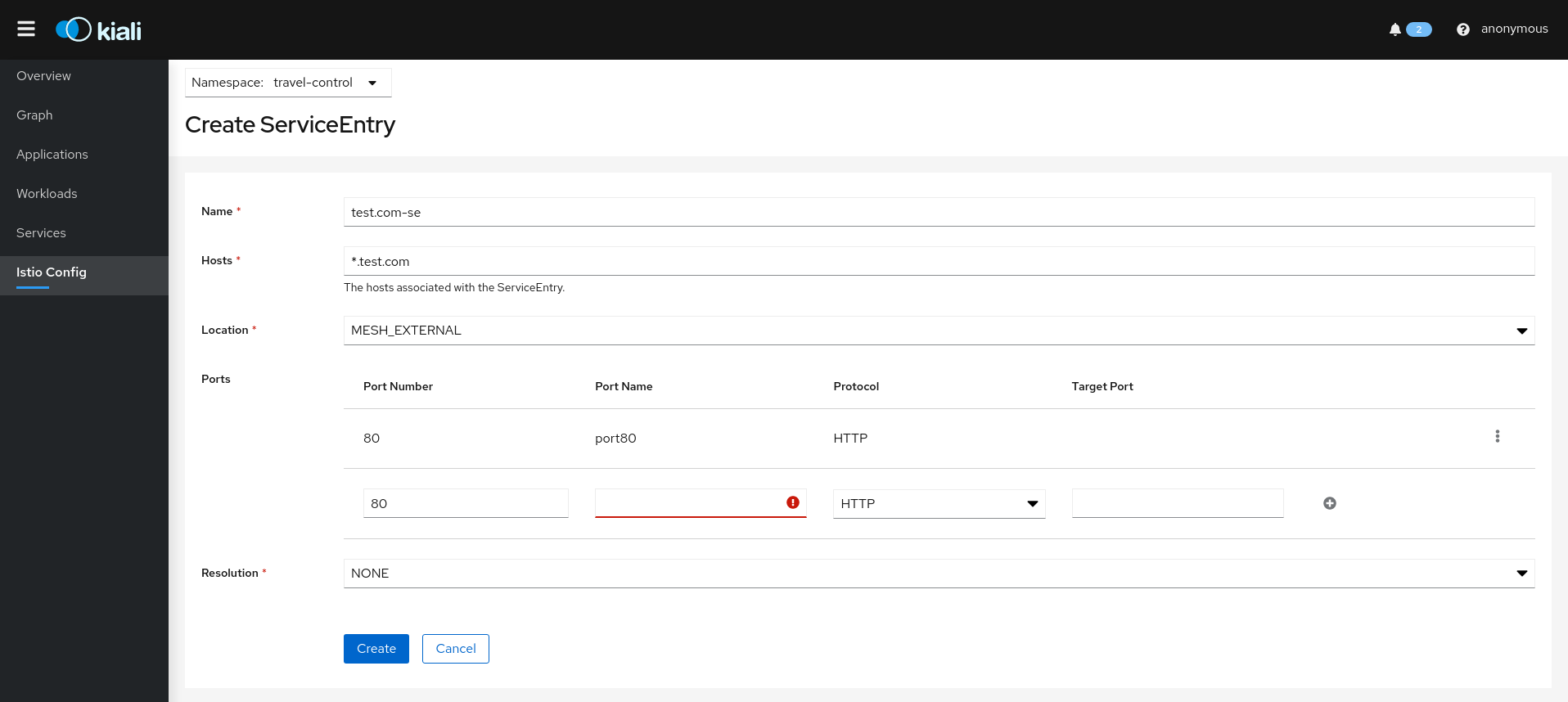

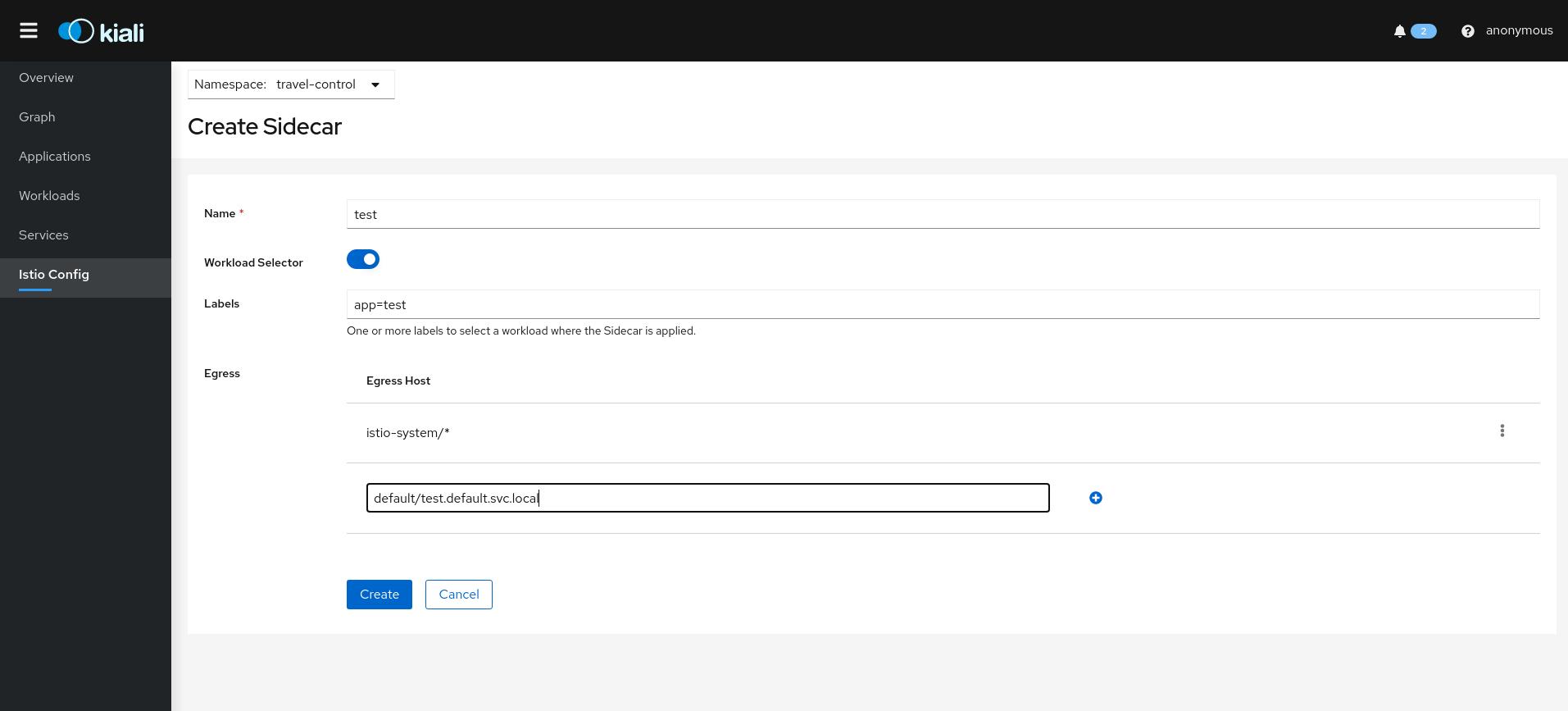

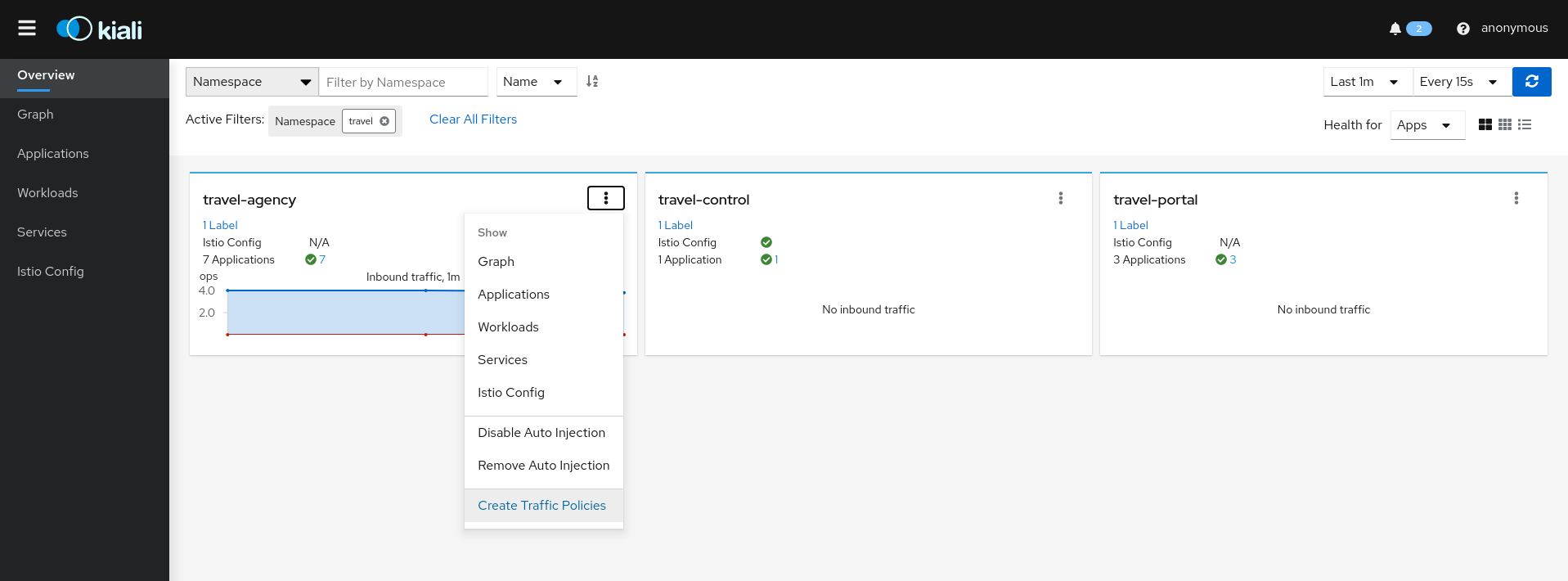

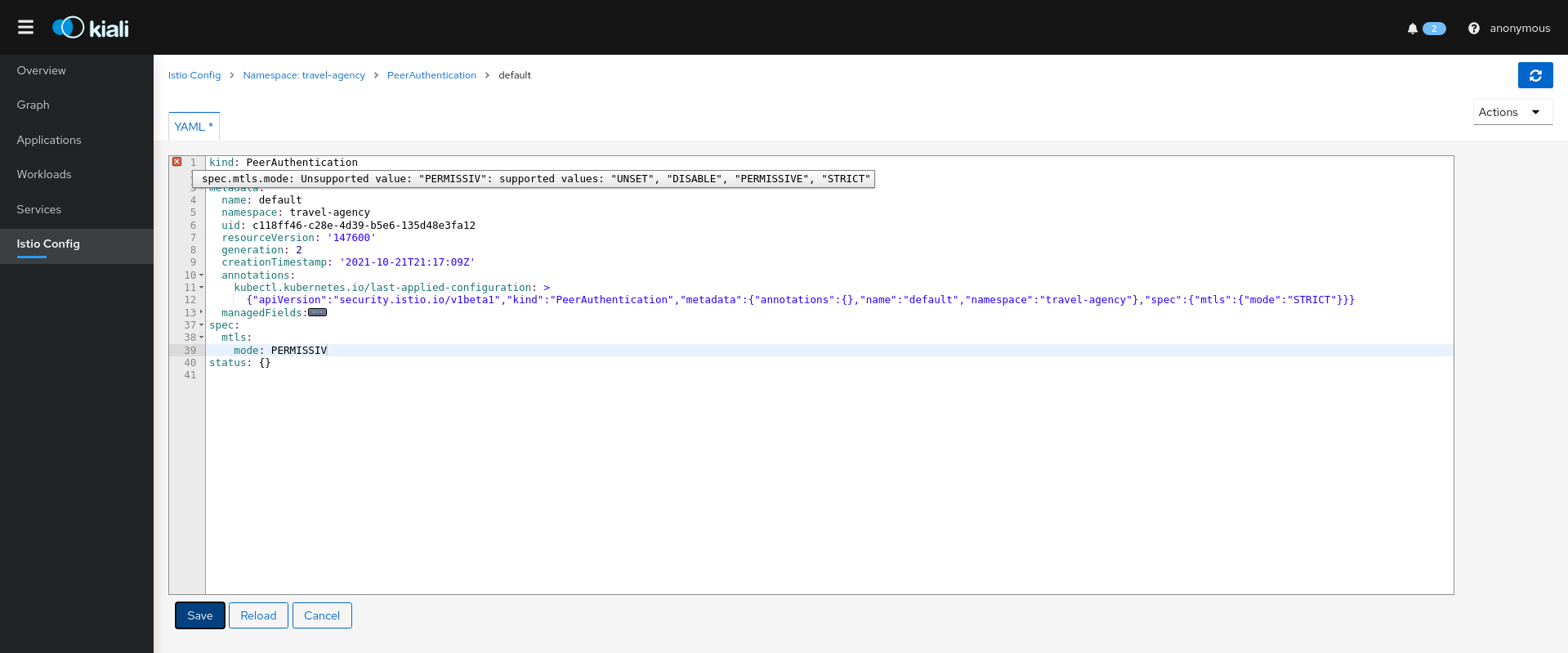

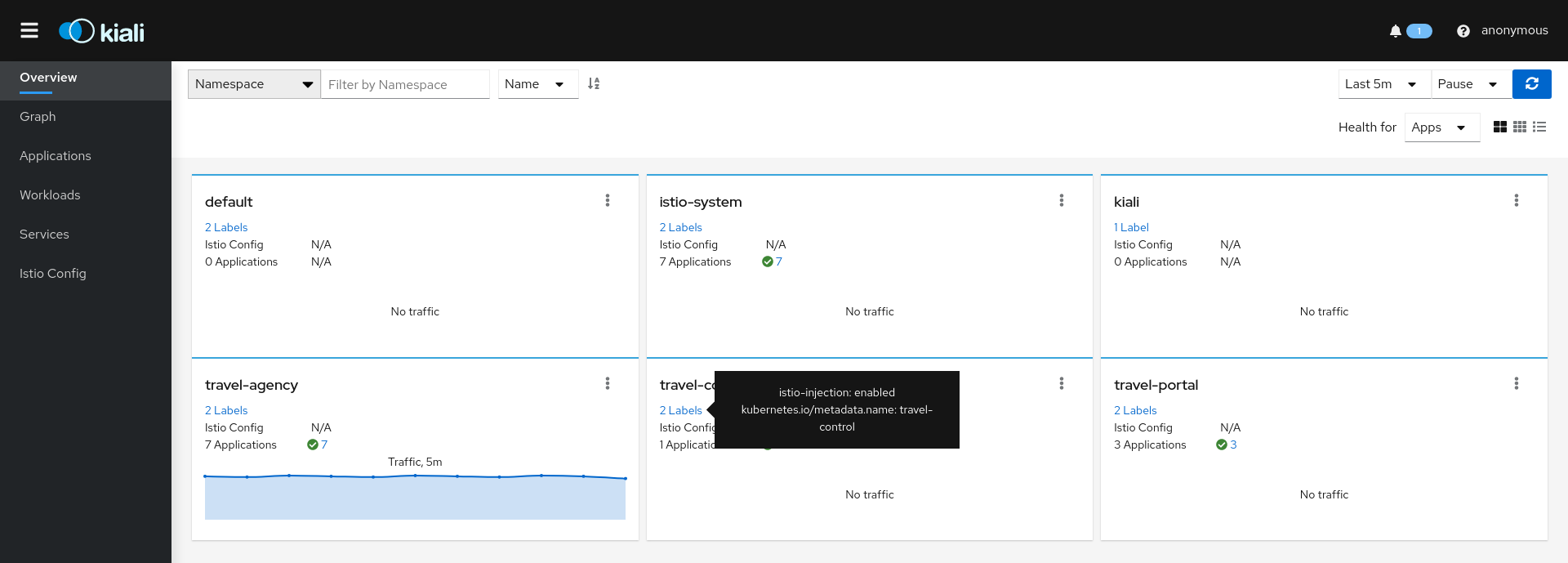

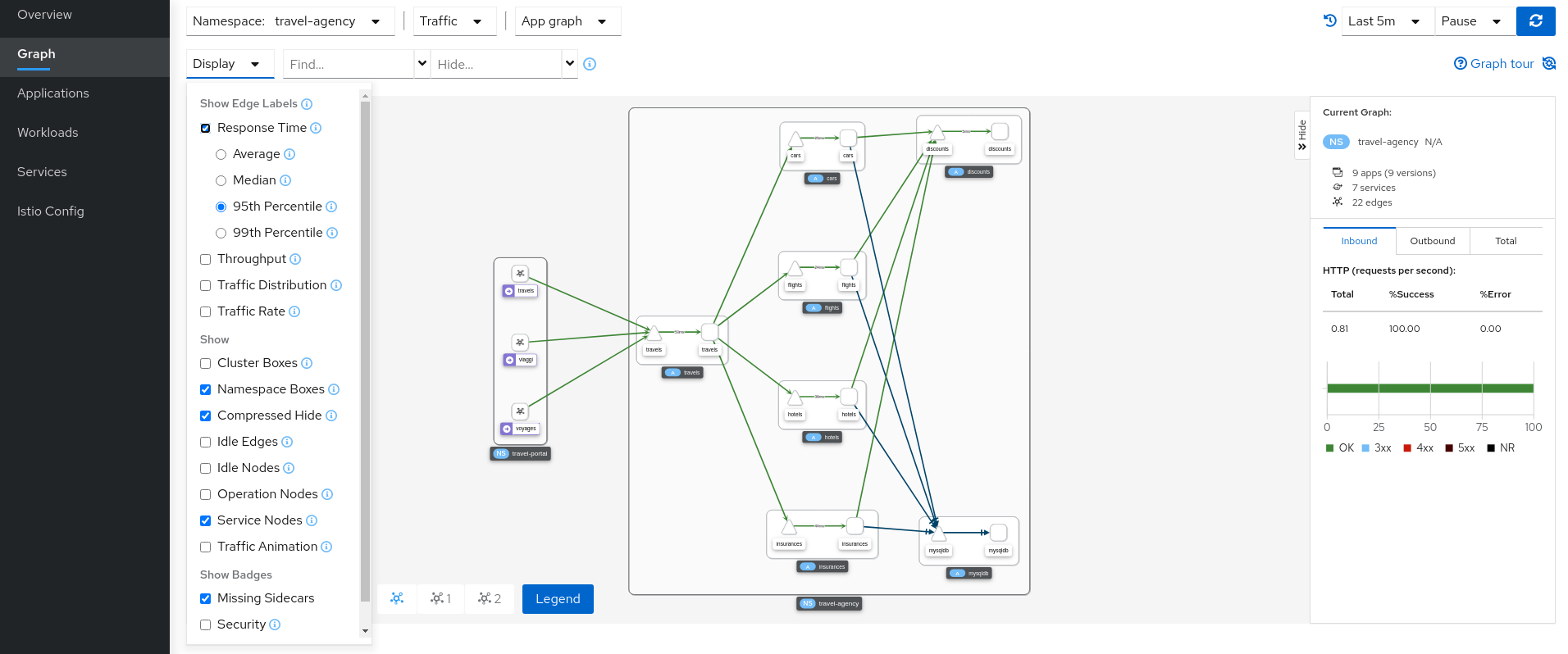

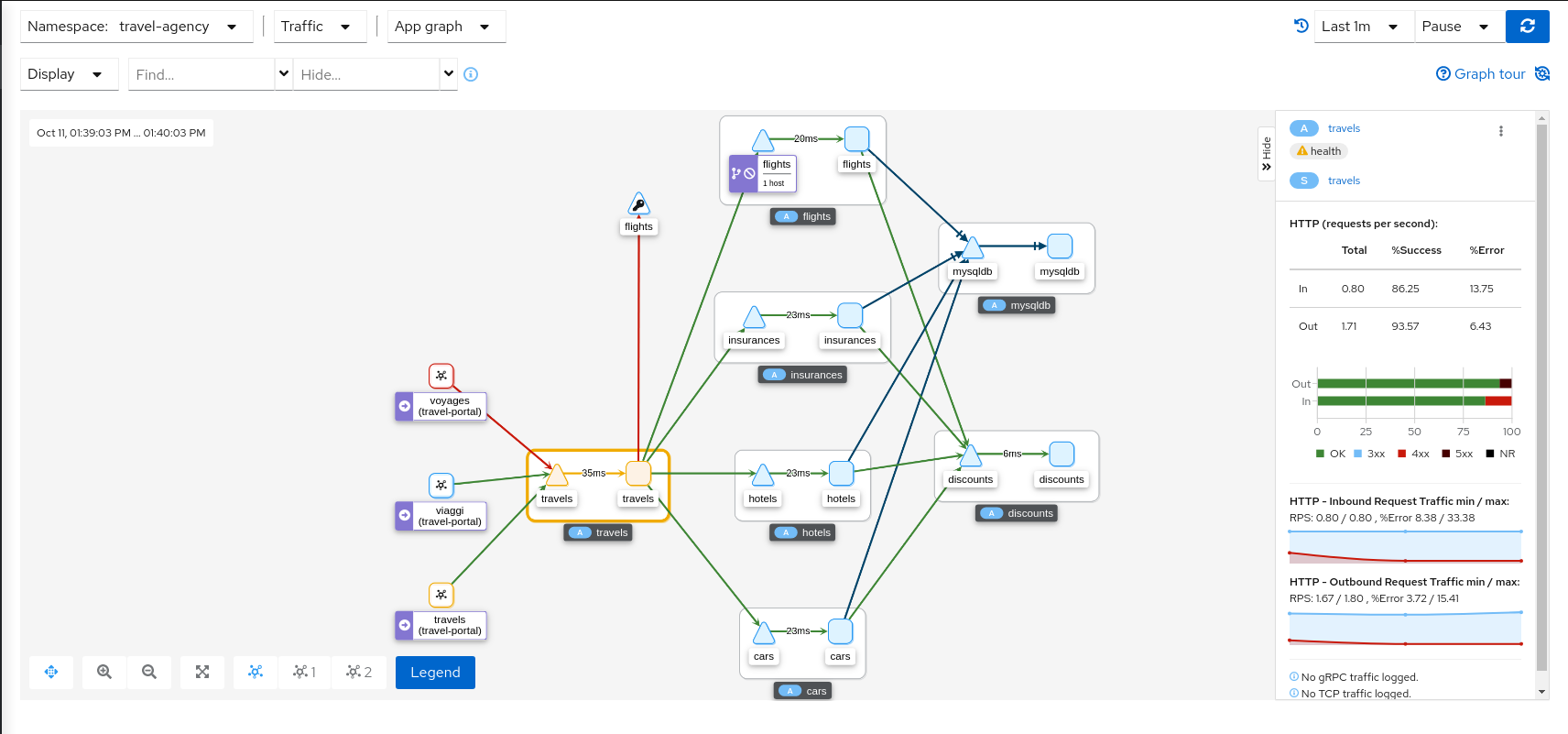

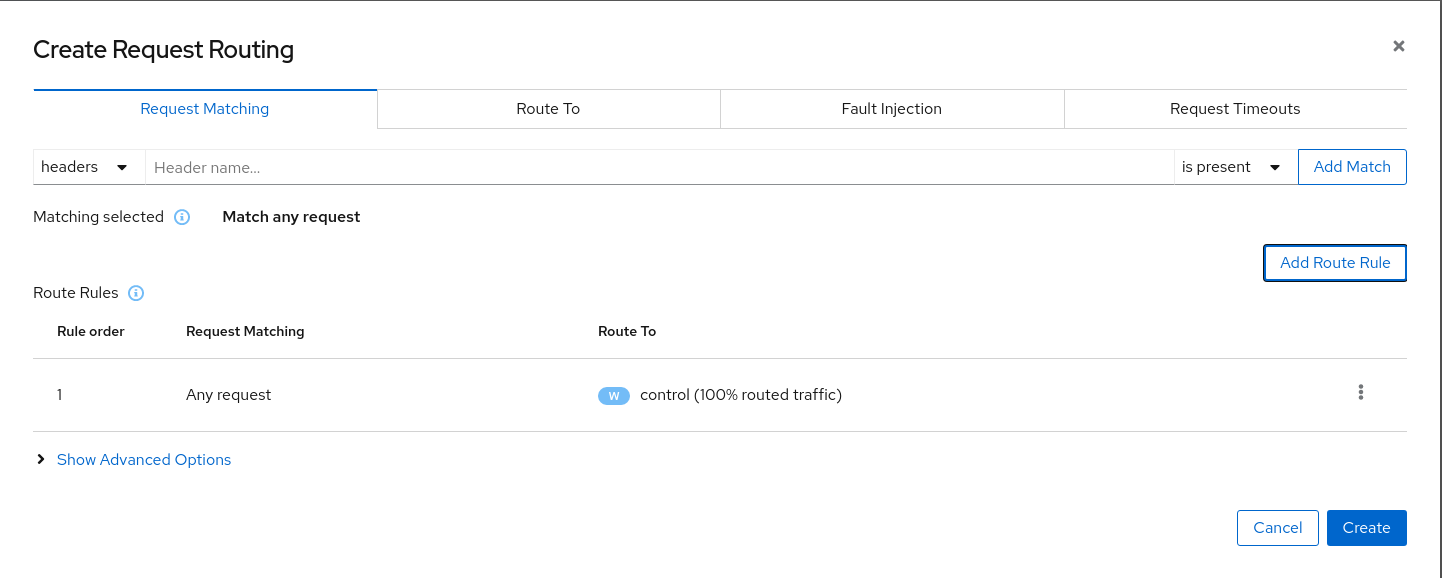

However, Azure implements this integration via the Kubernetes Webhook Token Authentication rather than via the Kubernetes OpenID Connect Tokens authentication (see the Azure AD integration section in AKS Concepts documentation). Because of this difference, authentication in AKS behaves slightly differently from a standard OpenID setup, but Kiali’s OpenID authentication strategy can still be used with namespace access control support by following the next steps.